CSV

- Capella Analytics

- reference

This topic explains how to use the COPY TO statement to export data from a database to Amazon S3 or Google Cloud Storage (GCS) in CSV format.

COPY TO CSV introduces the ability to write results of queries or entire collections to external stores (such as AWS S3 and Google Cloud Storage) in CSV format.

For more information, see Query Data in Amazon S3 and Query Data in Google Cloud Storage (GCS).

| To be able to read or write data to or from external cloud storage exclusive permissions are required. For more information see Cloud Read/Write Permissions. |

Syntax

CopyTo EBNF

CopyTo ::= "COPY" SourceDefinition

"TO" ContainerIdentifier

"AT" LinkQualifiedName

OutputClause

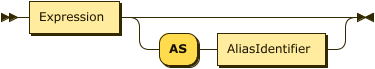

WithClauseShow SourceDefinition Diagram

Show OutputClause Diagram

Show OutputPathExpr Diagram

Show OverClause Diagram

Show PartitionClause Diagram

Show PartitionExpr Diagram

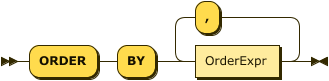

Show OrderClause Diagram

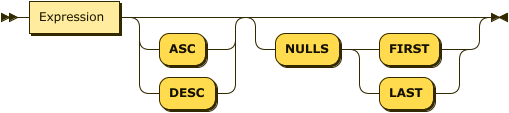

Show OrderExpr Diagram

Show SchemaClause Diagram

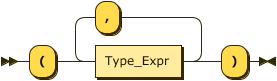

Show CSVTypeExpr Diagram

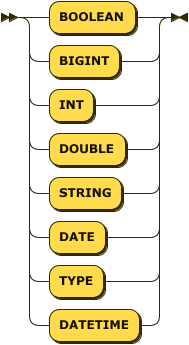

Show TypeExpr Diagram

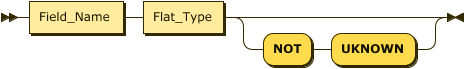

Show FlatType Diagram

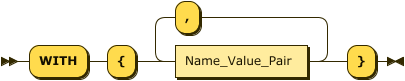

Show WithClause Diagram

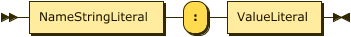

Show NameValuePair Diagram

Example

COPY (

SELECT o.custid, o.order_date, o.orderno, o.gender, o.zip

FROM Orders o

) AS t

TO myS3Bucket

AT myS3Link

PATH ("commerce/Orders/zip-" || zip || "/")

OVER (PARTITION BY t.zip AS zip)

AS (

custid string NOT UNKNOWN,

orderno int,

order_date string,

gender string,

zip string

)

WITH {

"format": "csv",

"header": true

}Arguments

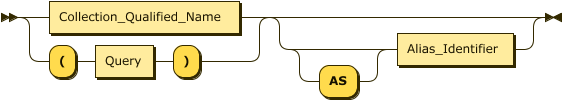

- SourceDefinition

-

As the source, you specify either the fully qualified name of a collection or provide a query.

-

If you specify a collection name, then the whole collection—or view or synonym—is the source of data to copy.

-

If you specify a query, then the result of that query is the source of data.

-

- TO

-

The

TOclause identifies the bucket name on the external data source, an Amazon S3 bucket in this case.

- AT

-

The

ATclause specifies the name of the link that contains credentials for the S3 bucket name. The specified link must have a type of S3.

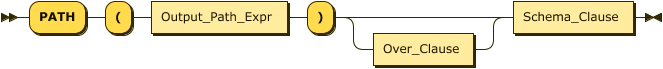

- OutputClause

-

The

OutputClausedefines the destination path for the output objects. You supply one or moreOutputPathExprexpressions to identify the path prefixes. You can include aliases. If you supply more than one expression, Capella Analytics concatenates the values of allOutputPathExprand supplies/characters as the path separators. As a result, you do not need to include slash characters betweenOutputPathExprexpressions.

| The target directory that you specify in the destination path must be empty. The operation fails if the target directory is not empty. |

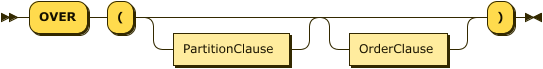

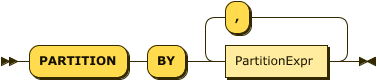

- OverClause

-

You supply an optional

OverClauseto specify output partitioning with aPartitionClauseand ordering with anOrderClause. This is similar to theOVERclause of a WINDOW statement.-

If you specify a

PartitionClause, Capella Analytics evaluates theOutput_Path_Expronce per logical data partition and refers to aliases if defined by anASsub-clause. -

If you do not specify a

PartitionClause, Capella Analytics evaluates theOutputPathExpronce for the wholeCOPY TOoutput dataset. That is, all of the files end up in the same directory.

-

You use the OrderClause to define output object order, either within each partition or for the whole dataset.

- AS (CSV Type Expression)

-

UNKNOWNrefers to the field being null or missing.

AS (

custid STRING NOT UNKNOWN,

orderno BIGINT,

order_date DATE,

gender STRING

)- WITH

-

The

WITHclause is optional. You use it to specify the following additional parameters.

| Name | Description | Schema |

|---|---|---|

max-objects-per-file (Optional) |

Maximum number of objects per file. Default: 10000. Minimum allowed value: 1000. |

String |

compression (Optional) |

Compression mechanism. Default: none. |

String enum (gz, gzip) |

header(Optional) |

Specifies if the header information must be written or not. Default: false |

Boolean |

escape |

Specifies the character used to escape the QUOTE and carriage return value. Default: same as the QUOTE value (the quote character is displayed twice if it appears in the data) |

String |

quote |

A character used to enclose strings. Default: " Value: NONE, ', " |

String |

null (Optional) |

Emits text in CSV output for NULL or MISSING values. Default: unquoted empty string |

String |

record-delimiter |

Character that separates each row/line (record). |

String |

delimiter |

Specifies the character that separates columns within each row (line) of the file. Default: comma in CSV format |

String |

empty-field-as-null |

Empty data values to be written by <null> string. Default: false |

Boolean |

force-quote |

A flag to enable or disable the quotes around the string non-null values. Default: Special characters within the string value are always enclosed within quotes. |

Boolean |

Supported Data Types

Following are the supported data types that can be used in CSV schema:

-

Boolean

-

String

-

Bigint

-

Double

-

Null

-

Temporal Data (Date / Time / DateTime)

Unsupported Data Types

Following are the unsupported data types that cannot be used in CSV schema:

-

Object

-

Array

-

Multiset

Schema Mismatches and Warnings

In case of a schema mismatch between the expected schema versus the actual record’s schema, a warning is issued and returned as part of the final result. The system skips writing records with mismatches and continues to the next record.

Examples of schema mismatches:

-

The provided schema has 4 fields, but the actual record has 5 fields.

-

The type for the same field is different in the provided schema vs the field type in the record schema.

-

The actual record has fields that are not present in the provided schema.