Introduction to Couchbase¶

A newer version of this software is available

You are viewing the documentation for an older version of this software. To find the documentation for the current version, visit the Couchbase documentation home page.

Couchbase Server is a NoSQL document database for interactive web applications. It has a flexible data model, is easily scalable, provides consistent high performance and is “always-on,” meaning it is can serve application data 24 hours, 7 days a week. Couchbase Server provides the following benefits:

-

Flexible Data Model

With Couchbase Server, JSON documents are used to represent application objects and the relationships between objects. This document model is flexible enough so that you can change application objects without having to migrate the database schema, or plan for significant application downtime. Even the same type of object in your application can have a different data structures. For instance, you can initially represent a user name as a single document field. You can later structure a user document so that the first name and last name are separate fields in the JSON document without any downtime, and without having to update all user documents in the system.

The other advantage to the flexible, document-based data model is that it is well suited to representing real-world items and how you want to represent them. JSON documents support nested structures, as well as field representing relationships between items which enable you to realistically represent objects in your application.

-

Easy Scalability

It is easy to scale your application with Couchbase Server, both within a cluster of servers and between clusters at different data centers. You can add additional instances of Couchbase Server to address additional users and growth in application data without any interruptions or changes in your application code. With one click of a button, you can rapidly grow your cluster of Couchbase Servers to handle additional workload and keep data evenly distributed.

Couchbase Server provides automatic sharding of data and rebalancing at runtime; this lets you resize your server cluster on demand. Cross-data center replication providing in Couchbase Server 2.1.0 enables you to move data closer to your user at other data centers.

-

Consistent High Performance

Couchbase Server is designed for massively concurrent data use and consistent high throughput. It provides consistent sub-millisecond response times which help ensure an enjoyable experience for users of your application. By providing consistent, high data throughput, Couchbase Server enables you to support more users with fewer servers. The server also automatically spreads workload across all servers to maintain consistent performance and reduce bottlenecks at any given server in a cluster.

-

“Always Online”

Couchbase Server provides consistent sub-millisecond response times which help ensure an enjoyable experience for users of your application. By providing consistent, high data throughput, Couchbase Server enables you to support more users with fewer servers. The server also automatically spreads workload across all servers to maintain consistent performance and reduce bottlenecks at any given server in a cluster.

Features such as cross-data center replication and auto-failover help ensure availability of data during server or datacenter failure.

All of these features of Couchbase Server enable development of web applications where low–latency and high throughput are required by end users. Web applications can quickly access the right information within a Couchbase cluster and developers can rapidly scale up their web applications by adding servers.

Understanding Couchbase Concepts¶

Before you develop applications on the Couchbase Server, you will want to understand key concepts and components that are related to application development on Couchbase Server. For more information about underlying functions of Couchbase Server, data storage, and cluster management, see the Couchbase Server Manual.

Couchbase as Document Store¶

The primary unit of data storage in Couchbase Server is a JSON document, which is a data structure capable of holding arrays and other complex information. JSON documents are information-rich, flexible structures that enable you to model objects as individual documents. By using JSON documents to model your data, you can construct your application data as individual documents which would otherwise require rigidly-defined relational database tables. This provides storage for your web application which is well suited to serialized objects and the programming languages that use them.

Note

Binary objects, such as integers and strings, can also be stored.

Because application objects are modeled as documents, schema migrations do not need to be performed. The documents and the fields they store indicate the relationships between application objects; therefore, to update the structure of objects you store, change the document structure that is written to Couchbase Server.

When Couchbase Server is used as a store for JSON documents, the records can be indexed and queried. Couchbase Server provides a JavaScript-based query engine to find records based on field values. For more information, see Finding Data with Views.

For more information about working with JSON documents and Couchbase, see Modeling Documents.

Data Buckets¶

Your web application stores data in a Couchbase cluster using buckets. Buckets are isolated, virtual containers which logically group records within a cluster; they are the functional equivalent to a database. Buckets can be accessed by multiple client applications across a cluster. They provide a secure mechanism for organizing, managing and analyzing data storage. As an application developer you will most likely create buckets for your development and production environment.

For more information about data buckets in Couchbase Server and how to create them, see About Data Buckets and Data storage in the Couchbase Server Manual.

Keys and Metadata¶

All information that you store in Couchbase Server are documents with keys. Keys are unique identifiers for a document, and values are either JSON documents or if you choose the data you want to store can be byte stream, data types, or other forms of serialized objects.

Keys are also known as document IDs and serve the same function as a SQL primary key. A key in Couchbase Server can be any string, including strings with separators and identifiers, such as ‘person_93679.’ A key is unique.

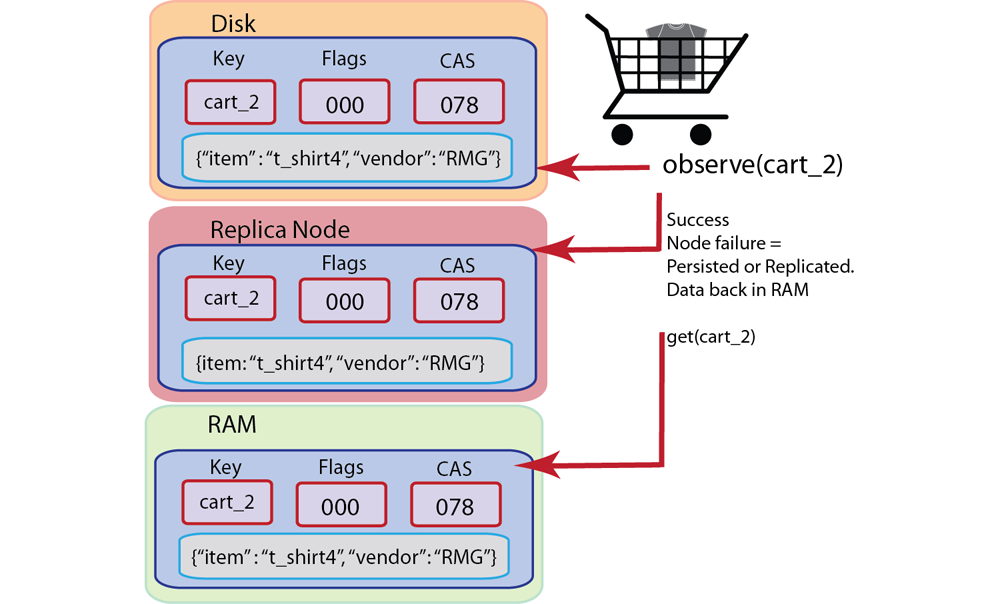

By default, all documents contain three types of metadata which are provided by the Couchbase Server. This information is stored with the document and is used to change how the document is handled:

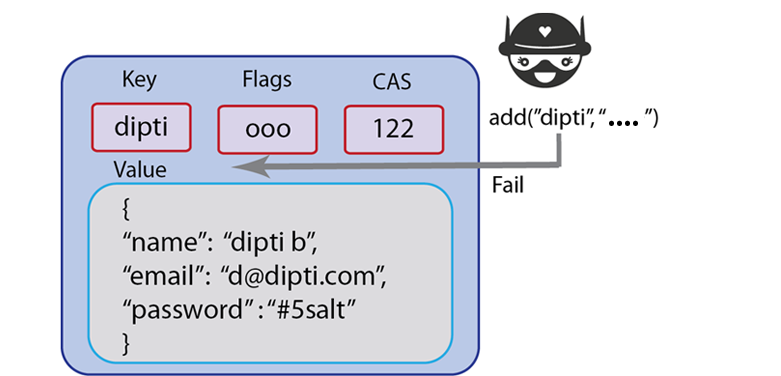

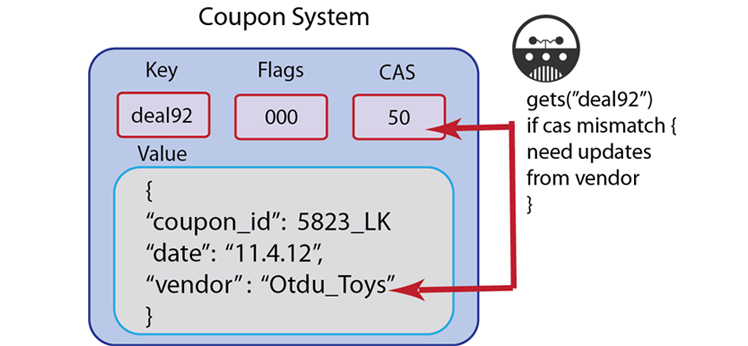

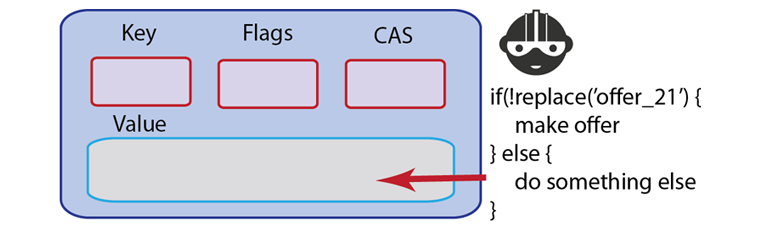

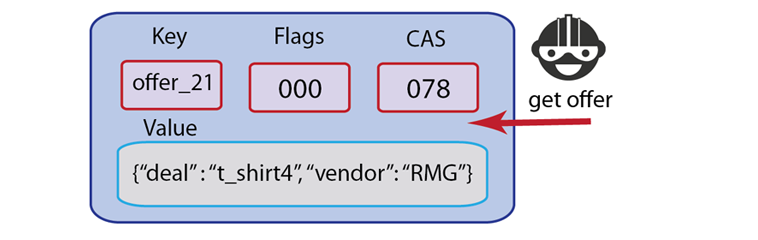

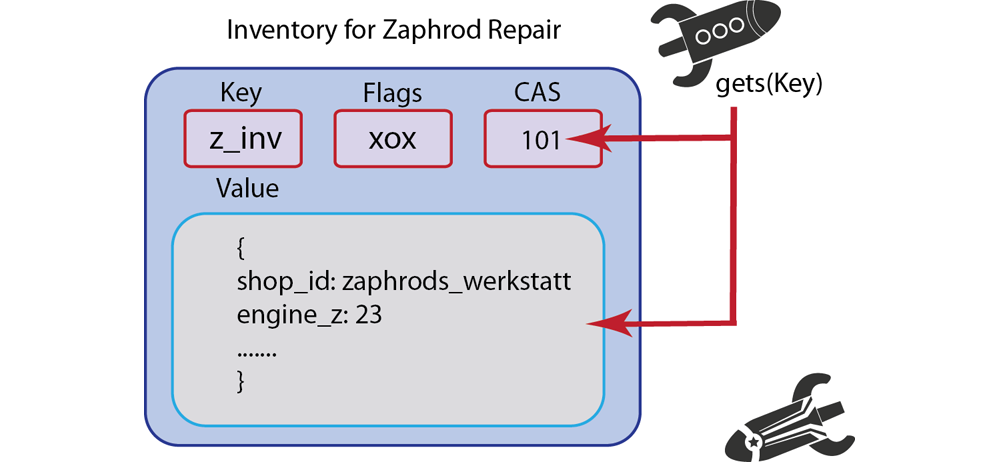

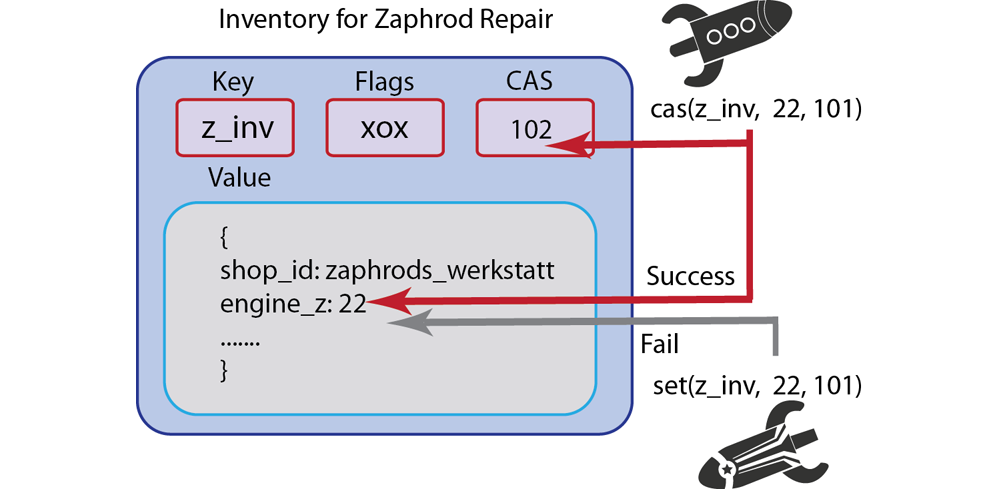

Cas Value —Also called cas token or cas ID ; this is a unique identifier associated with a document, and verified by the Couchbase Server before a document is deleted or changed. This provides a form of basic optimistic concurrency; when Couchbase Server checks a CAS value before changing data, it effectively prevents data loss without having to lock records. Couchbase Server will prevent a document from being altered by an operation if another process alters the document and its CAS value, in the meantime.

-

Time to Live (ttl) — This is an expiration for a document typically specified in seconds. By default, any document created in Couchbase Server that does not have a given ttl will have an indefinite life span and will remain in Couchbase Server unless an explicit delete call from a client removes it. The Couchbase Server will delete values during regular maintenance if the ttl for an item has expired.

Note

The expiration value deletes information from the entire database. It has no effect on when the information is removed from the RAM caching layer.

- Flags —These are SDK- specific flags which are used to provides a variety of options during storage, retrieval, update, and removal of documents. Typically flags are optional metadata used by a Couchbase client library to perform additional processing of a document. An example of flags include the ability to specify that a document be formatted a specific way before it is stored.

Couchbase SDKs¶

Couchbase SDKs, sometimes also referred to as client libraries, are the language-specific SDKs provided by Couchbase and third-party providers that are installed on your web application server. A Couchbase SDK is responsible for communicating with the Couchbase Server and provides language-specific interfaces your web application can use to perform database operations.

All Couchbase SDKs automatically read and write data to the right node in a cluster. If database topology changes, the SDK responds automatically and correctly distributes read/write requests to the right cluster nodes. Similarly, if your cluster experiences server failure, SDKs will automatically direct requests to still-functioning nodes. SDKs are able to determine the locations of information, the status of nodes, and the status of the cluster using a REST API for administration. For more information about the REST API for Administration, see the Couchbase Server REST API.

The following diagram shows a single web application server, the Couchbase SDK, and a Couchbase Server cluster. In real deployments, multiple web application servers can communicate via a Couchbase SDK to a cluster.

Nodes and Clusters¶

You deliver your application on several grouped servers, also known as a cluster. Each cluster consists of multiple nodes :

Couchbase Server or Node: A node is a single instance of a Couchbase Server running on a physical or virtual machine, or other environment.

Cluster: This is a collection of one or more nodes. All nodes in a cluster are identical in function, interfaces, components and systems. Couchbase Server manages data across nodes in a cluster. When you increase the size of a cluster, the cluster scales linearly; that is, there is no hierarchy or parent/child relationships between multiple nodes in a cluster.

Nodes or clusters typically reside on a separate physical machine than your web server. Your Couchbase node/cluster will communicate with your web application via a Couchbase SDK, which we describe in detail in this guide. Your application logic does not need to handle information about nodes or clusters; the Couchbase SDKs are able to automatically communicate with the appropriate Couchbase cluster node.

Information about the Cluster¶

Your web application does not need to directly handle any information about where a document resides; Couchbase SDKs automatically retrieve updates from Couchbase Server about the location of items in a cluster. Multiple web application instances can access the same item at the same time using Couchbase SDKs.

How an SDK gets updates on cluster topology is a slightly more advanced topic and is mainly relevant for those developers who want to create their own Couchbase SDK. For instance developers who want to create a Couchbase SDK for a language not yet supported would be interested in this topic. For more information, see, Getting Cluster Topology.

Comparing Couchbase and Traditional RDMS¶

If you are an application developer with a background primarily in relational databases, Couchbase Server has some key characteristics and advantages that you should be familiar with. The following compares the different database systems:

Couchbase Server Traditional Relational Database (RDBMS) Rapidly scalable to millions of users. Scalable to thousands of users. Data can be structured, semi-structured, and unstructured. Data must be normalized. Built on modern reality of relatively inexpensive. plentiful memory. Built on assumption of scarce, expensive memory. Built for environments with high-speed data networking. Built at a time when networking still formative and slow. Data can be flexibly stored as JSON documents or binary data. No need to predefine data types. Data types must be predefined for columns. Does not require knowledge or use of SQL as query language. Requires SQL as query language. Highly optimized for retrieve and append operations; high-performance for data-intensive applications, such as serving pages on high-traffic websites; can handle a large number of documents and document read/writes. Significantly slower times for retrieving and committing data. Designed for occasional, smaller read/write transactions and infrequent larger batch transactions. Data stored as key-document pairs; well suited for applications which handle rapidly growing lists of elements. Data stored in tables with fixed relations between tables. Does not require extensive data modeling; data structure is of lesser significance during development. Data modeling and establishing relational model for data structures required during application development. Asynchronous operations and optimistic concurrency enable applications designed for high throughput. Strict enforcement of data integrity and normalization, with the tradeoff of lower performance and slower response times. Before you develop your application and model application data, you should consider the issues faced when you use a traditional RDBMS. Couchbase Server is well suited to handle these issues:

Stores many serialized objects,

Stores dissimilar objects that do not fit a single schema,

Scales out from thousands to millions of users rapidly,

Performs large volume reads/writes,

Supports schema and application data changes on running system.

If you need a system that provides a high level of scalability, flexibility in data structure, and high performance, a NoSQL solution such as Couchbase is well suited. If you want to handle multi-record transactions, have complex security needs, or need to perform rollback of operations, a traditional RDBMS may be the better alternative for your application. There may also be many cases in which you perform an analysis of your application needs and determine you use both a RDBMS and Couchbase Server for your data. For more detailed information about the topic, see our resource library, webinars and whitepapers on the topic at Couchbase, Why NoSQL, Why Now?

Support for Memcached Protocol¶

The Couchbase Server is completely compatible with the memcached protocol, which is a widely adopted protocol for storing information in high-performance, in-memory caches. This means than any existing memcached client libraries and applications using these libraries can be migrated to Couchbase Server with little or no modification.

There are numerous challenges faced by developers who currently use memcached with a traditional RDBMS which are resolved by a move to Couchbase Server. For instance, if you currently use a memcached layer for data service and a traditional RDBMS, your database could become overloaded and non-responsive when memcached nodes go down. With a Couchbase Server cluster, your information will be automatically replicated across the cluster, which provides a high availability of data, even during node failure.

For more information about Couchbase Server as a replacement for memcached and RDBMS systems, see Replacing a Memcached Tier with a Couchbase Cluster and the Couchbase SDKs.

Server Rebalancing¶

During a server rebalance, Couchbase Server automatically updates information about where data is located. During the rebalance, a Couchbase SDK can therefore still write to an active node in a cluster and the Couchbase Server will update information about the newly saved data location. Once the rebalance is complete, the Couchbase SDK automatically switches to the new topology. For more information, see the Couchbase Server Manual.

Server Failover¶

Couchbase SDKs can connect to any node in a cluster; at runtime SDKs also automatically receive information from Couchbase Server if any nodes are unavailable. If a node that is used by your application fails, the SDK is informed by Couchbase Server and mark that node as down and will also have information about alternate nodes that are still available. You use the Couchbase Admin tool to manually indicate a node has failed, or you can configure couchbase Server to use auto-failover. For more information, see the Couchbase Server Manual. During node failure, Couchbase SDKs receive errors trying to read or write any data that is on a failed node. Couchbase SDKs are still able to read and write to all other functioning nodes in the cluster. After the node failure has been detected and the node has been failed-over, SDKs are updated by the Couchbase Server and will resume functioning with the cluster and nodes as they normally would. In this way, Couchbase SDKs and the applications you build on them are able to cope with transient node failures and still conduct reads and writes.

For more information about node failover, see the Couchbase Server Manual.

Applications on Couchbase Server¶

If you look at successful Couchbase deployments, you will see there several patterns of use; these patterns tend to rely on Couchbase Server’s unique combination of 1) linear, horizontal scalability, 2) sustained low latency and high throughput performance, and 3) the extensibility of the system. This section highlights ways you might want to think about using the Couchbase Server for your application. For more detailed information, including case studies and whitepapers, see Couchbase NoSQL Use Cases.

Session Store

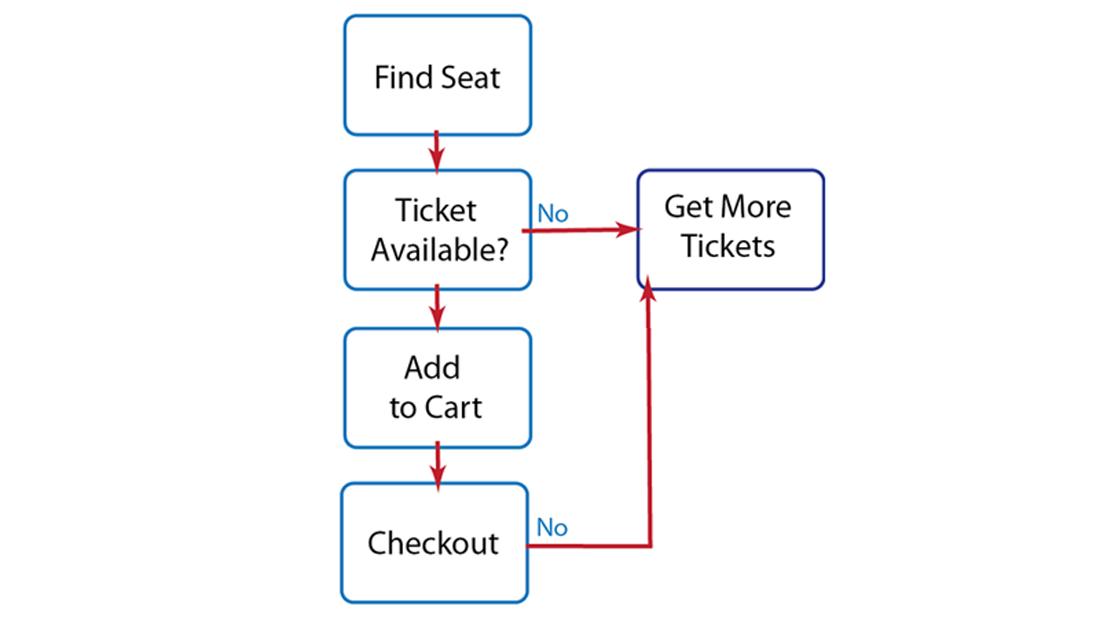

User sessions are easily stored and managed in Couchbase, for instance, by using the document ID naming scheme, “user:USERID”. With Couchbase Server, you can flag items for deletion after a certain amount of time, and therefore you have the option of having Couchbase automatically delete old sessions.

Optimistic concurrency operations can be used to ensure concurrent web requests from a single user do not lose data. For more information, see Check and Set (CAS).

Many web application frameworks such as Ruby on Rails and various PHP and Python web frameworks also provide pre-integrated support for storing session data using Memcached protocol. These are supported by default by Couchbase.

For more detailed information, including case studies and whitepapers, see Couchbase NoSQL Use Cases.

Social Gaming

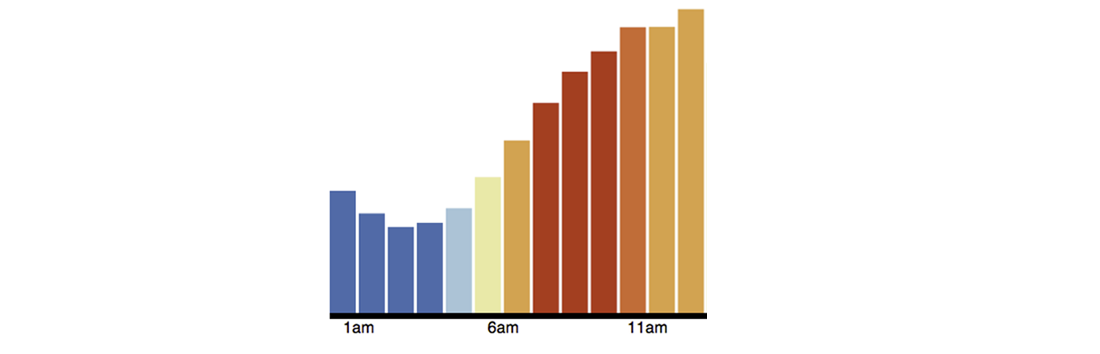

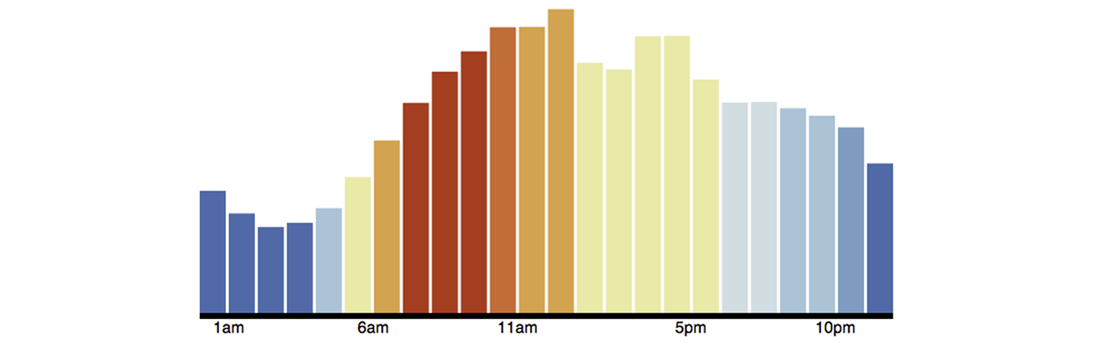

You can model and store game state, property state, time lines, conversations and chats with Couchbase Server. The asynchronous persistence algorithms of Couchbase were designed, built and deployed to support some of the highest scale social games.

In particular, the heavy dual read and write storage access patterns of social games, where nearly every user gesture mutates game state, is serviced by Couchbase by asynchronously queueing mutations for disk storage and also by collapsing mutations into the most recently queued mutation. For example, a player making 10 game state mutations in 5 seconds, such as planting 10 flowers in 5 seconds, is compressed by Couchbase automatically into just one queued disk mutation.

Couchbase Server can also force-save mutated item data to disk, even if an item is heavily changed, such as when the user keeps on clicking and clicking. Additionally, game state for that player remains instantly readable as long as it is in the memory working set of Couchbase.

For more detailed information, including case studies and whitepapers, see Couchbase NoSQL Use Cases.

Ad, Offer and Content Targeting

The same attributes which serve Couchbase in the gaming context also apply well for real-time ad and content targeting. For example, Couchbase provides a fast storage capability for counters. Counters are useful for tracking visits, associating users with various targeting profiles, tracking ad-offers, and for tracking ad-inventory.

Multi-retrieve operations in Couchbase allow ad applications to concurrently distribute data and then gather it against profiles, counters, or other items in order to allow for ad computation and serving decisions under strict response latency requirements.

For more detailed information, including case studies and whitepapers, see Couchbase NoSQL Use Cases.

Modeling Documents¶

This section describes core elements you will use to handle data in Couchbase Server. It describes the ways you can structure individual JSON documents for your application, how to store the documents from a Couchbase SDK, and describe different approaches you may take when you structure data in documents.

Couchbase Server is a document database ; unlike traditional relational databases, you store information in documents rather than table rows. Couchbase has a much more flexible data format; documents generally contain all the information about a data entity, including compound data rather than the data being normalized across tables.

A document is a JSON object consisting of a number of key-value pairs that you define. There is no schema in Couchbase; every JSON document can have its own individual set of keys, although you may probably adopt one or more informal schemas for your data.

With Couchbase Server, one of the benefits of using JSON documents is that you can index and query these records. This enables you to collect and retrieve information based on rules you specify about given fields; it also enables you to retrieve records without using the key for the record. For more information about indexing and querying using Couchbase SDK, see Finding Data with Views.

Comparing Document-Oriented and Relational Data¶

Note

Want to learn more about moving from relational to document-oriented databases? See Relational to NoSQL.

In a relational database system you must define a schema before adding records to a database. The schema is the structure described in a formal language supported by the database and provides a blueprint for the tables in a database and the relationships between tables of data. Within a table, you need to define constraints in terms of rows and named columns as well as the type of data that can be stored in each column.

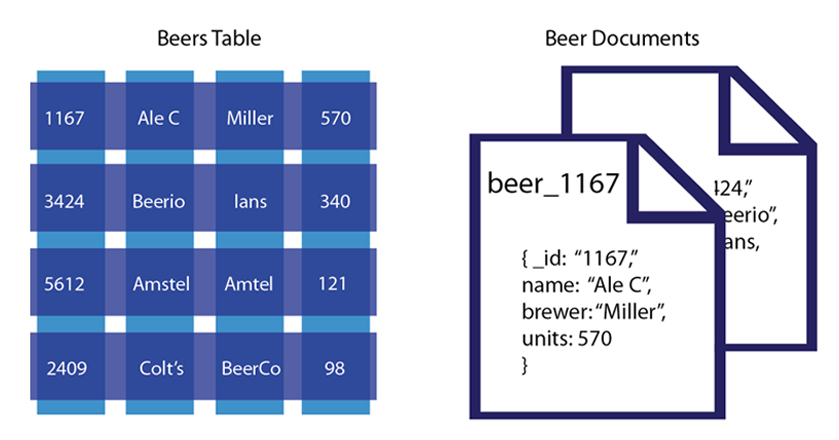

In contrast, a document-oriented database contains documents, which are records that describe the data in the document, as well as the actual data. Documents can be as complex as you choose; you can use nested data to provide additional sub-categories of information about your object. You can also use one or more document to represent a real-world object. The following compares a conventional table with document-based objects:

In this example we have a table that represents beers and their respective attributes: id, beer name, brewer, bottles available and so forth. As we see in this illustration, the relational model conforms to a schema with a specified number of fields which represent a specific purpose and data type. The equivalent document-based model has an individual document per beer; each document contains the same types of information for a specific beer.

In a document-oriented model, data objects are stored as documents; each document stores your data and enables you to update the data or delete it. Instead of columns with names and data types, we describe the data in the document, and provide the value for that description. If we wanted to add attributes to a beer in a relational model, we would need to modify the database schema to include the additional columns and their data types. In the case of document-based data, we would add additional key-value pairs into our documents to represent the new fields.

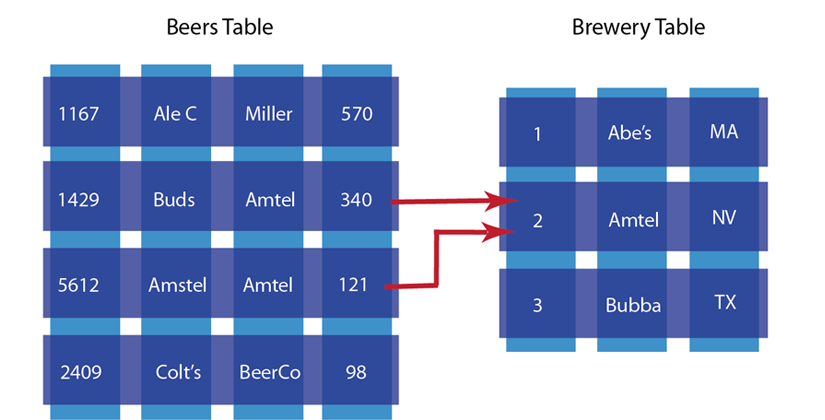

The other characteristic of relational database is data normalization ; this means you decompose data into smaller, related tables. The figure below illustrates this:

In the relational model, data is shared across multiple tables. The advantage to this model is that there is less duplicated data in the database. If we did not separate beers and brewers into different tables and had one beer table instead, we would have repeated information about breweries for each beer produced by that brewer.

The problem with this approach is that when you change information across tables, you need to lock those tables simultaneously to ensure information changes across the table consistently. Because you also spread information across a rigid structure, it makes it more difficult to change the structure during production, and it is also difficult to distribute the data across multiple servers.

In the document-oriented database, we could choose to have two different document structures: one for beers, and one for breweries. Instead of splitting your application objects into tables and rows, you would turn them into documents. By providing a reference in the beer document to a brewery document, you create a relationship between the two entities:

In this example we have two different beers from the Amstel brewery. We represent each beer as a separate document and reference the brewery in the

brewerfield. The document-oriented approach provides several upsides compared to the traditional RDBMS model. First, because information is stored in documents, updating a schema is a matter of updating the documents for that type of object. This can be done with no system downtime. Secondly, we can distribute the information across multiple servers with greater ease. Since records are contained within entire documents, it makes it easier to move, or replicate an entire object to another server.Using JSON Documents¶

JavaScript Object Notation (JSON) is a lightweight data-interchange format which is easy to read and change. JSON is language-independent although it uses similar constructs to JavaScript. JSON documents enable you to benefit from all new Couchbase features, such as indexing and querying; they also to provide a logical structure for more complex data and enable you to provide logical connections between different records.

The following are basic data types supported in JSON:

Numbers, including integer and floating point,

Strings, including all Unicode characters and backslash escape characters,

Boolean: true or false,

Arrays, enclosed in square brackets: [“one”, “two”, “three”]

Objects, consisting of key-value pairs, and also known as an associative array or hash. The key must be a string and the value can be any supported JSON data type.

For more information about creating valid JSON documents, please refer to JSON.

When you use JSON documents to represent your application data, you should think about the document as a logical container for information. This involves thinking about how data from your application fits into natural groups. It also requires thinking about the information you want to manage in your application. Doing data modeling for Couchbase Server is a similar process that you would do for traditional relational databases; there is however much more flexibility and you can change your mind later on your data structures. As a best practice, during your data/document design phase, you want to evaluate:

What are the things you want to manage in your applications, for instance, users, breweries, beers, and so forth.

What do you want to store about the things. For example, this could be alcohol percentage, aroma, location, etc.

How do the things in your application fit into natural groups.

For instance, if you are creating a beer application, you might want a particular document structure to represent a beer:

{ "name": "description": "category": "updated": }For each of the keys in this JSON document you would provide unique values to represent individual beers. If you want to provide more detailed information in your beer application about the actual breweries, you could create a JSON structure to represent a brewery:

{ "name": "address": "city": "state": "website": "description": }Performing data modeling for a document-based application is no different than the work you would need to do for a relational database. For the most part it can be much more flexible, it can provide a more realistic representation or your application data, and it also enables you to change your mind later about data structure. For more complex items in your application, one option is to use nested pairs to represent the information:

{ "name": "address": "city": "state": "website": "description": "geo": { "location": ["-105.07", "40.59"], "accuracy": "RANGE_INTERPOLATED" } "beers": [ _id4058, _id7628] }In this case we added a nested attribute for the geolocation of the brewery and for beers. Within the location, we provide an exact longitude and latitude, as well as level of accuracy for plotting it on a map. The level of nesting you provide is your decision; as long as a document is under the maximum storage size for Couchbase Server, you can provide any level of nesting that you can handle in your application.

In traditional relational database modeling, you would create tables that contain a subset of information for an item. For instance a brewery may contain types of beers which are stored in a separate table and referenced by the beer id. In the case of JSON documents, you use key-values pairs, or even nested key-value pairs.

Schemaless Data Modeling¶

When you use documents to represent data, a database schema is optional. The majority of your effort will be creating one or more documents that will represent application data. This document structure can evolve over time as your application grows and adds new features.

In Couchbase Server you do not need to perform data modeling and establish relationships between tables the way you would in a traditional relational database. Technically, every document you store with structure in Couchbase Server has its own implicit schema; the schema is represented in how you organize and nest information in your documents.

While you can choose any structure for your documents, the JSON model in particular will help you organize your information in a standard way, and enable you to take advantage of Couchbase Server’s ability to index and query. As a developer you benefit in several ways from this approach:

Extend the schema at runtime, or anytime. You can add new fields for a type of item anytime. Changes to your schema can be tracked by a version number, or by other fields as needed.

Document-based data models may better represent the information you want to store and the data structures you need in your application.

You design your application information in documents, rather than model your data for a database.

Converting application information into JSON is very simple; there are many options, and there are many libraries widely available for JSON conversion.

Minimization of one-to-many relationships through use of nested entities and therefore, reduction of joins.

When you use JSON documents with Couchbase, you also create an application that can benefit from all the new features of Couchbase 2.0, particularly indexing and querying. For more information, see Finding Data with Views.

There are several considerations to have in mind when you design your JSON document:

Whether you want to use a type field at the highest level of your JSON document in order to group and filter object types.

What particular keys, ids, prefixes or conventions you want to use for items, for instance ‘beer_My_Brew.’

When you want a document to expire, if at all, and what expiration would be best.

If want to use a document to access other documents. In other words, you can store keys that refer other documents in a JSON document and get the keys through this document. In the NoSQL database jargon, this is often known as using composite keys.

You can use a

typefield to group together sets of records. For example, the following JSON document contains atypefield with the valuebeerto indicate that the document represents a beer. A document that represents another kind of beverage would use a different value in the type field, such asaleorcider.{ "beer_id": "beer_Hoptimus_Prime", "type” : “beer”, "abv": 10.0, "category": "North American Ale", "name": "Hoptimus Prime", "style": “Double India Pale Ale” }Here is another type of document in our application which we use to represent breweries. As in the case of beers, we have a

typefield we can use now or later to group and categorize our beer producers:{ "brewery_id": ”brewery_Legacy_Brewing_Co", "type” : “brewery", "name" : "Legacy Brewing Co.", "address": "525 Canal Street Reading, Pennsylvania, 19601 United States", "updated": "2010-07-22 20:00:20" }What happens if we want to change the fields we store for a brewery? In this case we just add the fields to brewery documents. In this case we decide later that we want to include GPS location of the brewery:

{ "brewery_id": ”brewery_Legacy_Brewing_Co”, "type” : “brewery”, "name" : "Legacy Brewing Co.", "address": "525 Canal Street Reading, Pennsylvania, 19601 United States", "updated": "2010-07-22 20:00:20", "latitude": -75.928469, "longitude": 40.325725 }So in the case of document-based data, we extend the record by just adding the two new fields for

latitudeandlongitude. When we add other breweries after this one, we would include these two new fields. For older breweries we can update them with the new fields or provide programming logic that shows a default for older breweries. The best approach for adding new fields to a document is to perform a check-and-set operation on the document to change it; with this type of operation, Couchbase Server will send you a message that the data has already changed if someone has already changed the record. For more information about check-and-set methods with Couchbase, see Check and Set (CAS)To create relationships between items, we again use fields. In this example we create a logical connection between beers and breweries using the

breweryfield in our beer document which relates to theidfield in the brewery document. This is analogous to the idea of using a foreign key in traditional relational database design.This first document represents a beer, Hoptimus Prime:

{ "beer_id": "beer_Hoptimus_Prime", "type” : “beer”, "abv": 10.0, "brewery": ”brewery_Legacy_Brewing_Co", "category": "North American Ale", "name": "Hoptimus Prime", "style": “Double India Pale Ale” }This second document represents the brewery which brews Hoptimus Prime:

{ "brewery_id": ”brewery_Legacy_Brewing_Co”, "type” : “brewery”, "name" : "Legacy Brewing Co.", "address": "525 Canal Street Reading, Pennsylvania, 19601 United States", "updated": "2010-07-22 20:00:20", "latitude": -75.928469, "longitude": 40.325725 }In our beer document, the

breweryfield points to ‘brewery_Legacy_Brewery_Co’ which is the key for the document that represents the brewery. By using this model of referencing documents within a document, we create relationships between application objects.Document Design Considerations¶

When you work on document design, there are a few other considerations you should have in mind. This will help you determine whether you use one or more documents to represent something in your application. It will also help you determine how and when you provide references to show relationships between multiple documents. Consider:

Whether you will represent the items as separate objects.

Whether you want to access the objects together at runtime.

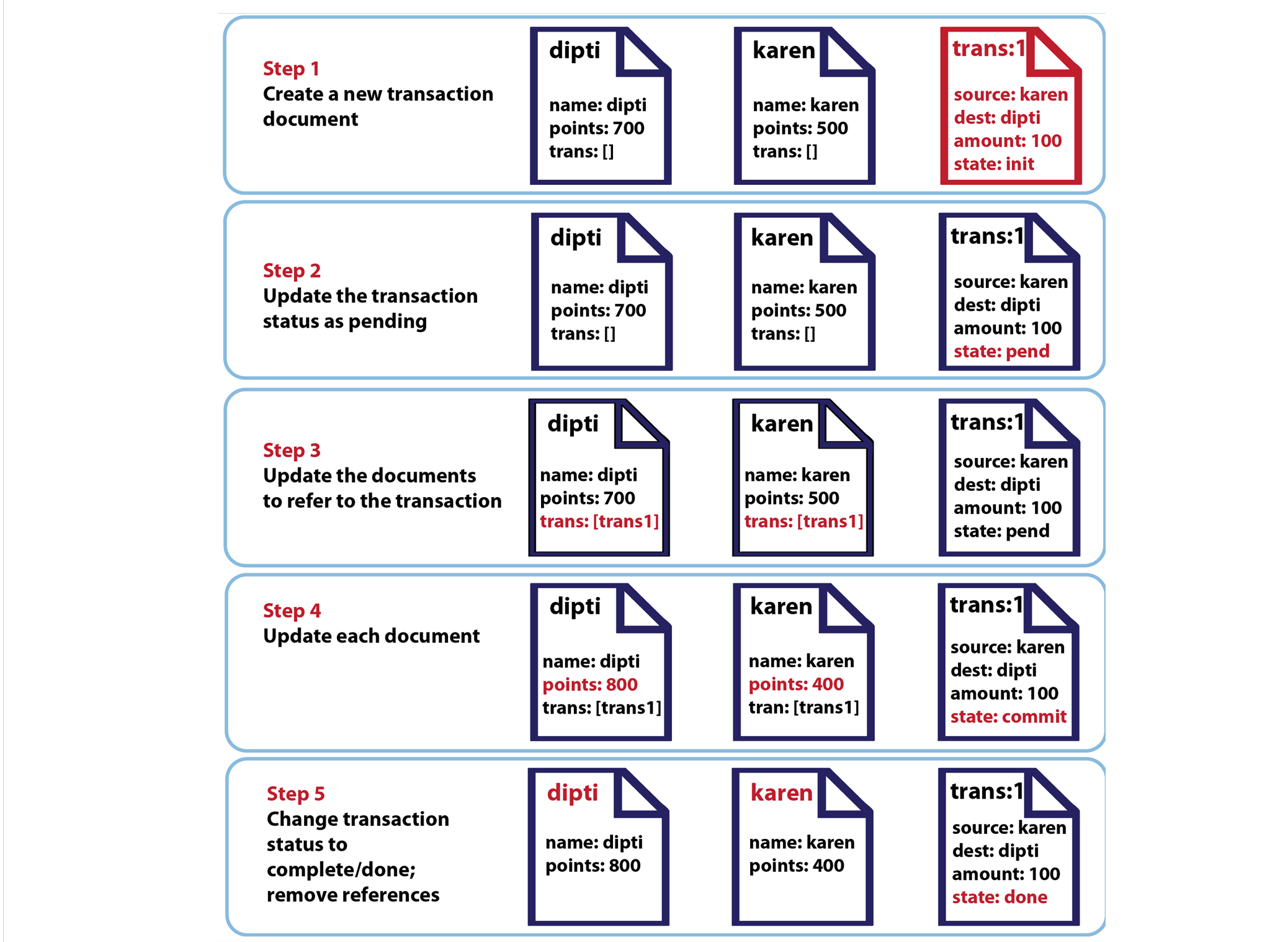

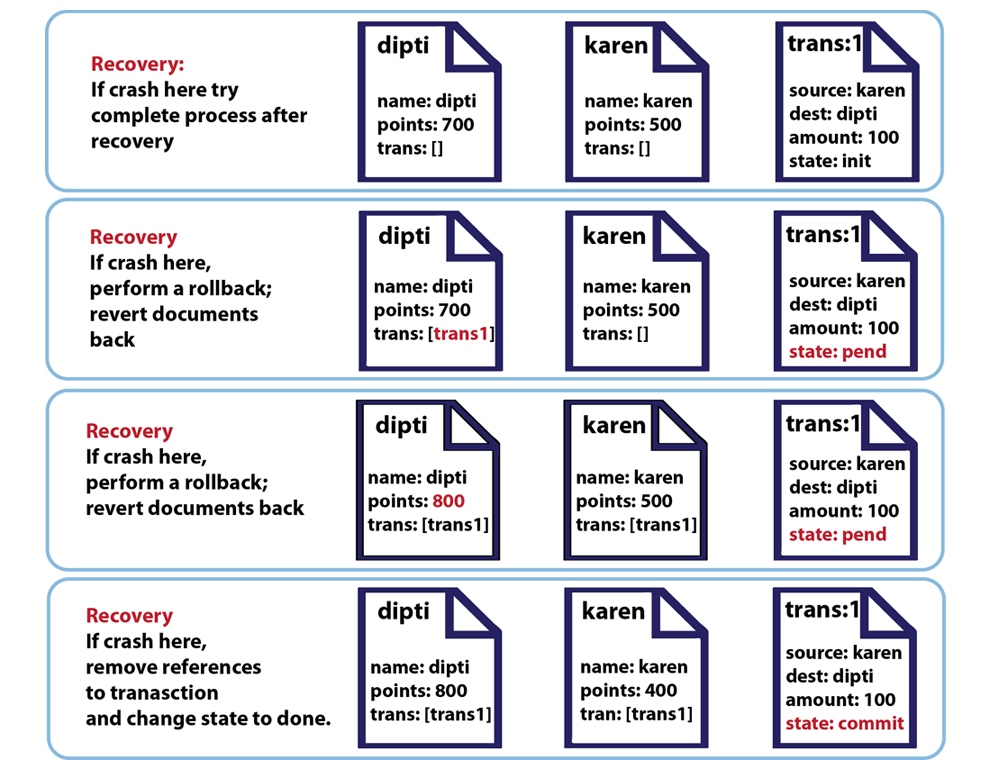

If you want some data to be atomic; that is, changes occur at once to this data, or the change fails and will not made.

Whether you will index and query data through views, which are stored functions you use to find, extract, sort, and perform calculations on documents in Couchbase Server. For more information see Finding Data with Views.

The following provides some guidelines on when you would prefer using one or more than one document to represent your data.

When you use one document to contain all related data you typically get these benefits:

Application data is de-normalized.

Can read/write related information in one operation.

Eliminate need for client-side joins.

If you put all information for a transaction in a single document, you can better guarantee atomicity since any changes will occur to a single document at once.

When you provide a single document to represent an entire entity and any related records, the document is known as an aggregate. You can also choose to use separate documents for different object types in your application. This approach is known as denormalization in NoSQL database terms. In this case you provide cross references between objects as we demonstrated earlier in the beer-brewery documents. You typically gain the following from separate documents:

Reduce data duplication.

May provide better application performance and scale by keeping document size smaller.

Application objects do not need to be in same document; separate documents may better reflect the objects as they are in the real world.

The following examples demonstrate the use of a single document compared to separate documents for a simple blog. In the blog application a user can create an entry with title and content. Other users can add comments to the post. In the first case, we have a single JSON document to represent a blog post, plus all the comments for the post:

{ "post_id": "dborkar_Hello_World", "author": "dborkar", "type": "post" "title": "Hello World", "format": "markdown", "body": "Hello from [Couchbase](http://couchbase.com).", "html": "<p>Hello from <a href=\"http: … "comments":[ ["format": "markdown", "body":"Awesome post!"], ["format”: "markdown", "body":"Like it." ] ] }The next JSON documents show the same blog post, however we have split the post into the actual entry document and a separate comment document. First is the core blog post document as JSON. Notice we have a reference to two comments under the

commentskey and two values in an array:{ "post_id": "dborkar_Hello_World", "author": "dborkar", "type": "post", "title": "Hello World", "format": "markdown", "body": "Hello from [Couchbase](http://couchbase.com).", "html": “<p>Hello from <a href="http: …"> "comments" : ["comment1_jchris_Hello_world", "comment2_kzeller_Hello_World"] }The next document contains the first actual comment that is associated with the post. It has the key

comment_idwith the first value of ‘comment1_dborkar_Hello_world’; this value serves as a reference back to the blog post it belongs to:{ "comment_id": "comment1_dborkar_Hello_World", "format": "markdown", "body": "Awesome post!" }The next example demonstrates our beer and breweries example as single and separate documents. If we wanted to use a single-document approach to represent a beer, it could look like this in JSON:

{ "beer_id": 10.0, "name": "Hoptimus Prime", "category": "North American Ale", "style": "Imperial or Double India Pale Ale", "brewery": "Legacy Brewing Co." : { "address1" : "Easy Peasy St.", "address2" : "Suite 4", "city" : "Baltimore", "state" : "Maryland", "zip" : "21215", "capacity" : 10000, }, "updated": [2010, 7, 22, 20, 0, 20], "available": true }In this case we provide information about the brewery as a subset of the beer. But consider the case where we have more than one beer from the brewery, in this case:

{ "beer_id": 12.0, "name": "Pleny the Hipster", "category": "Wheat Beer", "style": "Koelsch", "brewery": "Legacy Brewing Co." : { "address1" : "Easy Peasy St.", "address2" : "Suite 4", "city" : "Baltimore", "state" : "Maryland", "zip" : "21215", "capacity" : 10000, }, "updated": [2011, 8, 2, 20, 0, 20], "available": true }Here we are starting to develop duplicate information because we have the same brewery information in each beer document. In this case it makes sense to separate the brewery and beers as different documents and relate them through fields. The revised, separate beer document appears below. Notice we have added a new field to represent the brewery and provide the brewer id:

{ "beer_id": 10.0, "name": "Hoptimus Prime", "category": "North American Ale", "style": "Imperial or Double India Pale Ale", "brewery" : "leg_brew_10" "updated": [2010, 7, 22, 20, 0, 20], "available": true }And here is the associated brewery as a separate brewery document. In this case, we may simplify the document structure since it is separate from the beer data, and provide all the brewery information at the same level:

{ "brewery_id" : "leg_brew_10", "name": "Legacy Brewing Co.", "address1" : "Easy Peasy St.", "address2" : "Suite 4", "city" : "Baltimore", "state" : "Maryland", "zip" : "21215", "capacity" : 10000, }Modeling Documents for Retrieval¶

Once you grasp the concept that you can model real-world objects as documents and you understand the idea that you can create relationships between documents, you may wonder how

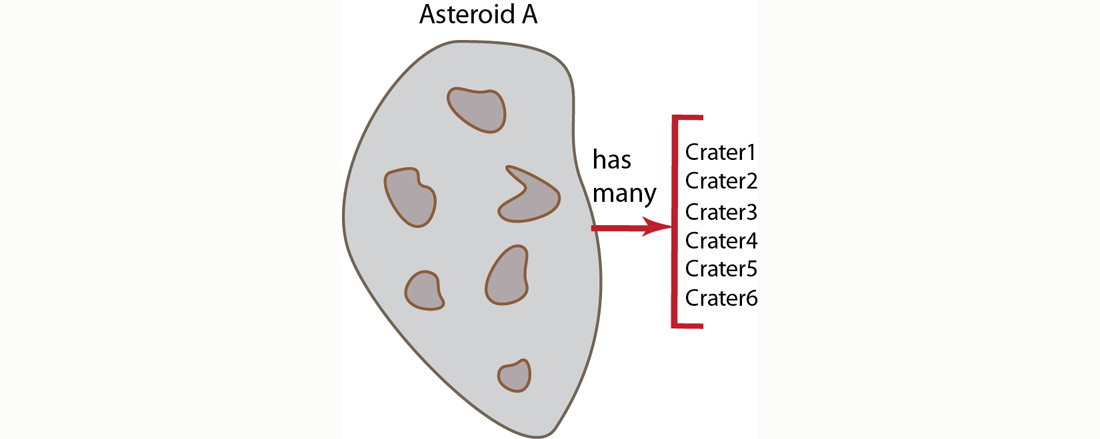

shouldyou go about representing the relationships? For instance, if you have an object that has a relationship of ownership/possession, do you always want to include fields in that object which reference all the objects it owns? In other words, if you follow this approach, when an asteroid has craters, the asteroid document should contain references to each crater document. In traditional relational database terminology, this is called a one-to-many relationship, and is often also called a has-many relationship. In an asteroid example we say the “asteroid has many craters” and conceptually it would appear as follows:

Imagine we are creating a virtual universe containing asteroids. And all asteroids can have zero or more craters; users in the environment can create more craters on the asteroids, and the environment can also create more craters on an asteroid. In this case, we have a relationship of ownership/possession by our asteroid since an asteroid contains the craters that are on it. If we choose to express ownership of the craters by the asteroid and say the asteroid has-many craters, we would provide an asteroid document as follows:

{ "a_id" : "asteroidA", "craters" : ["crater1", "crater2" .... ], .... }In the asteroid document, we reference the crater by crater ID in an array of craters. Each of the craters could be represented by the following JSON document:

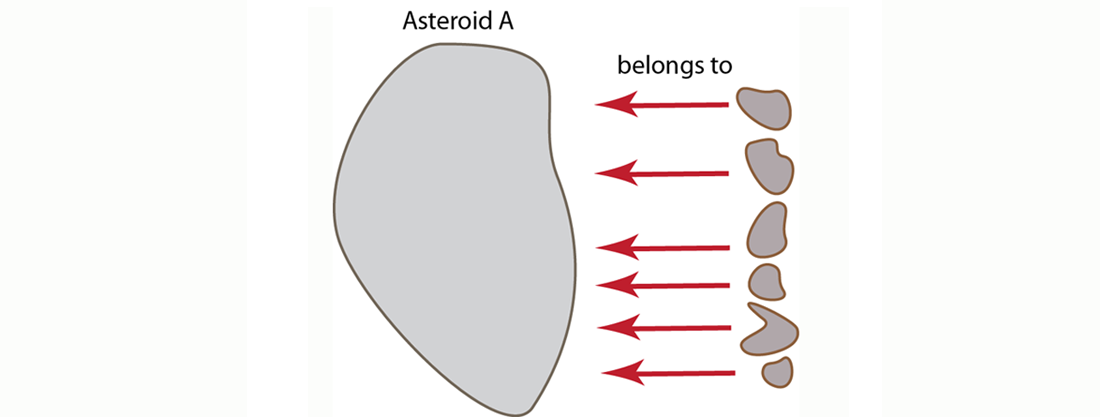

{ "crater_id" : "crater1", "location" : [ "37.42N", "-112.165W" ], "depth" : 80 .... }But because we are working with a flexible, document-centric design, we could instead put all the references to the object-that-owns in the objects that are owned. In the asteroid example, we would have references from each crater document to the asteroid document. In the relational world, we refer to this as a many-to-one relationship which is sometimes also called a belongs-to relationship. This alternate approach would appear as follows:

The respective asteroid and crater JSON documents for this approach would now appear as follows:

{ "a_id" : "asteroidA", .... }In the asteroid document we have a unique asteroid ID field,

a_idwhich we can reference from our crater documents. Each of the craters could be represented by the following JSON document:{ "crater_id" : "crater1", "on_asteroid" : "asteroidA" "location" : [ "37.42N", "-112.165W" ], "depth" : 80 .... }With this alternate approach, we provide any information about a relationship between asteroid and crater in each crater document. We provide a field

on_asteroidin each crater document with the value linking us to the asteroid document.So which of these two approaches is preferable for relating the two documents? There are two important considerations to keep in mind when you relate documents:

-

Issues of Contention : if you expect a lot of updates from different processes to occur to a document, creating several belongs-to relationships is more desirable.

In the case of our asteroid example, if we have all craters referenced in the asteroid document, we can expect a good amount of conflict and contention over the asteroid document. As users create more craters, or as the environment creates more craters, we can expect conflicts between the processes that are all trying to update crater information on a single asteroid document. We could use locks and check-and-sets to prevent conflict, but this would diminish read/write response time. So in this type of scenario, putting the link from craters to asteroid makes more sense to avoid contention.

-

Retrieving Information: how you relate documents or how you provide references between documents will influence the way you should retrieve data at a later point. Similarly, how you want to retrieve information will influence your decision on how to model your documents.

In this asteroid model, since we choose to reference from craters to asteroid to avoid contention, we need to use indexing and querying to find all craters associated with an asteroid. If we had chosen the first approach where the asteroid contains references to all craters, we could perform a multiple-retrieve with the list of craters to get the actual crater documents.

If we did not have this concern about contention in our asteroid example, it would be preferable to use the

has-manyapproach, where one document has references to multiple documents. This is because performing a multiple-retrieve on a list of documents is always faster than getting the same set of documents through indexing and querying. Therefore, as long as there is less concern about contention, we should use thehas-manymodel as the preferred approach. The advantages of this approach apply to all cases where our object is relatively static. For example, if you have a player document and you do not expect to change the player profile object that often you could store references to player abilities in the player document and describe the abilities in separate documents.For more information about retrieving information using a multiple retrieve, or by using indexing and querying, see Retrieving Multiple Keys and Finding Data with Views

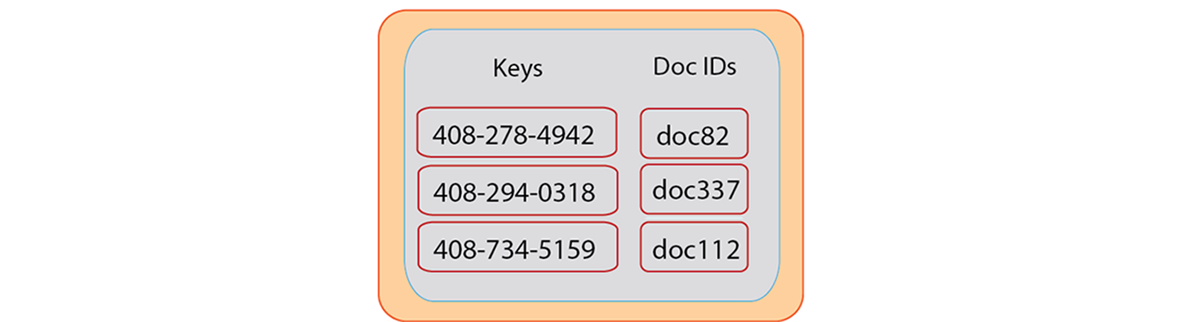

Using Reference Documents for Lookups¶

There are two approaches for finding information based on specific values. One approach is to perform index and querying with views in Couchbase. The other approach is to use supporting documents which contain the key for the main document. The latter approach may be preferable even with the ability to query and index in Couchbase because the document-based lookup can still provide better performance if you are the lookup frequently. In this scenario, you could separate documents to represent a main application object, and create additional documents to represent alternate values associated with the main the document.

When you store an object, you use these supporting documents which enable you to later lookup the object with different keys. For instance, if you store a user as a document, you can create additional helper documents so that you can find the user by email, Facebook ID, TwitterID, username, and other identifiers beside the original document key.

To use this approach, you should store your original document and use a predictable pattern as the key for that type of object. In this case we specifically create a unique identifier for each user so that we avoid any duplicate keys. Following the example of performing a user profile lookup, imagine we store all users in documents structured as follows:

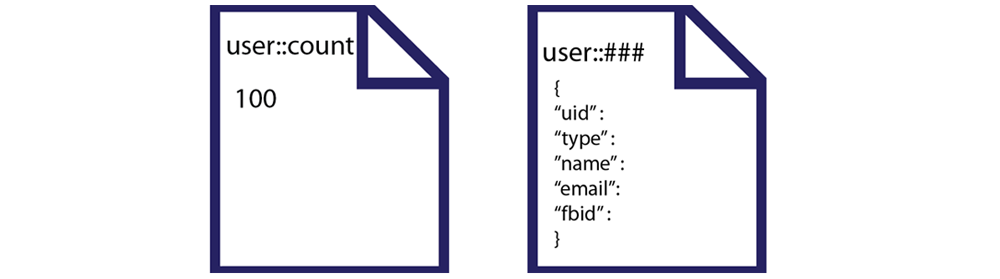

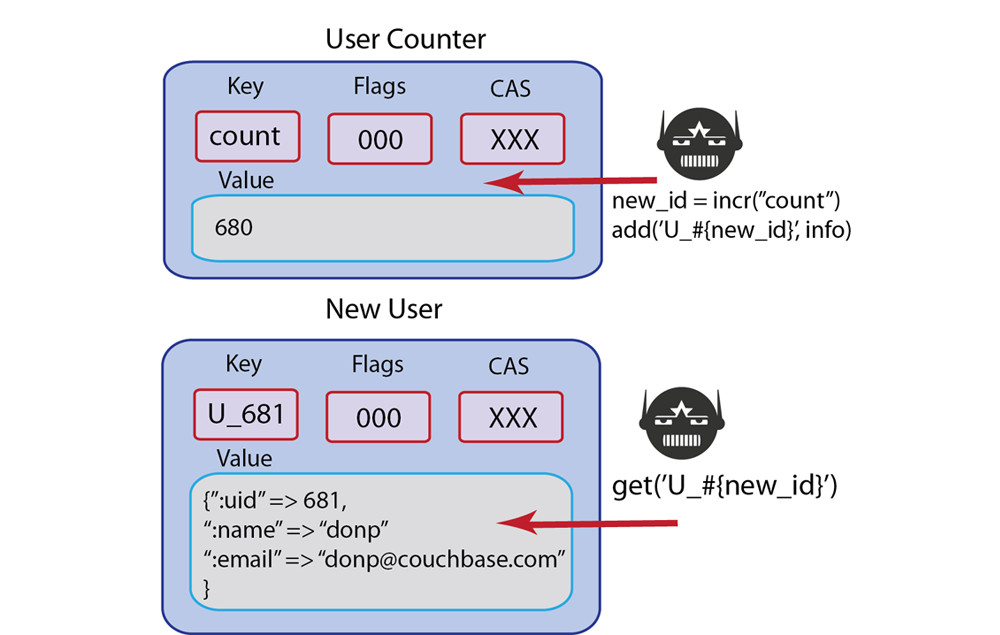

{ "uid" "type" "name" "email" "fbid" }To keep track of how many users are in our system, we create a counter,

user::countand increment it each time we add a new user. The key for each user document will be in the standard form ofuser::uuid. The records that we will have in our system would be structured as follows:

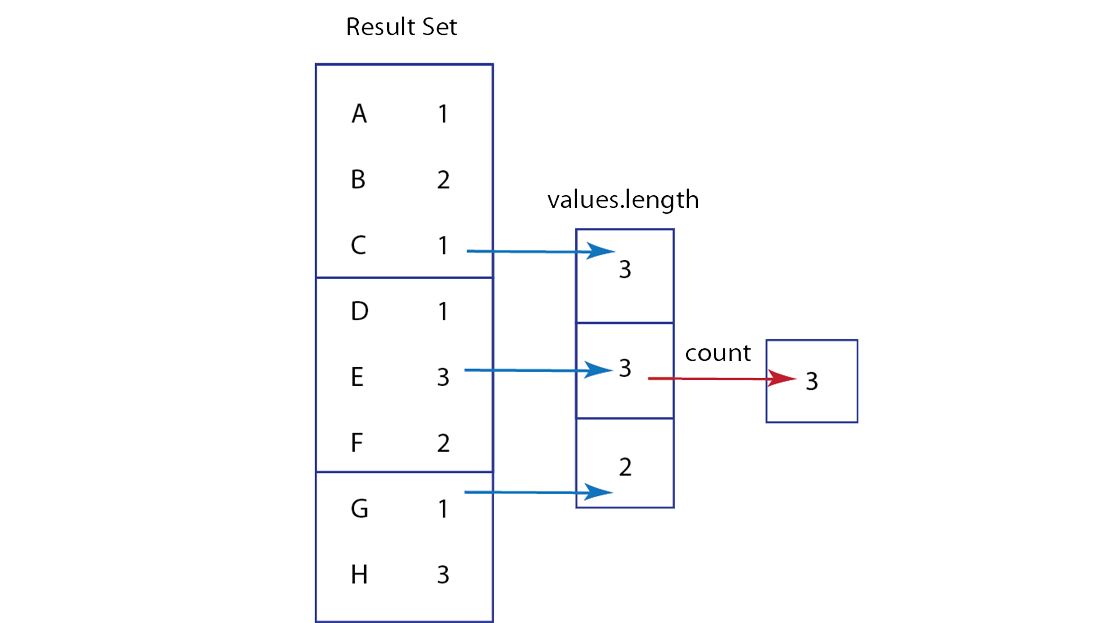

In this case we start with an initial user count of

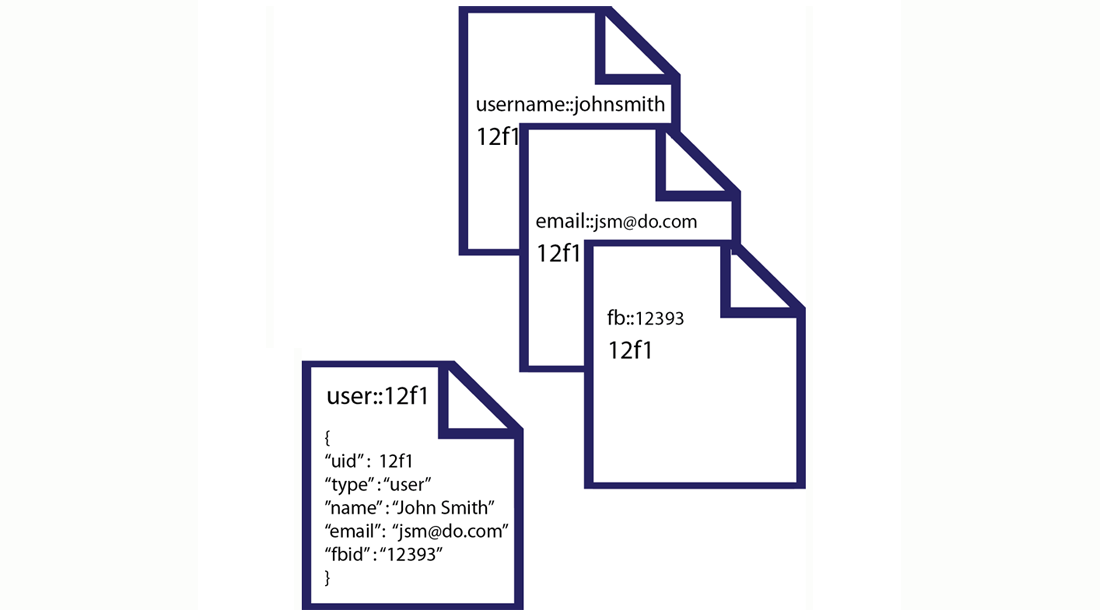

100. In the Ruby example that follows we increment the counter and then set a new user record with a new unique user id:# => setup default connection c = Couchbase.new # => initialize counter to 100 c.set("user::count", 100) # => increment counter c.incr("user::count") # => get unique uuid, new_id = 12f1 new_id = UUID.timestamp_create().to_s user_name = "John Smith" user_username = "johnsmith" user_email = "jsm@do.com" user_fb = "12393" # save User to Couchbase user_doc = c.add("user::#{new_id}", { :uid => new_id, :type => "user", :name => user_name, :email => user_email, :fbid => user_fb })Here we create a default connection to the server in a new Couchbase client instance. We then create a new record

user::counterwith the initial value of 100. We will increment this counter each time we add a new user. We then generated a unique user ID with a standard Ruby UUID gem. The next part of our code creates local variables which represent our user properties, such asJohn Smitha the user name. In the past part of this code we take the user data and perform anaddto store it to Couchbase. Now our document set is as follows:

Then we store additional supporting documents which will enable us to find the user with other keys. For each different type of lookup we create a separate record. For instance to enable lookup by email, we create a email record with the fixed prefix

email::for a key:# using same variables from above for the user's data # add reference document for username c.add("username::#{user_username.downcase}", new_id) # => save lookup document, with document key = "username::johnsmith" => 101 # add reference document for email c.add("email::#{user_email.downcase}", new_id) # => save lookup document, with document key = "email::jsmith@domain.com" => 101 # add reference document for Facebook ID c.add("fb::#{user_fb}", new_id) # => save lookup document, with document key = "fb::12393" => 101The additional ‘lookup’ documents enable us to store alternate keys for the user and relate those keys to the unique key for the user record

user::101.The first document we set is for a lookup by username, so we do anaddusing the keyusername::. After we create all of our lookup records, the documents in our system that relate to our user appear as follows:

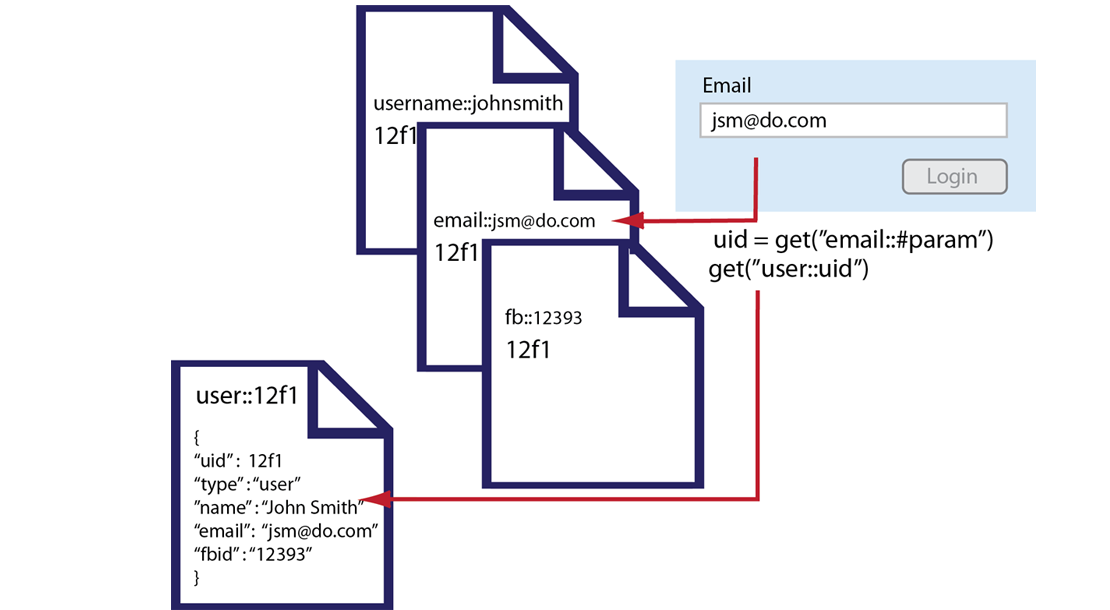

Once these supporting documents are stored, we can attempt a lookup using input from a form. This can be any type of web form content, such as an entry in a login, an item from a customer service call, or from an email support system. First we retrieve the web form parameter:

#retrieve input from a web form user_username = params["username"] # retrieve by user_id value using username provided in web form user_id = c.get("username::#{user_username.downcase}") # => get the user_id # => 12f1 user_hash = c.get("user::#{user_id}") # => get the primary User document (key = user::12f1) puts user_hash # => { "uid" => 101, "type" => "user", "name" => "John Smith", "email" => "jsmith@domain.com", "fbid" => "12393" } #get additional web form parameter, email user_email = params["email"] # retrieve by email user_id = c.get("email::#{user_email.downcase}") # => get the user_id # => 12f1 user_hash = c.get("user::#{user_id}") # => get the primary User document (key = user::12f1) #get facebook ID user_fb = auth.uid # retrieve by Facebook ID user_id = c.get("fb::#{user_fb}") # => get the user_id # => 12f1 user_hash = c.get("user::#{user_id}") # => get the primary User document (key = user::12f1)The first part of our code stores the

usernamefrom a web form to variable we can later use. We pass the lowercase version of the form input to agetto find the unique user id 12f1. With that unique user id, we perform agetwith the key consisting ofuser::12f1to get the entire user record. So the supporting documents enable you to store a reference to a primary document under a different key. By using a standard key pattern, such as prefix ofemail::you can get to the primary user document with an email. By using this pattern you can lookup an object based on many different properties. The following illustrates the sequence of operations you can perform, and the documents used when you do an email-based lookup:

The other use case for this pattern is to create categories for objects. For instance, if you have a beer, keyed with the id

beer::#{sku}, you can create a product category and reference products belonging to that category with they keycategory::ales::count. For this category key, you would provide a unique count and the category name, such as ales. Then you would add products to the content that references the SKU for your beers. For instance, the key-value pair, could look like this:{ "product" : "#{sku}" }When you perform a lookup, you could also do a

multi-geton all items that are keyedcategory::ales. This way you can retrieve all primary records for ales. For more information about multi-get, see Retrieving Multiple KeysSample Storage Documents¶

The following are some sample JSON documents which demonstrate some different types of application data which can be stored as JSON in Couchbase Server.

Here is an example of a message document:

{ "from": "user_512", "to": "user_768", "text": "Hey, that Beer you recommended is pretty fab, thx!" "sent_timestamp":476560 }The next example is a user profile document. Notice in this case, we have two versions of a user profile; in order to extend the number of attributes for a user, you would just add additional string-values to represent the new fields:

{ "user_id": 512, "name": "Bob Likington", "email": "bob.like@gmail.com", "sign_up_timestamp": 1224612317, "last_login_timestamp": 1245613101 } { "user_id": 768, "name": "Simon Neal", "email": "sneal@gmail.com", "sign_up_timestamp": 1225554317, "last_login_timestamp": 1234166701, "country": "Scotland", "pro_account" true, "friends": [512, 666, 742, 1111] }In this case we add county, account type, and friends as additional fields to our user profile. To extend our application with new user attributes, we simply start storing additional fields at the document level. Unlike traditional relational databases, there is no need for us to have server downtime, or database migration to a new schema.

To add new data fields, we simply start writing the additional JSON values for that particular transaction. You would also update your application to provide a default value for documents that do not yet have these fields.

This last example provides a sample JSON document to store information about a photo:

{ "photo_id": "ccbcdeadbeefacee", "size": { "w": 500, "h": 320, "unit": "px" }, "exposure: "1/1082", "aperture": "f/2.4", "flash": false, "camera": { "name": "iPhone 4S", "manufacturer": "Apple", } "user_id": 512, "timestamp": [2011, 12, 13, 16, 31, 07] }As we did in the brewery document earlier in this chapter, we nest a set of attributes for the photo size and camera by using JSON syntax.

Accessing Data with Couchbase SDKs¶

Couchbase Server communicates with a web application in two ways: 1) through APIs in your chosen SDK which are supported by Couchbase Server, or 2) through a RESTful interface which you can use to manage an entire cluster.

Couchbase SDKs enable you to perform read/write operations to Couchbase Server and will be responsible for getting updates on cluster topology from Couchbase Server. The SDKs provide an abstraction level so that you do not need to be concerned about handling the logic of cluster rebalance and failover in your application. All SDKs are able to automatically get updated server and cluster information so that your web application continues to function during a Couchbase Server rebalance or failover.

Couchbase SDKs are written in several programming languages so that you can interact with Couchbase Server using the same language you use for your web application. The SDKs available from Couchbase are at: Couchbase SDK Downloads

You use a Couchbase SDK for storage, retrieval, update, and removal of application data from the database. As of Couchbase 2.0 you can also use the SDKs to index and query information and also determine if entries are available to index/query. Couchbase SDK read/write methods are all built upon the binary version of the memcached protocol. When you perform an operation an SDK converts it into a binary memcached command which is then sent to Couchbase Server. For more information about memcached protocol, see memcached protocol.

Couchbase REST API can be used to get information about a cluster or make changes to a entire cluster. At an underlying level, Couchbase SDKs use the REST API to perform indexing and querying; for developers who want to write their own SDK, the REST API can also be used to provide cluster updates to a SDK. There are also some helpful bucket-level operations that you will use as an application developer, such as creating a new data bucket, and setting authentication for the bucket. With the REST API, you can also gather statistics from a cluster, define and make changes to buckets, and add or remove new nodes to the cluster. For more information about helpful bucket-level operations you will can use as you develop an application in Couchbase Server Manual REST API.

Couchbase SDKs and SQL Commands¶

Couchbase SDKs support all of the four standard SQL commands used for reading and writing data. These functions in Couchbase have different method names, but they are the functional equivalents of the following SQL commands:

SQL Command Couchbase SDK Method INSERT, to create.setandaddSELECT, to retrieve/read data.get, multiple-retrieves, and get-and-touch (get and update expiration).UPDATE, to modify data.setwith a given key, oraddwith a new key, orreplacewith a key, orcas, also known as Check-and-Set. Used to update a value by providing the matching CAS value for the document. There are also methods for incrementing and decrementing numeric values, changing the expiration for a value, as well as pre-pending or appending to stored objects.DELETE, to remove data.delete, deletes information associated with a given key.Reading/Writing Data¶

In general, all Couchbase SDKs provide the same core set of methods to read, write and update documents in the Couchbase Server’s data stores. Common features across all SDKs include:

All operations are atomic

All operations require a key

No implicit locking, such as a row lock, occur during an operation

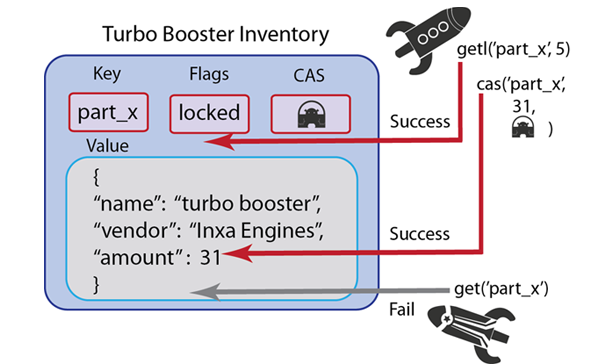

Several update operations require a matching CAS value in order to succeed. You provide the CAS value as a parameter to a method and if the value matches the current CAS value stored with a document, it will be updated.

The following describes major data store operations you can perform in your web application using the Couchbase Client. Language-specific variations in the SDK’s do exist; please consult your chosen SDK’s Language Reference for details specific to the SDK at Develop with Couchbase.

Note: If you use the text-based memcache protocol to communicate with Couchbase Server, you will need to use moxi as a message proxy. For more information, see Moxi Manual 1.8.

-

Store Operations

Add: Stores a given document if it does not yet exist in the data store.

Set: Stores a given document, overwriting an existing version if it exists.

-

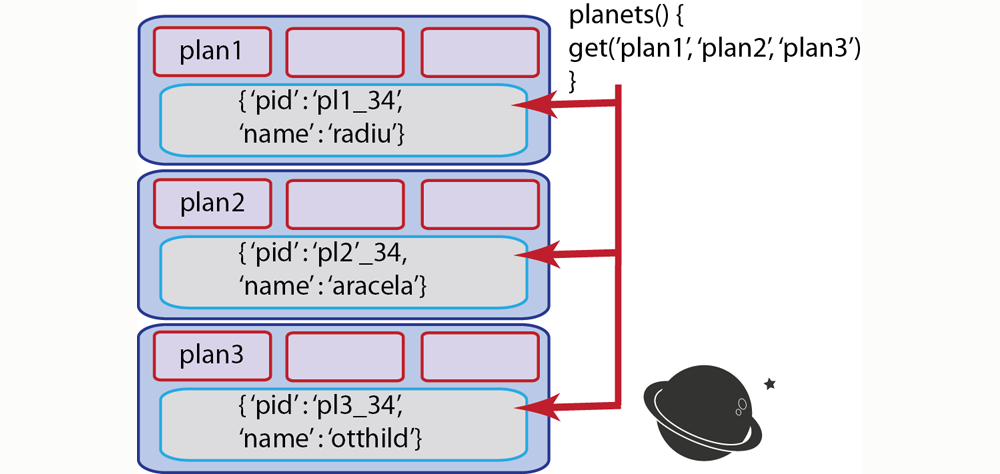

Retrieve Operations

Get: Retrieve/Fetch a specified document.

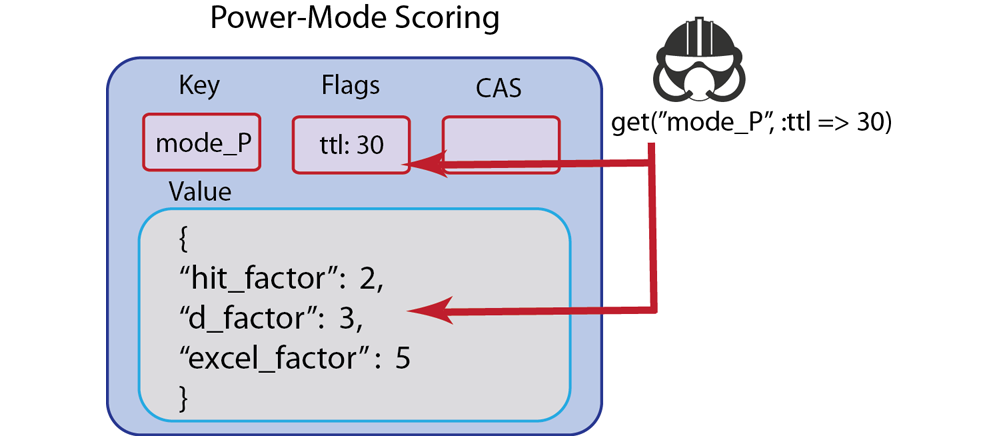

Get and touch: Fetch a specified document and update the document expiration.

Multi-retrieves: Fetch multiple documents in a single server request.

-

Update Operations

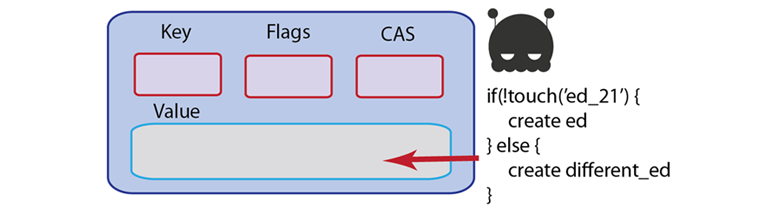

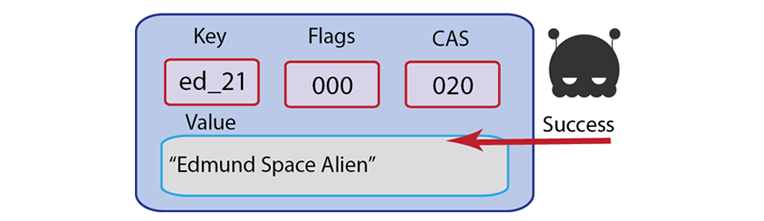

Touch: Update the Time to Live (TTL) for a given document.

Replace: Replace a given document, if it exists, otherwise do not commit any data to the store.

Check and Set (CAS): Replace a current document with a given document if it matches a given CAS value.

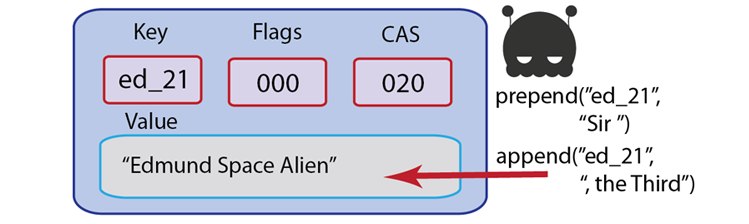

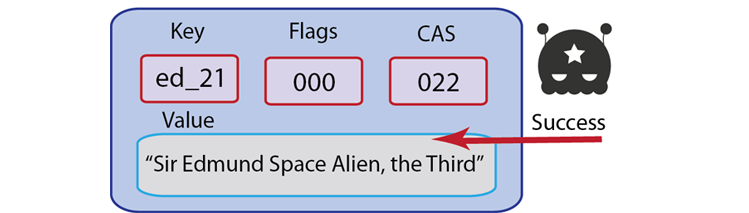

Append/Prepend: Add data at the start, or at the end of a specified document.

Delete: Remove a specified document from the store.

Flush: Delete an entire data bucket, including cached and persisted data.

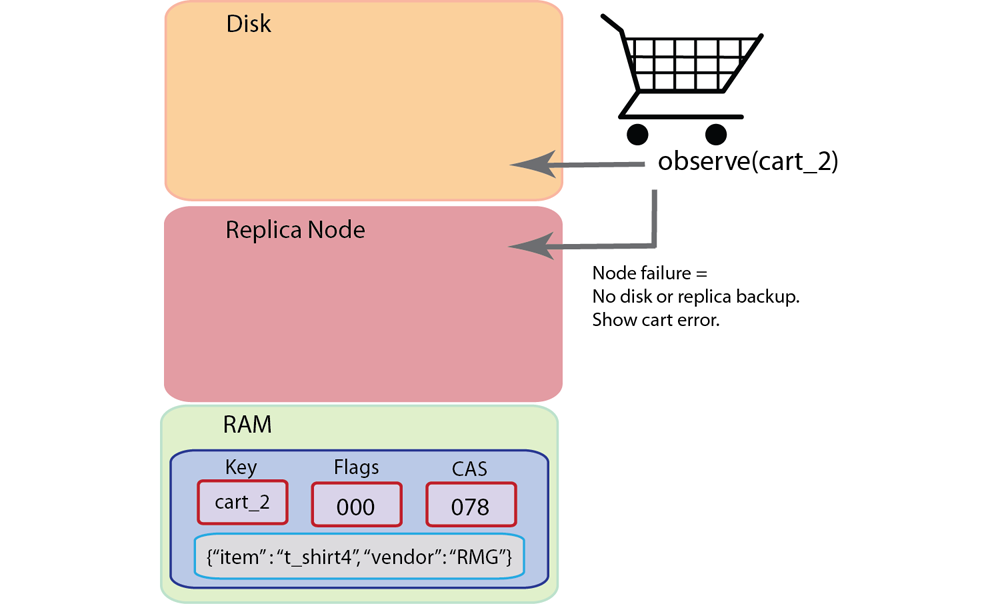

‘Observe’: Determine whether a stored document is persisted onto disk, and is therefore also available via indexing and querying.

About Document Expiration¶

Time to Live, also known as TTL, is the time until a document expires in Couchbase Server. By default, all documents will have a TTL of 0, which indicates the document will be kept indefinitely. Typically when you add, set, or replace information, you would establish a custom TTL by passing it as a parameter to your method call. As part of normal maintenance operations, Couchbase Server will periodically remove all items with expirations that have passed.

Here is how to specify a TTL:

-

Values less than 30 days: if you want an item to live for less than 30 days, you can provide a TTL in seconds, or as Unix epoch time. The maximum number of seconds you can specify are the seconds in a month, namely 30 x 24 x 60 x 60. Couchbase Server will remove the item the given number of seconds after it stores the item.

Be aware that even if you specify a TTL as a relative value such as seconds into the future, it is actually stored in Couchbase server as an absolute Unix timestamp. This means, for example, if you store an item with a two-day relative TTL, immediately make a backup, and then restore from that backup three days later, the expiration will have passed and the data is no longer there.

Values over 30 days: if you want an item to live for more than 30 day you must provide a TTL in Unix epoch time; for instance, 1 095 379 198 indicates the seconds since 1970.

Be aware that Couchbase Server does lazy expiration, that is, expired items are flagged as deleted rather than being immediately erased. Couchbase Server has a maintenance process, called expiry pager that will periodically look through all information and erase expired items. This maintenance process will run every 60 minutes, but it can be configured to run at a different interval. Couchbase Server will immediately remove an item flagged for deletion the next time the item is requested; the server will respond that the item does not exist to the requesting process.

Couchbase Server offers new functionality you can use to index and find documents and perform calculations on data, known as views. For views, you write functions in JavaScript that specify what data should be included in an index. When you want to retrieve information using views, it is called querying a view and the information Couchbase Server returns is called a result set.

The result set from a view will contain any items stored on disk that meet the requirements of your views function. Therefore information that has not yet been removed from disk may appear as part of a result set when you query a view.

Using Couchbase views, you can also perform reduce functions on data, which perform calculations or other aggregations of data. For instance if you want to count the instances of a type of object, you would use a reduce function. Once again, if an item is on disk, it will be included in any calculation performed by your reduce functions. Based on this behavior due to disk persistence, here are guidelines on handling expiration with views:

-

Detecting Expired Documents in Result Sets : If you are using views for indexing items from Couchbase Server, items that have not yet been removed as part of the expiry pager maintenance process will be part of a result set returned by querying the view. To exclude these items from a result set you should use query parameter

include_docset totrue. This parameter typically includes all JSON documents associated with the keys in a result set. For example, if you use the parameterinclude_docs=trueCouchbase Server will return a result set with an additional"doc"object which contains the JSON or binary data for that key:{"total_rows":2,"rows":[ {"id":"test","key":"test","value":null,"doc":{"meta":{"id":"test","rev":"4-0000003f04e86b040000000000000000","expiration":0,"flags":0},"json":{"testkey":"testvalue"}}}, {"id":"test2","key":"test2","value":null,"doc":{"meta":{"id":"test2","rev":"3-0000004134bd596f50bce37d00000000","expiration":1354556285,"flags":0},"json":{"testkey":"testvalue"}}} ] }For expired documents if you set

include_doc=true, Couchbase Server will return a result set indicating the document does not exist anymore. Specifically, the key that had expired but had not yet been removed by the cleanup process will appear in the result set as a row where"doc":null:{"total_rows":2,"rows":[ {"id":"test","key":"test","value":null,"doc":{"meta":{"id":"test","rev":"4-0000003f04e86b040000000000000000","expiration":0,"flags":0},"json":{"testkey":"testvalue"}}}, {"id":"test2","key":"test2","value":null,"doc":null} ] } -

Reduces and Expired Documents : In some cases, you may want to perform a reduce function to perform aggregations and calculations on data in Couchbase Server. In this case, Couchbase Server takes pre-calculated values which are stored for an index and derives a final result. This also means that any expired items still on disk will be part of the reduction. This may not be an issue for your final result if the ratio of expired items is proportionately low compared to other items. For instance, if you have 10 expired scores still on disk for an average performed over 1 million players, there may be only a minimal level of difference in the final result. However, if you have 10 expired scores on disk for an average performed over 20 players, you would get very different result than the average you would expect.

In this case, you may want to run the expiry pager process more frequently to ensure that items that have expired are not included in calculations used in the reduce function. We recommend an interval of 10 minutes for the expiry pager on each node of a cluster. Note that this interval has a slight impact on node performance as it performs cleanup more frequently on the node.

For more information about setting intervals for the maintenance process, see the Couchbase Server Manual command line tool,

cbepctlassociated with specifying disk cleanup intervals and refer to the examples onexp_pager_stime. For more information about views and view query parameters, see Finding Data with Views.About Asynchronous Methods¶

All Couchbase SDKs provide data operations as synchronous methods. In the case of synchronous methods, your application will block and not continue executing until it receives a response from Couchbase Server. In most SDKs, notably Java, Ruby and PHP, there are data operations you can perform asynchronously; in this case your application can continue performing other, background operations until Couchbase Server responds. Asynchronous operations are particularly useful when your application accesses persisted data, or when you are performing bulk data stores and updates.

There are a few standard approaches in Couchbase SDKs for asynchronous operations: 1) performing the asynchronous method, then later explicitly retrieving any results returned by Couchbase server that are stored in run-time memory, 2) performing an asynchronous method and retrieving the results from memory in a callback, and/or 3) perform an event loop which waits for and dispatches events in the program.

The following briefly demonstrates the first approach, where we perform an asynchronous call and then later explicitly retrieve it from runtime memory with a second call. The sample is from the PHP SDK:

<?php $cb = new Couchbase(); $cb->set('int', 99); $cb->set('array', array(11, 12)); $cb->getDelayed(array('int', 'array'), true); //do something else var_dump($cb->fetchAll()); ?>In the first two lines we create a new Couchbase client instance which is connected to the default bucket. Then we set some sample variables named

intandarray. We perform an asynchronous request to retrieve the two keys. Using thefetchAllcall we can retrieve any results returned by Couchbase server which are now in runtime memory.This is only one example of the pattern of method calls used to perform an asynchronous operation. A few more examples will follow in this section, therefore we introduce the concept here. For more information, see Synchronous and Asynchronous Transactions

Storing Information¶

These operations are used for storing information into Couchbase Server and consist of

addandset. Both operations exist for all SDKs provided by Couchbase. For some languages, parameters, return values, and data types may differ. Unique behavior for these store methods that you should be aware of:Expiration: By default all documents you store using

setandaddwill not expire. Removal must be explicit, such as usingdelete. If you do set an expiration to the value 0, this will also indicate no expiration. For more information, see About Document ExpirationCAS ID/CAS Value: For every value that exists in Couchbase Server, the server will automatically add a unique Check and Set (CAS) value as a 64-bit integer with the item. You can use this value in your implementation to provide basic optimistic concurrency. For more information, see Retrieving Items with CAS Values

For existing keys,

setwill overwrite any existing value if a key already exists; in contrastaddwill fail and return an error. If you usereplaceit will fail if the key does not already exist.The following storage limits exist for each type of information that you provide as well as the metadata that Couchbase Server automatically adds to items:

Keys: Can be up to 250 Bytes. Couchbase Server keeps all keys in RAM and does not eject any keys to free up space.

Metadata: This is the information Couchbase Server automatically stores with your value, namely CAS value, expiration and flags. Metadata per document is 60 Bytes for Couchbase 2.0.1 and 54 for Couchbase 2.1.0. This is stored in RAM at all times, and cannot be ejected from RAM.

Values: You can store values up to 1 MB in memcached buckets and up to 20 MB in Couchbase buckets. Values can be any arbitrary binary data or it can be a JSON-encoded document.

Be aware of key and metadata size if you are handling millions of documents or more. Couchbase Server keeps all keys and metadata in RAM and does not remove them to create more space in RAM. One hundred million keys which are 70 Bytes each plus meta data at 54 Bytes each will require about 11.2 GB of RAM for a cluster. This figure does not include caching any values or replica copies of data, if you consider these factors, you would need over 23 GB. For more information, see Sizing Guidelines in the Couchbase Server Manual.

Set¶

setwill write information to the data store regardless of whether the key for the value already exists or not. The method is destructive; if the key exists, it will overwrite any existing value. Typically you want to usesetin cases where you do not care whether or not you overwrite an existing value, nor do you care if the key already exists or not. This method is similar to anINSERTstatement in SQL.For instance, if you have a player location document in a game, you might not care whether you overwrite the location with a new value; it is however important that you quickly create a location document if it does not already exist. In the case of this type of application logic, you might not want to waste any code to check if a player location exists; performing rapid read/writes of the player location and creating the initial score document may be more important than performing any checks in your application logic. In this case, using

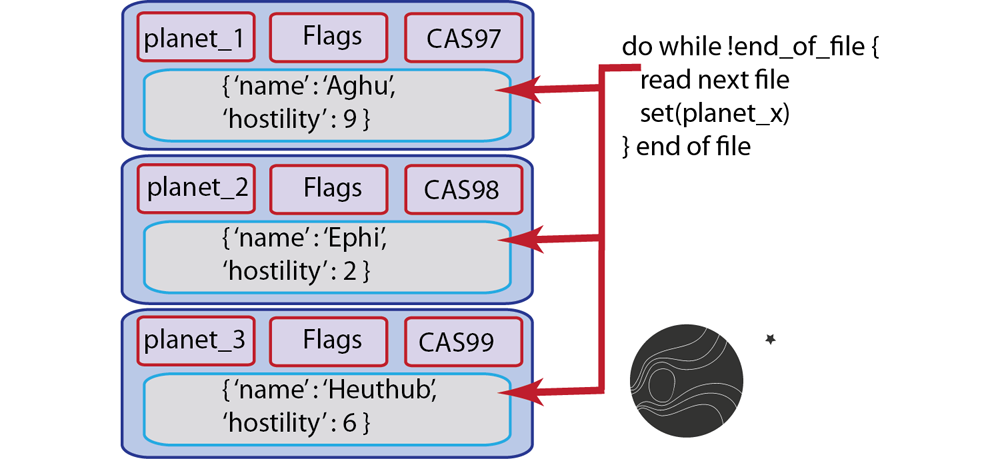

setwould be suitable.Another scenario is when you populate a database with initial values. This can be a production or development database. In this case, you are creating all the initial values for an entire set of keys. Since you are starting out with an empty database, and have no risk of overwriting useful data, you would use

sethere as well. For instance, if you are populating your new test database with documents that represent different planets, you could follow this approach:

Another scenario that is appropriate for using

setis another scenario where you do not care about overwriting the last value for a key. For instance if you want to document the last visitor to your site, you would store that as a key and update it each time a new visitor is at your site. You might not care who the previous visitors are; in this case, you usesetto overwrite anything that exists and replace it with the latest visit information.This method is the functional equivalent of a RDMS commit/insert.

Setwill support the following parameters which are used during the operation:Key: unique identifier for the information you want to store. This can be a string, symbol, or numeric sequence. A required parameter.

Value: the information you want to store. This can be in byte-stream, object, JSON document, or even string. A required parameter.

Options: this includes expiration, also known as TTL (time to live), which specifies how long the information remains in the data store before it is automatically flagged for removal and then deleted. You can also specify formatting options and flags.

The following shows a simple example of using set using the Ruby SDK:

c.set("foo", "bar", :ttl => 2)This operation takes the key

fooand sets the string ‘bar’ for the key which will expire after 2 seconds. This next example is part of a data loader script in PHP which reads in different JSON files in a specified directory. It then sends requests to write each file to Couchbase Server:function import($cb, $dir) { $d = dir($dir); while (false !== ($file = $d->read())) { if (substr($file, -5) != '.json') continue; echo "adding $file\n"; $json = json_decode(file_get_contents($dir . $file), true); unset($json["_id"]); echo $cb->set(substr($file, 0, -5), json_encode($json)); echo "\n"; } }The first part of this function takes a Couchbase client instance as a parameter and a directory. It then assigns the directory to a local variable

$dand opens it. Thewhileloop will continue reading each file in the directory so long as we have not finished reading all the files. In the loop we detect if the file has the.jsonfile type or not so we know to handle it, and store it. If the file is json we decode it, remove the attribute_idand then set the key as the filename with the other file contents as value. We choose this different key for better identification in our system. The following illustrates a sample JSON file which you can use with this loader:{ "_id":"beer_#40_Golden_Lager", "brewery":"Minocqua Brewing Company", "name":"#40 Golden Lager", "updated":"2010-07-22 20:00:20" }To view the complete loader application and sample data available on GitHub, see Couchbase Labs: Beer Sample

In Couchbase SDKs you can store a value with

setwhile simultaneously providing a document expiration.Setwill return a boolean of true if it is able to successfully commit data to the databases; it can also return a CAS value, which is a unique identifier for the document, and is used for optimistic locking.The associated memcached protocol in ASCII is

setwhich stores a key. For more information, see memcached protocolThe types of errors that can occur during this operation include 1) inability to connect to a node, or 2) some error exists while attempting to format a value being set. If you have a connection-level error you may need to reattempt connection, and possibly check the status of the server. If you have an error with the size of your value or formatting, you need to check the value itself, and how it is encoded and see if there are any issues that make the document incompatible with Couchbase Server.

For more information about connections and connection-level settings, see Optimizing Client Instances and Client-Side Timeouts

Add¶

addwill also write information to the Couchbase Server, but unlikeset,addwill fail if the value for a given key already exists in Couchbase Server.The reason you would want to use

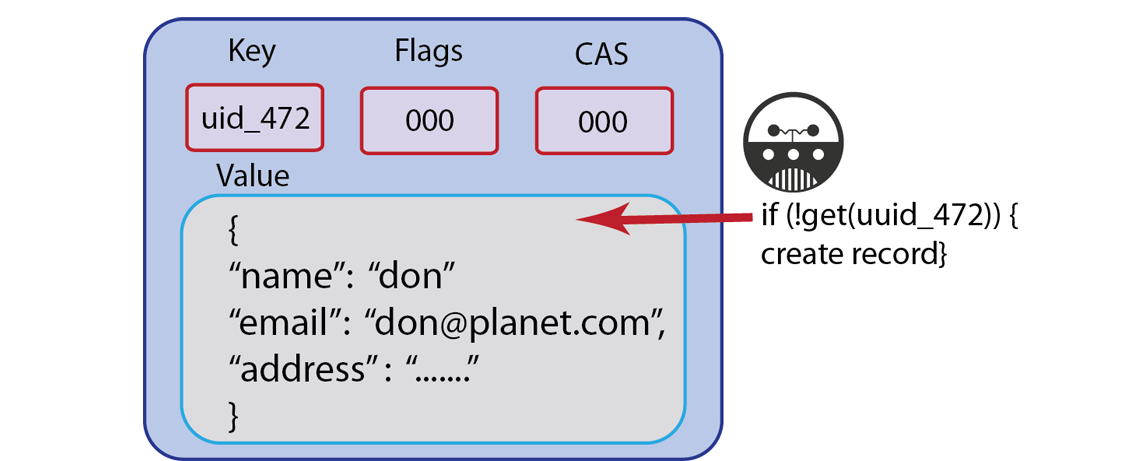

addinstead ofsetis so that you can create a new key, without accidentally overwriting an existing key with the same name. For Couchbase Server, keys must be unique for every bucket; therefore when you commit new keys to Couchbase Server, usingaddmay be preferable based on your application logic.For example, if you create an application where you store all new users with a unique username and you want to use usernames as a keys, you would want to store the new key with

addinstead ofset.

If a user already exists in your system with the unique username, you would not want to overwrite the user with a new user’s information. Instead, you could perform some real-time feedback and let the user know if the username has already been taken when the new user fills out their profile information. You can catch this type of error and report it back in your application when you use

addto create the document.Because

addfails and reports an error when a key exists, some Couchbase Server developers prefer it tosetin cases where they create a new document.#stores successfully c.add("foo", "bar") #raises Couchbase::Error::KeyExists: failed to store value #failed to store value (key="foo", error=0x0c) c.add("foo", "baz")This next example demonstrates an

addin PHP:$script_name=$_SERVER["SCRIPT_NAME"]; $script_access_count=$cb_obj->get($script_name); if($cb_obj->getResultCode() == COUCHBASE_KEY_ENOENT){ #the add will fail if it has already been added $cb_obj->add($script_name,0);In this example we try to get a script name for the script that currently runs on our web application server. We then try to retrieve any script name that is already stored in Couchbase Server. If we receive a ‘key not found’ error, we add the script name to Couchbase Server.

In Couchbase SDKs you can store a value with

addwhile simultaneously providing a document expiration.The memcached protocol equivalent for this method is

add. For more information about the underlying protocol, see memcached protocolIf you receive an unexpected ‘key exists’ error when you use

addyou should log the error, and then go back into your code to determine why the key already exists. You will want to go back into the application logic that creates these keys and find out if there is a problem in the logic. One approach to use to ensure you have unique keys for all your documents is to use a key generator that creates unique keys for all documents.There are application scenarios where you receive a ‘key exists’ error and you want that error to occur so you can handle it in your application logic. For instance, if you are handling a coupon, and if the coupon key already exists you know the coupon code has already been redeemed. In that case you can use the error to trigger a message to the user that the coupon has already been used.