Introduction to Couchbase Server¶


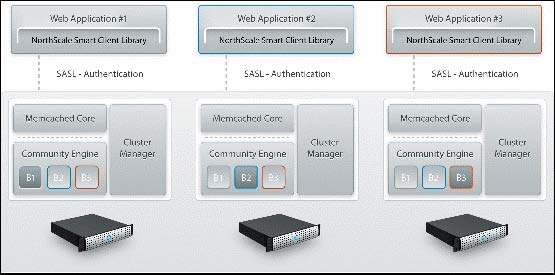

Couchbase Server is a distributed, document (“NoSQL”) database management system, designed to store the information for web applications. Couchbase Server provides a managed in-memory caching tier, so that it supports very fast create, store, update and retrieval operations.

These features are designed to support web application development where the high-performance characteristics are required to support low-latency and high throughput applications. Couchbase Server achieves this on a single server and provides support for the load to be increased almost linearly by making use of the clustered functionality built into Couchbase Server.

The cluster component distributes data over multiple servers to share the data and I/O load, while incorporating intelligence into the server and client access libraries that enable clients to quickly access the right node within the cluster for the information required. This intelligent distribution allows Couchbase Server to provide excellent scalability that can be extended simply by adding more servers as your load and application requirements increase.

For a more in-depth description of Couchbase Server, see the following sections:

The guiding principles and design goals of Couchbase Server are covered in Couchbase Server Basics.

Couchbase Server is part of the NoSQL database movement. For background information on what NoSQL is, and how this maps to Couchbase Server functionality, see Couchbase Server and NoSQL.

Information on the different components and systems in Couchbase Server, and how these map to the concepts and architecture required to understand the fundamentals of how it works, is provided in Architecture and Concepts.

Couchbase Server Basics¶

Couchbase Server is a database platform that combines the principles and components of Membase Server and Apache CouchDB. From Membase Server, Couchbase Server builds on the high performance, memory-based, document storage interface, and incorporates the core principles of being Simple, Fast, and Elastic.

-

Simple

Couchbase Server is easy to install and manage and, through its document nature and memcached protocol interface, easy to use. Because the database uses the document structure, you do not need to create or manage databases, tables and schemas. The simplified structure also means that the information can be distributed across nodes in a Couchbase Cluster automatically, without having to worry about normalizing or sharding your data to scale out performance.

-

Fast

Couchbase Server is fast, primarily because of the in-memory nature of the database. Furthermore, Couchbase Server provides quasi-deterministic latency and throughput, meaning that you can predict and rely on the speed and performance of your database without having to prepare for and cope with spikes in load and problems.

-

Elastic

Couchbase Server was built from the core with the ability to expand and distribute the load across multiple servers. This is achieved through a combination of intelligence built into the server for distributing the stored data, and complementary intelligence in the clients so that requests for data are directed to the right machine. Data is automatically redistributed across the cluster, and changing the capacity of the cluster is a case of adding or removing nodes and rebalancing the cluster.

In tandem with the elastic nature of Couchbase Server, a Couchbase Cluster also takes advantage of the clustered architecture to support high availability. All nodes in a cluster are identical, and the cluster automatically creates replicas of information across the cluster. If a node fails, the stored data will be available on another node in the cluster.

-

memcached Compatibility

memcached is a memory-based caching application that uses the notion of a document store to save important data required by applications directly in RAM. Because the information is stored entirely in RAM, the latency for storing and retrieving information is very low. As a caching solution, memcached is used by a wide range of companies, including Google, Facebook, YouTube, Twitter and Wikipedia, to help speed up their web-application performance by acting as a storage location for objects retrieved at comparative expense from a traditional SQL database.

Couchbase Server supports the same client protocol used by memcached for creating, retrieving, updating and deleting information in the database. This enables Couchbase Server to be a drop-in replacement for memcached, and this means that applications already employing memcached can take advantage of the other functionality within Couchbase Server, such as clustered and elastic distribution.

Couchbase Server and NoSQL¶

NoSQL is a somewhat unfortunate term that has been widely used to describe a class of database management systems that don’t employ a relational data model. The terminology keys off the SQL query language - a hallmark of relational database management systems. Unfortunately the query language is not the real differentiator; in fact, it is not necessarily a differentiator at all. Some NoSQL database management systems do, in fact, support the SQL query language! The fundamental difference in these systems lies not in the query language, but in the non-relational data model they employ. While non-relational database would be a more technically accurate term, it would also be more broad than the term NoSQL intends. It is interesting to note that a backronym has emerged in which NoSQL is proposed to stand for Not Only SQL. While more accurate, it is even less descriptive.

NoSQL databases are characterized by their ability to store data without first requiring one to define a database schema. In Couchbase Server, data is stored as a distributed, associative array of document IDs and contents, where the value is a blob of opaque binary data that doesn’t conform to a rigid, pre-defined schema from the perspective of the database management system itself. Additionally, and largely enabled by their schema-less nature, these systems tend to support a scale out approach to growth, increasing data and I/O capacity by adding more servers to a cluster; and without requiring any change to application software. In contrast, relational database management systems scale up by adding more capacity (CPU, Memory and Disk) to a single server to accommodate growth.

Relational databases store information in relations which must be defined, or modified, before data can be stored. A relation is simply a table of rows, where each row (also known as a tuple) in a given relation has a fixed set of attributes (columns). These columns are consistent across each row in a relation. Relations (tables) can be further connected through cross-table references. One table, CITIZENS for example, could hold rows of all individual citizens residing in a town. Another table, PARENTS, could have rows consisting of PARENT, CHILD and RELATIONSHIP fields. The first two fields could be references to rows in the CITIZENS table while the third field describes the parental relationship between the persons in the first two fields (father, mother).

Architecture and Concepts¶

In order to understand the structure and layout of Couchbase Server, you first need to understand the different components and systems that make up both an individual Couchbase Server instance, and the components and systems that work together to make up the Couchbase Cluster as a whole.

The following section provides key information and concepts that you need to understand the fast and elastic nature of the Couchbase Server database, and how some of the components work together to support a highly available and high performance database.

Nodes and Clusters¶

Couchbase Server can be used either in a standalone configuration, or in a cluster configuration where multiple Couchbase Servers are connected together to provide a single, distributed, data store.

In this description:

-

Couchbase Server or Node

A single instance of the Couchbase Server software running on a machine, whether a physical machine, virtual machine, EC2 instance or other environment.

All instances of Couchbase Server are identical, provide the same functionality, interfaces and systems, and consist of the same components.

-

Cluster

A cluster is a collection of one or more instances of Couchbase Server that are configured as a logical cluster. All nodes within the cluster are identical and provide the same functionality. Each node is capable of managing the cluster and each node can provide aggregate statistics and operational information about the cluster. User data is stored across the entire cluster through the vBucket system.

Clusters operate in a completely horizontal fashion. To increase the size of a cluster, you add another node. There are no parent/child relationships or hierarchical structures involved. This means that Couchbase Server scales linearly, in terms of both storage capacity and performance.

Cluster Manager¶

Every node within a Couchbase Cluster includes the Cluster Manager component. The Cluster Manager is responsible for the following within a cluster:

Cluster management

Node administration

Node monitoring

Statistics gathering and aggregation

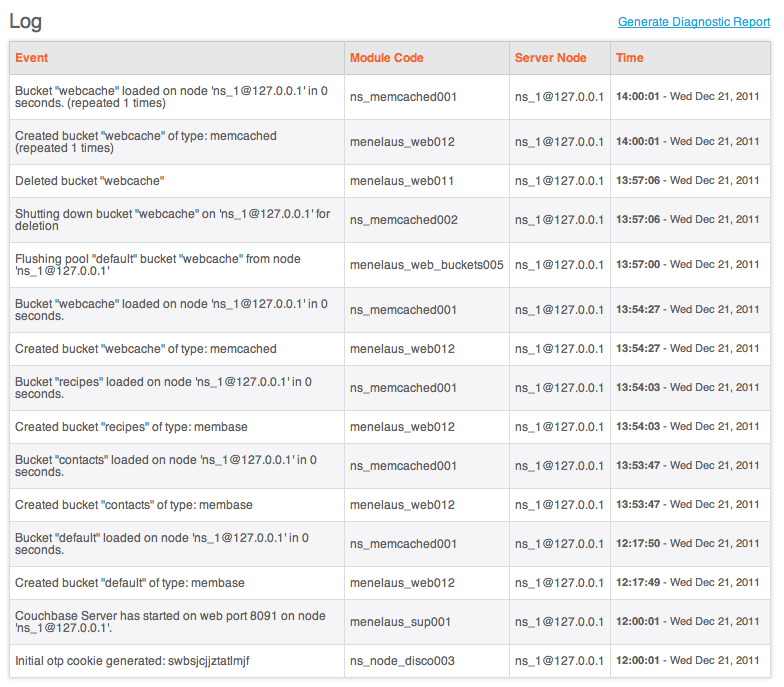

Run-time logging

Multi-tenancy

Security for administrative and client access

Client proxy service to redirect requests

Access to the Cluster Manager is provided through the administration interface (see Administration Tools) on a dedicated network port, and through dedicated network ports for client access. Additional ports are configured for inter-node communication.

Memory Quotas¶

Couchbase Server manages the memory used across different components of the system:

-

Managing Disk and Memory for Nodes in the Cluster

Couchbase Server automatically manages storing the working set between disk and memory resources for nodes in a cluster. This allows an installation to have a working set that is larger than the available RAM in the nodes participating in the cluster. To keep throughput high and latency low, Couchbase Server will always keep metadata about all items in memory.

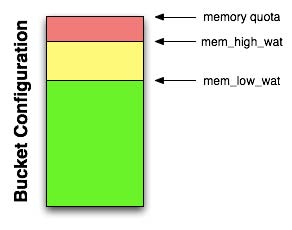

When configuring a Couchbase Server, a memory quota is set. Couchbase Server will automatically migrate items from memory to disk when the configured memory quota is reached. If those items are later accessed, they will be moved back into system memory. For efficiency purposes, these operations are performed on a regular basis in the background.

At the moment, there is no ability to define a quota for the on-disk persistent storage. It is up to the administrator to appropriately monitor the disk utilization and take action (either deleting data from Couchbase or adding capacity by upgrading the nodes themselves or adding more nodes).

Couchbase Server monitors and reports on statistics for managing disk and memory. As with any multi-tier cache, if the working set of data is greater than the available amount of the bucket RAM quota (the first level of caching), performance will drop due to disk access latencies being higher and disk throughput being lower than RAM latencies and throughput. Acceptable performance of the system is application dependent. Statistics should be monitored in case tuning adjustments are required.

-

Server Quotas

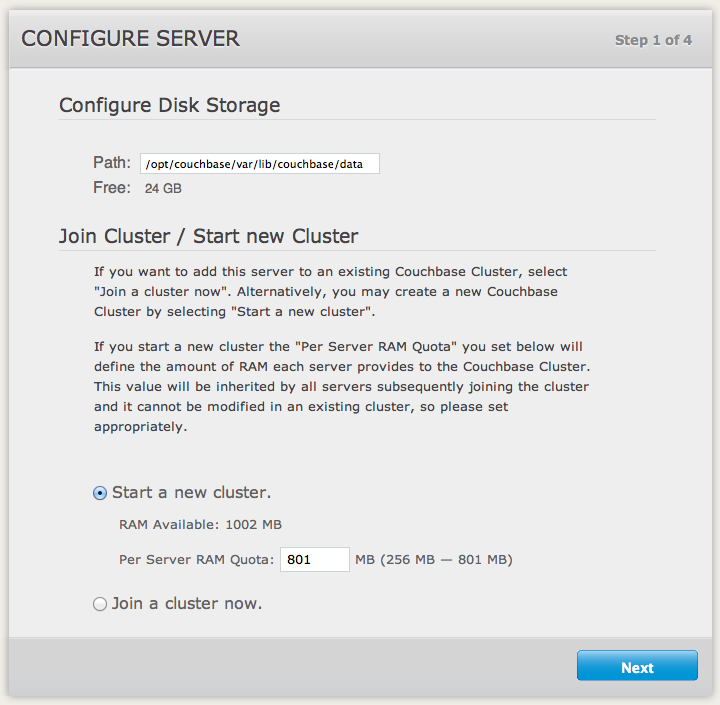

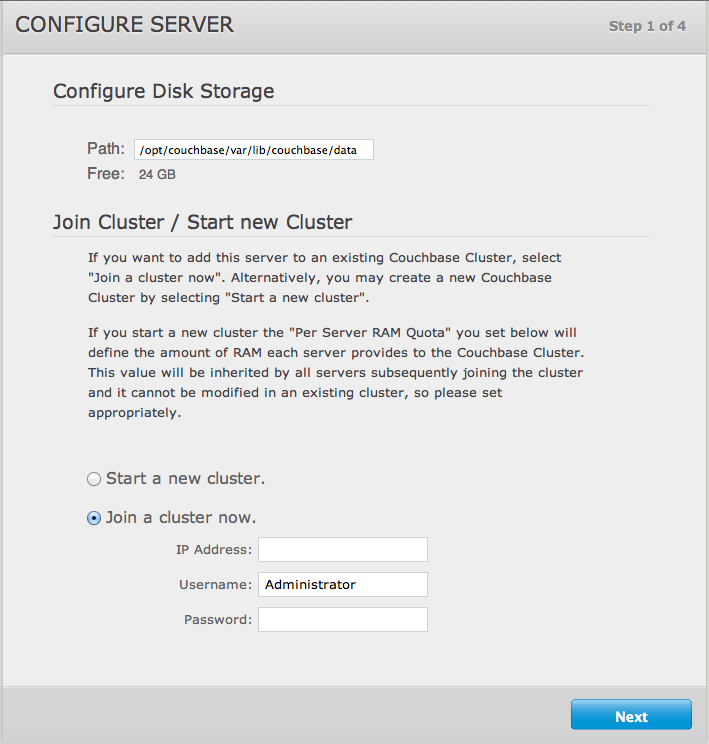

Each server node has a memory quota that defines the amount of system memory that is available to that server node on the host system. The first node in a cluster sets a memory quota that is subsequently inherited by all servers joining the cluster. The maximum memory quota set on the first server node must be less than or equal to 80% of the total physical RAM on that node. A server cannot join a cluster if it has less physical RAM than 1.25x the RAM quota (the same maximum allocation of 80% of physical RAM to the cluster). If a server that was a standalone cluster joins another cluster, the memory quota is inherited from the cluster to which the node is added.

Server nodes do not have disk quotas. System administrators are responsible for monitoring free disk space on individual server nodes. Each server node in a cluster has its own storage path - the location on disk where data will be stored. Storage paths do not need to be uniform across all server nodes in a cluster. If a server that was a standalone cluster joins another cluster, the storage path for that server remains unchanged.

-

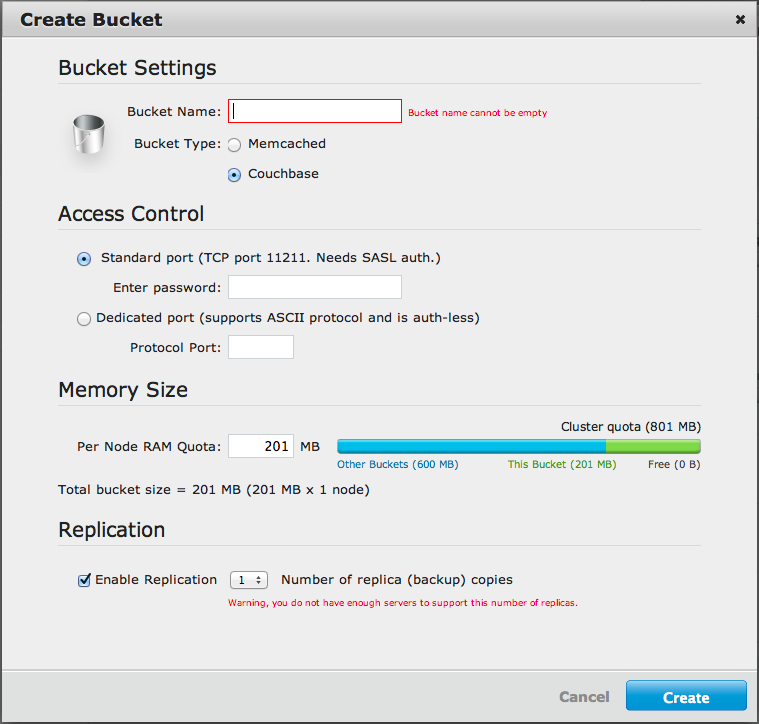

Bucket Quotas

Memory quota allocation is also controlled on a bucket-by-bucket basis. A fixed amount of memory per node is allocated for use by a bucket. Adding or removing nodes will change the size of the bucket.

For more information about creating and changing the node memory quota and bucket quotas, see Initializing Nodes and Creating and Editing Data Buckets.
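The quota rules described above can be checked with simple arithmetic. The following is a minimal sketch, not Couchbase code: the function names are hypothetical, sizes are assumed to be in megabytes, and the bucket calculation assumes the same per-node allocation on every node.

```python
def max_server_quota_mb(physical_ram_mb: int) -> int:
    """Largest server quota allowed on a node: 80% of its physical RAM."""
    return physical_ram_mb * 80 // 100


def can_join_cluster(physical_ram_mb: int, cluster_quota_mb: int) -> bool:
    """A node needs at least 1.25x the cluster's RAM quota in physical RAM.

    Integer arithmetic (ram * 4 >= quota * 5) avoids floating-point rounding.
    """
    return physical_ram_mb * 4 >= cluster_quota_mb * 5


def bucket_size_mb(per_node_bucket_quota_mb: int, node_count: int) -> int:
    """Total bucket RAM: the fixed per-node allocation times the node count."""
    return per_node_bucket_quota_mb * node_count
```

Under these assumptions, a bucket with a 1024 MB per-node quota grows from 4096 MB to 5120 MB of RAM when a fifth node joins a four-node cluster.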

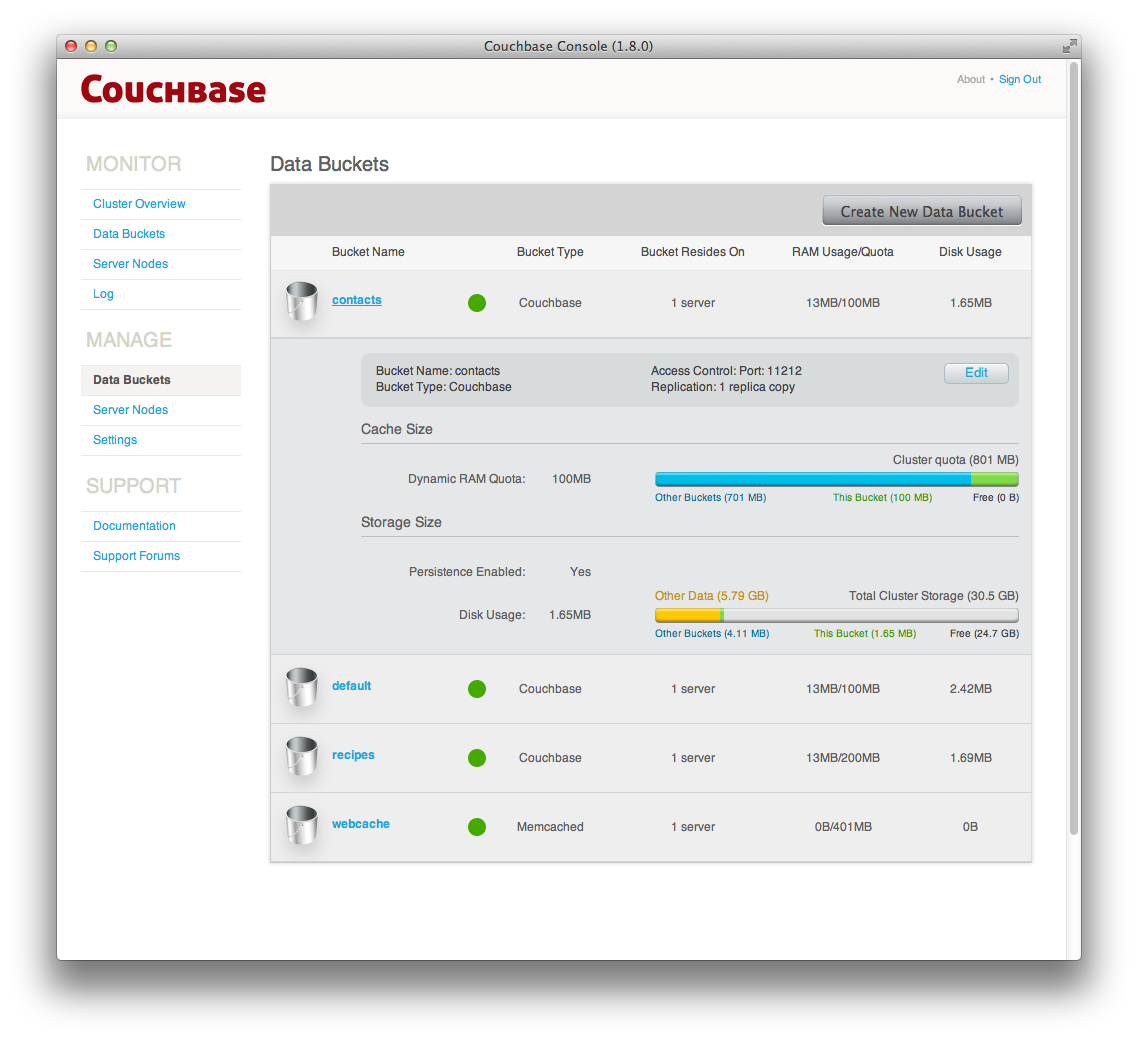

Buckets¶

Couchbase Server provides data management services using named buckets. These are isolated virtual containers for data. A bucket is a logical grouping of physical resources within a cluster of Couchbase Servers. They can be used by multiple client applications across a cluster. Buckets provide a secure mechanism for organizing, managing, and analyzing data storage resources.

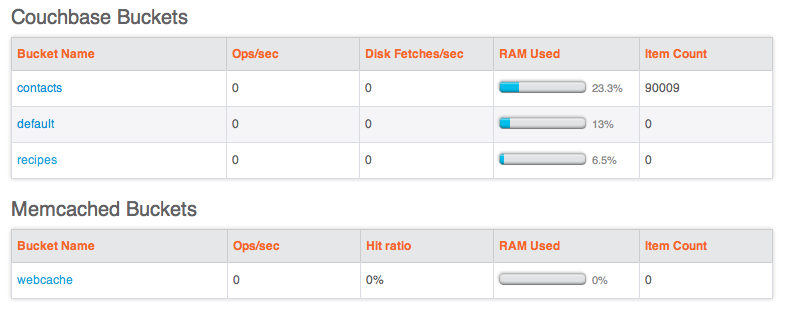

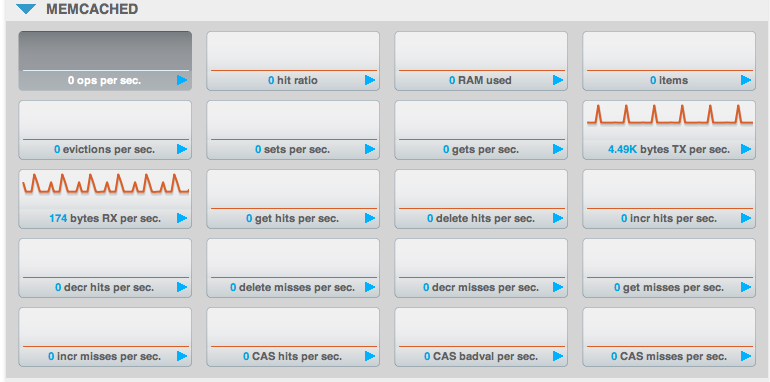

Couchbase Server provides two core types of buckets that can be created and managed. Couchbase Server collects and reports on run-time statistics by bucket type.

| Bucket Type | Description |
|---|---|
| Couchbase | Provides highly-available and dynamically reconfigurable distributed data storage, providing persistence and replication services. Couchbase buckets are 100% protocol compatible with, and built in the spirit of, the memcached open source distributed document cache. |
| Memcached | Provides a directly-addressed, distributed (scale-out), in-memory, document cache. Memcached buckets are designed to be used alongside relational database technology – caching frequently-used data, thereby reducing the number of queries a database server must perform for web servers delivering a web application. |

The different bucket types support different capabilities. Couchbase-type buckets provide a highly-available and dynamically reconfigurable distributed data store. Couchbase-type buckets survive node failures and allow cluster reconfiguration while continuing to service requests. Couchbase-type buckets provide the following core capabilities.

| Capability | Description |
|---|---|
| Caching | Couchbase buckets operate through RAM. Data is kept in RAM and persisted down to disk. Data will be cached in RAM until the configured RAM is exhausted, at which point data is ejected from RAM. If requested data is not currently in the RAM cache, it will be loaded automatically from disk. |
| Persistence | Data objects can be persisted asynchronously to hard-disk resources from memory to provide protection from server restarts or minor failures. Persistence properties are set at the bucket level. |
| Replication | A configurable number of replica servers can receive copies of all data objects in the Couchbase-type bucket. If the host machine fails, a replica server can be promoted to be the host server, providing high availability cluster operations via failover. Replication is configured at the bucket level. |
| Rebalancing | Rebalancing enables load distribution across resources and dynamic addition or removal of buckets and servers in the cluster. |

For more information on the bucket types, their configuration and accessibility, see Buckets.

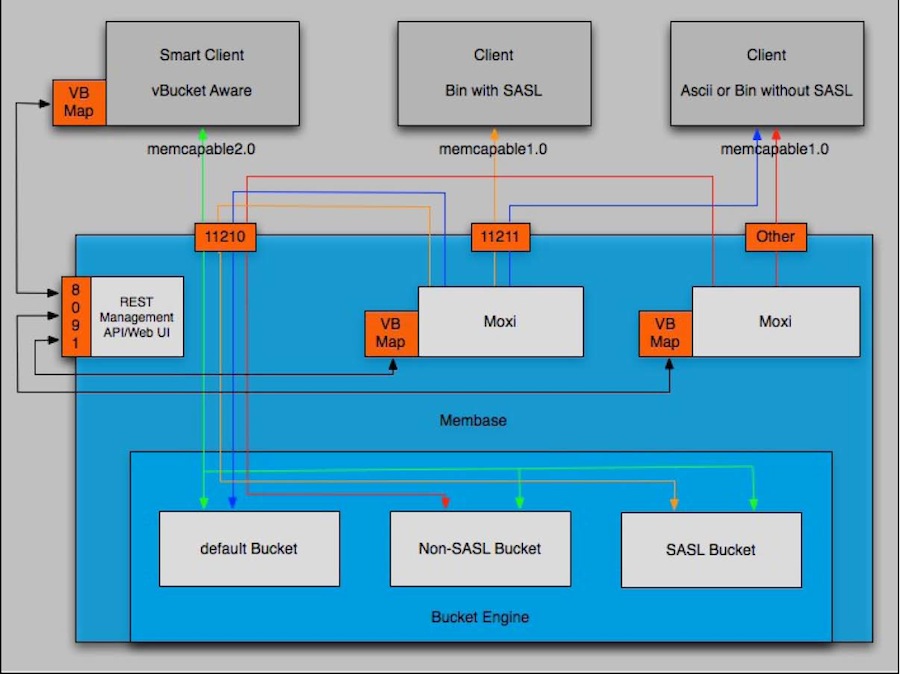

Couchbase Server leverages the memcached storage engine interface and the Couchbase Bucket Engine to enable isolated buckets that support multi-tenancy.

Smart clients discover changes in the cluster using the Couchbase Management REST API. Buckets can be used to isolate individual applications to provide multi-tenancy, or to isolate data types in the cache to enhance performance and visibility. Couchbase Server allows you to configure different ports to access different buckets, and gives you the option to access isolated buckets using either the binary protocol with SASL authentication, or the ASCII protocol with no authentication.

Couchbase Server allows you to use and mix different types of buckets (Couchbase and Memcached) as appropriate in your environment. Buckets of different types still share the same resource pool and cluster resources. Quotas for RAM and disk usage are configurable per bucket so that resource usage can be managed across the cluster. Quotas can be modified on a running cluster so that administrators can reallocate resources as usage patterns or priorities change over time.

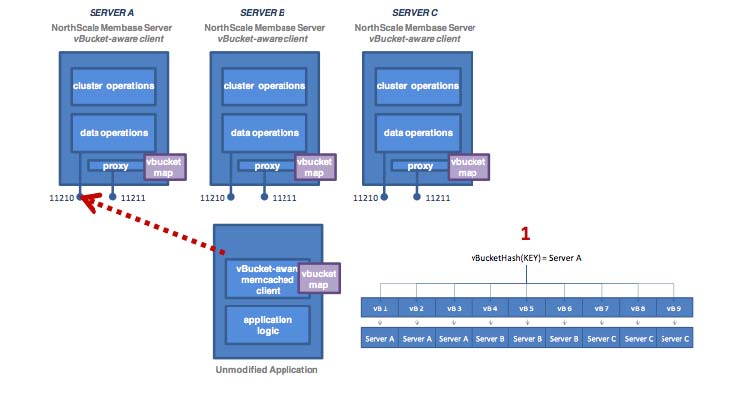

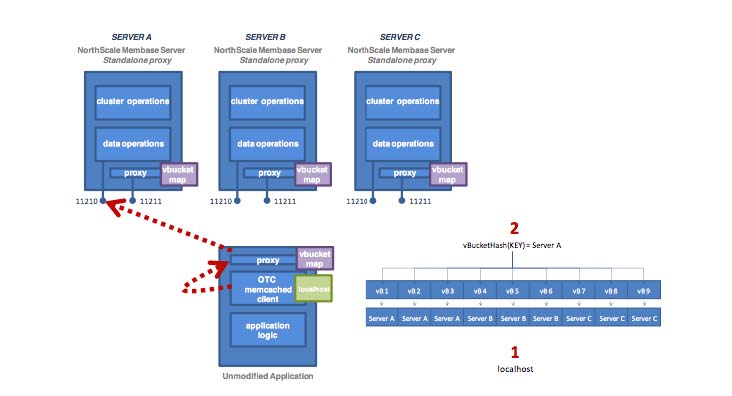

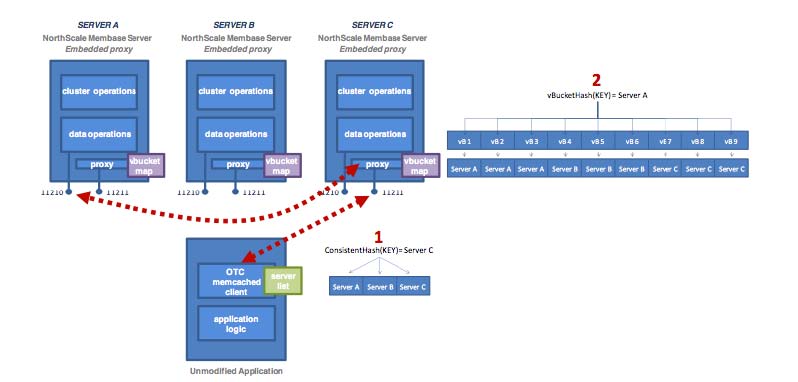

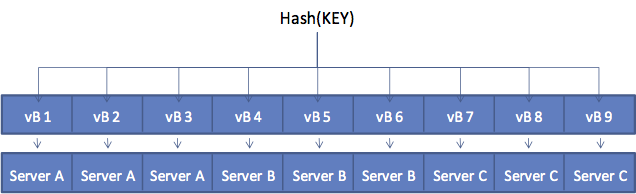

vBuckets¶

A vBucket is defined as the owner of a subset of the key space of a Couchbase cluster. These vBuckets are used to allow information to be distributed effectively across the cluster. The vBucket system is used both for distributing data, and for supporting replicas (copies of bucket data) on more than one node.

Clients access the information stored in a bucket by communicating directly with the node responsible for the corresponding vBucket. This direct access enables clients to communicate with the node storing the data, rather than using a proxy or redistribution architecture. The result is that the physical topology is abstracted from the logical partitioning of data. This architecture is what gives Couchbase Server its elasticity.

This architecture differs from the method used by memcached, which uses client-side key hashes to determine the server from a defined list. This requires active management of the list of servers, and specific hashing algorithms such as Ketama to cope with changes to the topology. The structure is also more flexible and able to cope with changes than the typical sharding arrangement used in an RDBMS environment.

vBuckets are not a user-accessible component, but they are a critical component of Couchbase Server and are vital to the availability support and the elastic nature.

Every document ID belongs to a vBucket. A mapping function is used to calculate the vBucket in which a given document belongs. In Couchbase Server, that mapping function is a hashing function that takes a document ID as input and outputs a vBucket identifier. Once the vBucket identifier has been computed, a table is consulted to lookup the server that “hosts” that vBucket. The table contains one row per vBucket, pairing the vBucket to its hosting server. A server appearing in this table can be (and usually is) responsible for multiple vBuckets.
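The two-step lookup described above (hash the document ID to a vBucket, then look up the hosting server in a table) can be sketched as follows. This is an illustration only: the use of CRC32, the figure of 1024 vBuckets, the round-robin initial assignment, and all function names are assumptions, since the text states only that a hashing function and a lookup table are used.

```python
import zlib

NUM_VBUCKETS = 1024  # assumed value, chosen for illustration


def vbucket_id(document_id: str, num_vbuckets: int = NUM_VBUCKETS) -> int:
    """Hash a document ID to a vBucket identifier (CRC32 is an assumption)."""
    return zlib.crc32(document_id.encode("utf-8")) % num_vbuckets


def build_vbucket_map(servers: list, num_vbuckets: int = NUM_VBUCKETS) -> list:
    """One row per vBucket, pairing it with a hosting server (round-robin)."""
    return [servers[vb % len(servers)] for vb in range(num_vbuckets)]


def server_for(document_id: str, vbucket_map: list) -> str:
    """Consult the table to find the server hosting the document's vBucket."""
    return vbucket_map[vbucket_id(document_id, len(vbucket_map))]
```

Because a document ID always hashes to the same vBucket, moving data between servers only requires updating the table; the hashing step never changes.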

Data in RAM¶

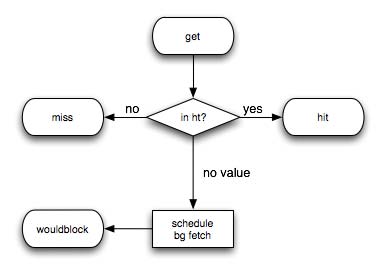

The architecture of Couchbase Server includes a built-in caching layer. This approach allows for very fast response times, since the data is initially written to RAM by the client, and can be returned from RAM to the client when the data is requested.

The effect of this design is to provide an extensive built-in caching layer which acts as a central part of the operation of the system. The client interface works through the RAM-based data store, with information stored by the clients written into RAM and data retrieved by the clients returned from RAM, or loaded from disk into RAM before being returned to the client.

This process of storing and retrieving stored data through the RAM interface ensures the best performance. For the highest performance, you should allocate the maximum amount of RAM on each of your nodes. The aggregated RAM is used across the cluster.

This is different in design from other database systems, where the information is written to the database and either a separate caching layer is employed, or the caching provided by the operating system is used to keep regularly used information in memory and accessible.

Ejection¶

Ejection is a mechanism used with Couchbase buckets. It is the process of removing data from RAM to provide room for the active and more frequently used information, and is a key part of the caching mechanism. Ejection is automatic and operates in conjunction with the disk persistence system to ensure that data in RAM has been persisted to disk and can be safely ejected from the system.

The system ensures that the data stored in RAM will already have been written to disk, so that it can be loaded back into RAM if the data is requested by a client. Ejection is a key part of keeping the frequently used information in RAM and ensuring there is space within the Couchbase RAM allocation to load that data back into RAM when the information is requested by a client.

For Couchbase buckets, data is never deleted from the system unless a client explicitly deletes the document from the database or the expiration value for the document is reached. Instead, the ejection mechanism removes it from RAM, keeping a copy of that information on disk.
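The rule that only persisted data may be ejected can be illustrated with a toy model. This is a sketch under simplifying assumptions, not the server's implementation: the class and method names are hypothetical, capacity is counted in items rather than bytes, and the oldest inserted items are ejected first instead of the least frequently used ones.

```python
class EjectingCache:
    """Toy RAM cache that ejects only items already persisted to disk."""

    def __init__(self, ram_capacity: int):
        self.ram_capacity = ram_capacity  # maximum number of items kept in RAM
        self.ram = {}                     # doc_id -> value held in memory
        self.disk = {}                    # doc_id -> value persisted on disk
        self.dirty = set()                # doc_ids written but not yet persisted

    def set(self, doc_id, value):
        """Writes always land in RAM first and are marked as not yet persisted."""
        self.ram[doc_id] = value
        self.dirty.add(doc_id)

    def persist(self):
        """Stand-in for the background process that writes dirty items to disk."""
        for doc_id in list(self.dirty):
            self.disk[doc_id] = self.ram[doc_id]
        self.dirty.clear()

    def eject(self):
        """Drop persisted items from RAM until within capacity; return their IDs."""
        ejected = []
        for doc_id in list(self.ram):
            if len(self.ram) <= self.ram_capacity:
                break
            if doc_id not in self.dirty:  # never eject data that is not on disk
                del self.ram[doc_id]
                ejected.append(doc_id)
        return ejected

    def get(self, doc_id):
        """Return from RAM, loading an ejected item back from disk if needed."""
        if doc_id not in self.ram:
            if doc_id not in self.disk:
                return None
            self.ram[doc_id] = self.disk[doc_id]
        return self.ram[doc_id]
```

In this model an ejected item is never lost: a later `get` finds the copy on disk and loads it back into RAM.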

Expiration¶

Each document stored in the database has an optional expiration value (TTL, time to live). The default is for there to be no expiration, i.e. the information will be stored indefinitely. The expiration can be used for data that naturally has a limited life that you want to be automatically deleted from the entire database.

The expiration value is user-specified on a document basis at the point when the data is stored. The expiration can also be updated when the data is updated, or explicitly changed through the Couchbase protocol. The expiration time can either be specified as a relative time (for example, in 60 seconds), or absolute time (31st December 2012, 12:00pm).
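As an illustration of relative versus absolute expiration, the sketch below resolves an expiry value to an absolute Unix timestamp. It assumes the memcached protocol convention, in which values up to 30 days are treated as a number of seconds from now and larger values as absolute Unix timestamps; the function name is hypothetical, and you should confirm the convention for your client library.

```python
from datetime import datetime, timezone

THIRTY_DAYS = 30 * 24 * 60 * 60  # threshold used by the memcached convention


def resolve_expiry(exptime: int, now: int):
    """Return the absolute Unix time at which a document expires, or None.

    exptime == 0       -> no expiration (the default)
    exptime <= 30 days -> relative: seconds from `now`
    otherwise          -> already an absolute Unix timestamp
    """
    if exptime == 0:
        return None
    if exptime <= THIRTY_DAYS:
        return now + exptime
    return exptime


# Absolute form of the example in the text: 31st December 2012, 12:00pm (UTC assumed).
ABSOLUTE_EXAMPLE = int(datetime(2012, 12, 31, 12, 0, tzinfo=timezone.utc).timestamp())
```

One consequence of this convention is that a relative expiry longer than 30 days must be converted to an absolute timestamp by the application before it is sent.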

Typical uses for an expiration value include web session data, where you want the actively stored information to be removed from the system if the user activity has stopped and not been explicitly deleted. The data will time out and be removed from the system, freeing up RAM and disk for more active data.

Eviction¶

Eviction is the process of removing information entirely from memory for memcached buckets. The memcached system uses a least recently used (LRU) algorithm to remove data from the system entirely when it is no longer used. Within a memcached bucket, LRU data is removed to make way for new data, with the information being deleted, since there is no persistence for memcached buckets.
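A least recently used policy of this kind can be sketched in a few lines. The class below is a generic LRU cache for illustration, not memcached's implementation; the class name is hypothetical and capacity is counted in items rather than bytes.

```python
from collections import OrderedDict


class LRUCache:
    """Toy cache that evicts the least recently used item when full."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.items = OrderedDict()  # ordered from least to most recently used

    def get(self, key):
        if key not in self.items:
            return None             # evicted data is gone; nothing is on disk
        self.items.move_to_end(key)  # mark as most recently used
        return self.items[key]

    def set(self, key, value):
        if key in self.items:
            self.items.move_to_end(key)
        self.items[key] = value
        while len(self.items) > self.capacity:
            self.items.popitem(last=False)  # drop the least recently used item
```

Unlike ejection in a Couchbase bucket, an evicted item here cannot be recovered, which matches the lack of persistence described above.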

Disk Storage¶

For performance, Couchbase Server prefers to store and provide information to clients using RAM. However, this is not always possible or desirable in an application. Instead, what is required is the ‘working set’ of information stored in RAM and immediately available for supporting low-latency responses.

Couchbase Server stores data on disk, in addition to keeping as much data as possible in RAM as part of the caching layer used to improve performance. Disk persistence allows for easier backup/restore operations, and allows datasets to grow larger than the built-in caching layer.

Couchbase automatically moves data between RAM and disk (asynchronously in the background) in order to keep regularly used information in memory, and less frequently used data on disk. Couchbase constantly monitors the information accessed by clients, keeping the active data within the caching layer.

The process of removing data from the caching layer to make way for the actively used information is called ejection, and is controlled automatically through thresholds set on each configured bucket in your Couchbase Server Cluster.

The use of disk storage presents an issue in that a client request for an individual document ID must know whether the information exists or not. Couchbase Server achieves this using metadata structures. The metadata holds information about each document stored in the database and this information is held in RAM. This means that the server can always return a ‘document ID not found’ response for an invalid document ID, while returning the data for an item either in RAM (in which case it is returned immediately), or after the item has been read from disk (after a delay, or until a timeout has been reached).

The process of moving information to disk is asynchronous. Data is ejected to disk from memory in the background while the server continues to service active requests. During sequences of high writes to the database, clients will be notified that the server is temporarily out of memory until enough items have been ejected from memory to disk.
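A client can cope with the temporary out-of-memory notification by retrying the write after a short pause. The sketch below shows one way to do this with exponential backoff; the `TemporaryFailure` exception, the `store` callable and the function name are all hypothetical stand-ins, because the actual error type depends on the client library you use.

```python
import time


class TemporaryFailure(Exception):
    """Hypothetical error raised when the server is temporarily out of memory."""


def store_with_retry(store, doc_id, value, attempts=5, base_delay=0.01,
                     sleep=time.sleep):
    """Call store(doc_id, value), backing off and retrying on TemporaryFailure.

    The delay doubles after each failed attempt; the last failure is re-raised.
    """
    for attempt in range(attempts):
        try:
            return store(doc_id, value)
        except TemporaryFailure:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * (2 ** attempt))
```

The `sleep` parameter is injectable so that the retry schedule can be tested without waiting; in normal use the default `time.sleep` applies.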

Similarly, when the server identifies an item that needs to be loaded from disk because it is not in active memory, the process is handled by a background process that processes the load queue and reads the information back from disk and into memory. The client is made to wait until the data has been loaded back into memory before the information is returned.

The asynchronous nature and use of queues in this way enables reads and writes to be handled at a very fast rate, while removing the typical load and performance spikes that would otherwise cause a traditional RDBMS to produce erratic performance.

Warmup¶

When Couchbase Server is re-started, or when it is started after a restore from backup, the server goes through a warm-up process. The warm-up loads data from disk into RAM, making the data available to clients.

The warmup process must complete before clients can be serviced. Depending on the size and configuration of your system, and the amount of data that you have stored, the warmup may take some time to load all of the stored data into memory.

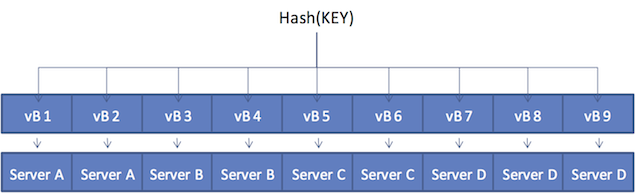

Rebalancing¶

The way data is stored within Couchbase Server is through the distribution offered by the vBucket structure. If you want to expand or shrink your Couchbase Server cluster then the information stored in the vBuckets needs to be redistributed between the available nodes, with the corresponding vBucket map updated to reflect the new structure. This process is called rebalancing.

Rebalancing is a deliberate process that you need to initiate manually when the structure of your cluster changes. The rebalance process changes the allocation of the vBuckets used to store the information and then physically moves the data between the nodes to match the new structure.

The rebalancing process can take place while the cluster is running and servicing requests. Clients using the cluster read and write to the existing structure with the data being moved in the background between nodes. Once the moving process has been completed, the vBucket map is updated and communicated to the smart clients and the proxy service (Moxi).

The result is that the distribution of data across the cluster has been rebalanced, or smoothed out, so that the data is evenly distributed across the database, taking into account the data and replicas of the data required to support the system.
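The effect of a rebalance on the vBucket map can be sketched as follows. This is a simplified illustration, not the server's algorithm: the function name and the list-based map are assumptions, replicas are ignored, and the plan simply keeps each vBucket where it is unless its node has left the cluster or already holds its even share.

```python
def rebalance(vbucket_map, nodes):
    """Compute an evenly distributed map and the moves needed to reach it.

    vbucket_map: list where index = vBucket ID and value = current node.
    nodes:       list of nodes that should own vBuckets after the rebalance.
    Returns (new_map, moves) with moves as (vbucket_id, source, destination).
    """
    base, extra = divmod(len(vbucket_map), len(nodes))
    # Even share per node; the first `extra` nodes take one additional vBucket.
    target = {node: base + (1 if i < extra else 0) for i, node in enumerate(nodes)}
    counts = {node: 0 for node in nodes}

    to_move = []
    for vb, owner in enumerate(vbucket_map):
        if owner in counts and counts[owner] < target[owner]:
            counts[owner] += 1      # vBucket stays where it is
        else:
            to_move.append(vb)      # owner removed or over its share

    new_map = list(vbucket_map)
    moves = []
    for vb in to_move:
        dest = next(n for n in nodes if counts[n] < target[n])
        moves.append((vb, vbucket_map[vb], dest))
        new_map[vb] = dest
        counts[dest] += 1
    return new_map, moves
```

In this sketch the old map remains usable while the listed moves are carried out, and the new map is published only once they complete, mirroring the sequence described above.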

Replicas and Replication¶

In addition to distributing information across the cluster for the purposes of even data distribution and performance, Couchbase Server also includes the ability to create additional replicas of the data. These replicas work in tandem with the vBucket structure, with replicas of individual vBuckets distributed around the cluster. Distribution of replicas is handled in the same way as the core data, with portions of the data distributed around the cluster to prevent a single point of failure.

The replication of this data around this cluster is entirely peer-to-peer based, with the information being exchanged directly between nodes in the cluster. There is no topology, hierarchy or master/slave relationship. When the data is written to a node within the cluster, the data is stored directly in the vBucket and then distributed to one or more replica vBuckets simultaneously using the TAP system.

In the event of a failure of one of the nodes in the system, the replica vBuckets are enabled in place of the vBuckets that were hosted on the failed node. The process is near-instantaneous. Because the replicas are populated at the same time as the original data, there is no need for the data to be copied over; the replica vBuckets are there waiting to be enabled with the data already within them. The replica vBuckets are enabled and the vBucket structure updated so that clients now communicate with the updated vBucket structure.

Replicas are configured on each bucket. You can configure different buckets to contain different numbers of replicas according to the required safety level for your data. Replicas are only enabled once the number of nodes within your cluster support the required number of replicas. For example, if you configure three replicas on a bucket, the replicas will only be enabled once you have four nodes.

The number of replicas for a bucket cannot be changed after the bucket has been created.

Failover¶

Information is distributed around a cluster using a series of replicas. For Couchbase buckets you can configure the number of replicas (complete copies of the data stored in the bucket) that should be kept within the Couchbase Server Cluster.

In the event of a failure in a server (either due to transient failure, or for administrative purposes), you can use a technique called failover to indicate that a node within the Couchbase Cluster is no longer available, and that the replica vBuckets for the server are enabled.

The failover process contacts each server that was acting as a replica and updates the internal table that maps client requests for documents to an available server.
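The table update performed during failover can be pictured with a small sketch. The layout used here, a list of server chains in which the first entry is the active server and the rest hold replicas, is an assumption made for illustration, as is the function name.

```python
def fail_over(vbucket_map, failed_node):
    """Return a new map with failed_node removed from every vBucket chain.

    Each chain is [active, replica1, ...]. Removing a failed active server
    leaves the first surviving replica at the head of the chain, which
    promotes it to active without copying any data.
    """
    return [[node for node in chain if node != failed_node]
            for chain in vbucket_map]
```

For example, failing over `"a"` in `[["a", "b"], ["b", "c"], ["c", "a"]]` leaves `"b"` as the active server for the first vBucket, while the third vBucket keeps its active server and loses its replica.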

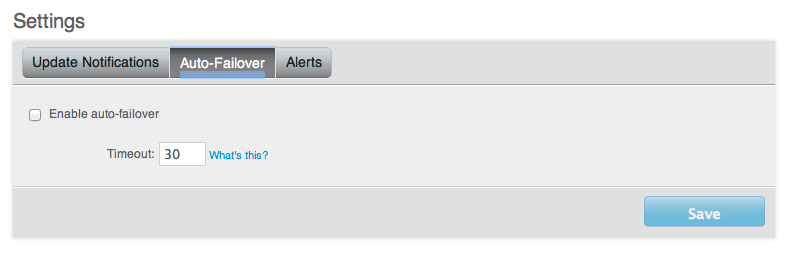

Failover can be performed manually, or you can use the built-in automatic failover that reacts after a preset time when a node within the cluster becomes unavailable.

For more information, see Node Failover.

TAP¶

The TAP protocol is an internal part of the Couchbase Server system and is used in a number of different areas to exchange data throughout the system. TAP provides a stream of data of the changes that are occurring within the system.

TAP is used during replication, to copy data between vBuckets used for replicas. It is also used during the rebalance procedure to move data between vBuckets and redistribute the information across the system.

Client Interface¶

Within Couchbase Server, the techniques and systems used to get information into and out of the database differ according to the level and volume of data that you want to access. The different methods can be identified according to the base operations of Create, Retrieve, Update and Delete:

-

Create

Information is stored into the database using the memcached protocol interface to store a document against a specified document ID. Bulk operations for setting the value of a large number of documents at the same time are available, and these are more efficient than multiple smaller requests.

The value stored can be any binary value, including structured and unstructured strings, serialized objects (from the native client language), and native binary data (for example, images or audio).

-

Retrieve

To retrieve a value, you must know the document ID used to store it. You can then use the memcached protocol (or an appropriate memcached-compatible client library) to retrieve the value stored against that document ID. You can also perform bulk operations.

-

Update

To update information in the database, you must use the memcached protocol interface. The memcached protocol includes functions to directly update the entire contents, and also to perform simple operations, such as appending information to the existing record, or incrementing and decrementing integer values.

-

Delete

To delete information from Couchbase Server you need to use the memcached protocol which includes an explicit delete command to remove a document from the server.

However, Couchbase Server also allows information to be stored in the database with an expiry value. The expiry value states when a document should be automatically deleted from the entire database, and can be specified either as a relative time (for example, in 60 seconds) or as an absolute time (for example, 31st December 2012, 12:00pm).

The methods of creating, updating and retrieving information are critical to the way you work with storing data in Couchbase Server.
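The difference between relative and absolute expiry values can be illustrated with a short sketch. It assumes that Couchbase Server follows the usual memcached-protocol convention, in which values of up to 30 days are treated as offsets in seconds and larger values as absolute Unix timestamps. The function name `resolve_expiry` is hypothetical and not part of any client library.

```python
import calendar
import time

# Assumed memcached-protocol convention: expiry values up to 30 days are
# relative offsets in seconds; larger values are absolute Unix timestamps.
RELATIVE_EXPIRY_LIMIT = 60 * 60 * 24 * 30

# The absolute example from the text (31st December 2012, 12:00pm), taken as UTC.
EXAMPLE_ABSOLUTE_EXPIRY = calendar.timegm((2012, 12, 31, 12, 0, 0))


def resolve_expiry(exptime, now=None):
    """Return the Unix time at which a document expires (hypothetical helper).

    Returns None when exptime is 0, which means the document never expires.
    """
    if now is None:
        now = int(time.time())
    if exptime == 0:
        return None
    if exptime <= RELATIVE_EXPIRY_LIMIT:
        return now + exptime  # relative: "in 60 seconds"
    return exptime  # absolute: a fixed point in time
```

With this convention, `resolve_expiry(60)` yields a time one minute from now, while `resolve_expiry(EXAMPLE_ABSOLUTE_EXPIRY)` returns the timestamp unchanged.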

Administration Tools¶

Couchbase Server was designed to be as easy to use as possible, and does not require constant attention. Administration is nevertheless available through a number of different tools and systems. For a list of the most common administration tasks, see Administration Tasks.

Couchbase Server includes three solutions for managing and monitoring your Couchbase Server and cluster:

-

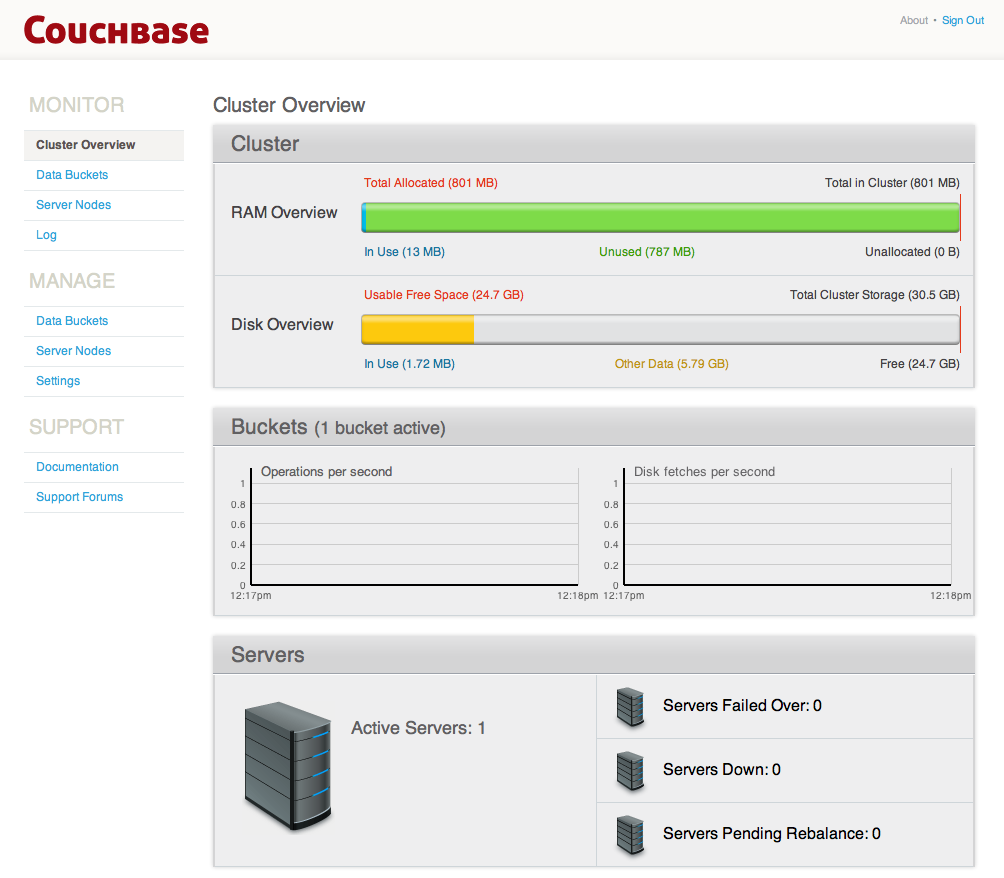

Web Administration Console

Couchbase Server includes a built-in web-administration console that provides a complete interface for configuring, managing, and monitoring your Couchbase Server installation.

For more information, see Web Console for Administration.

-

Administration REST API

In addition to the Web Administration console, Couchbase Server incorporates a management interface exposed through the standard HTTP REST protocol. This REST interface can be called from your own custom management and administration scripts to support different operations.

Full details are provided in REST API for Administration.

-

Command Line Interface

Couchbase Server includes a suite of command-line tools that provide information and control over your Couchbase Server and cluster installation. These can be used in combination with your own scripts and management procedures to provide additional functionality, such as automated failover, backups and other procedures. The command-line tools make use of the REST API.

For information on the command-line tools available, see Command-line Interface for Administration.

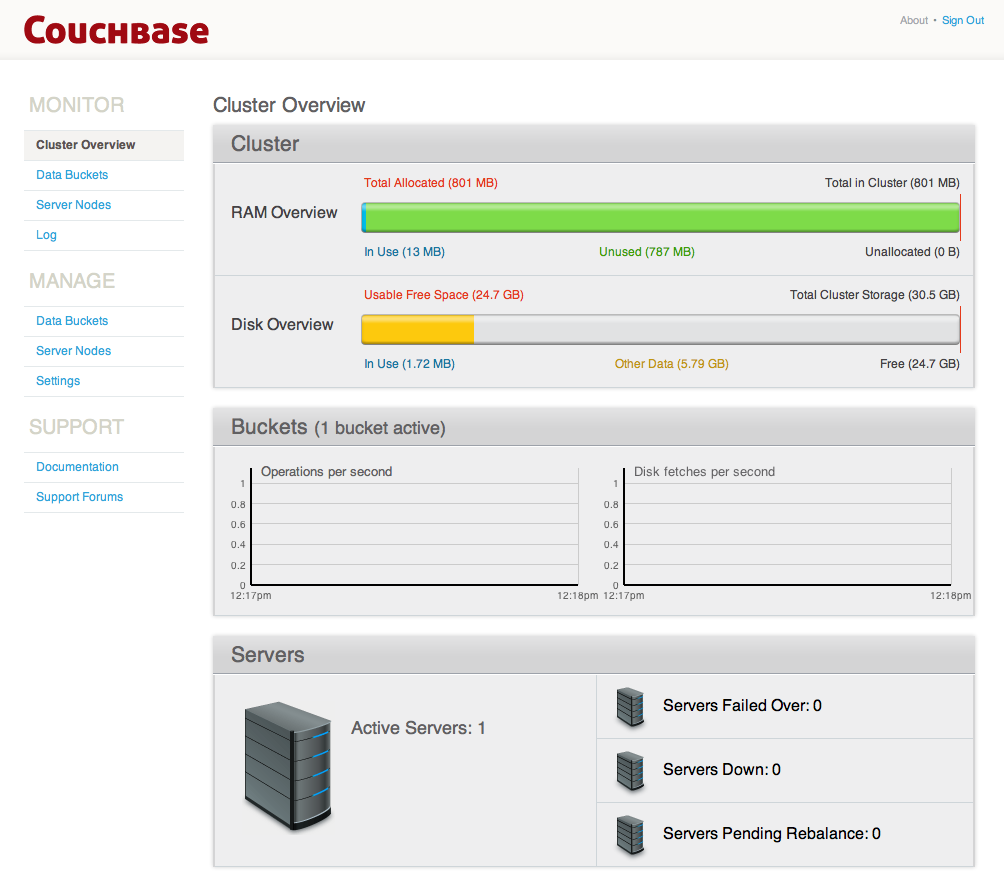

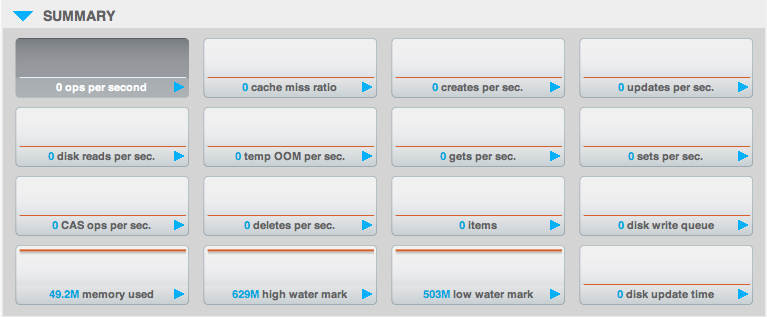

Statistics and Monitoring¶

To help you understand what your cluster is doing and how it is performing, Couchbase Server incorporates a complete set of statistical and monitoring information. The statistics are provided through all of the administration interfaces. Within the Web Administration Console, a complete suite of statistics is provided, including built-in real-time graphing and performance data.

The statistics are divided into a number of groups, allowing you to identify different states and performance information within your cluster:

-

By Node

Node statistics show CPU, RAM and I/O numbers on each of the servers and across your cluster as a whole. This information can be used to help identify performance and loading issues on a single server.

-

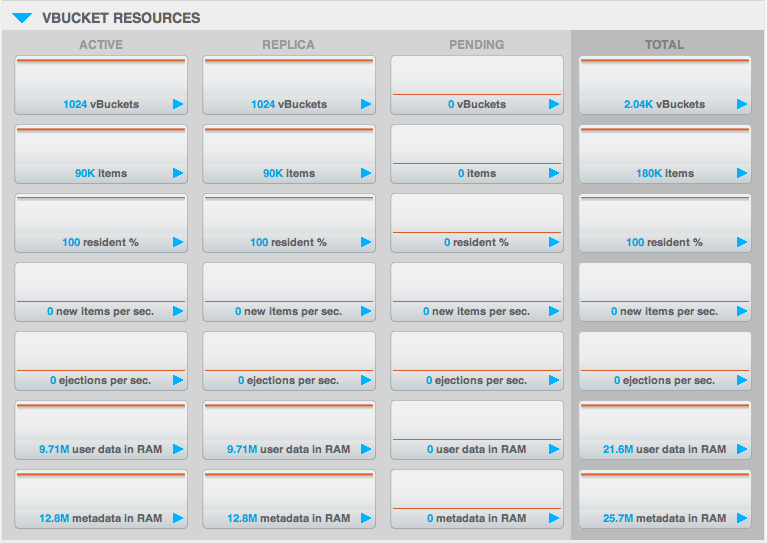

By vBucket

The vBucket statistics show the usage and performance numbers for the vBuckets used to store information in the cluster. These numbers are useful to determine whether you need to reconfigure your buckets or add servers to improve performance.

-

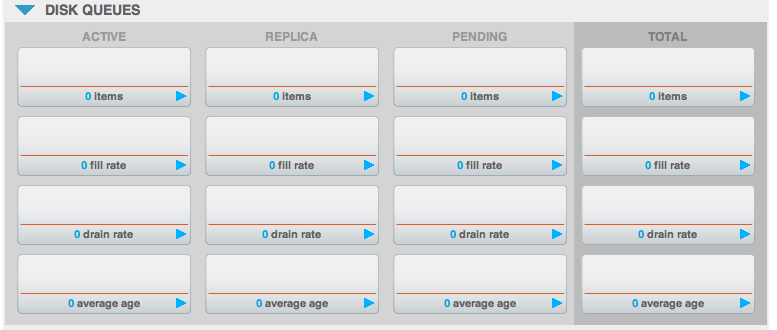

By Disk Queues

These statistics monitor the queues used to read and write information to disk and between replicas. This information can be helpful in determining whether you should expand your cluster to reduce disk load.

-

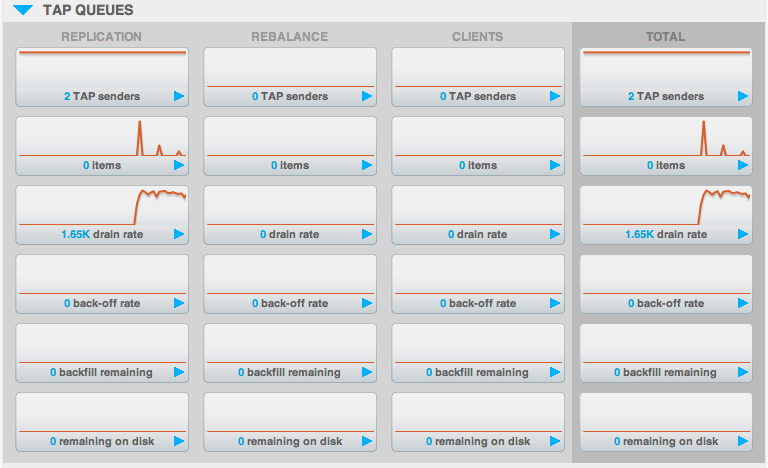

By TAP Queues

The TAP interface is used to monitor changes and updates to the database. TAP is used internally by Couchbase to provide replication between Couchbase nodes, but can also be used by clients for change notifications.

In nearly all cases the statistics can be viewed either for the cluster as a whole, so that you can monitor the overall RAM or disk usage for a given bucket, or for an individual server, so that you can identify issues within a single machine.

Getting Started¶

To start using Couchbase Server, you need to follow these steps:

Prepare your target system by ensuring that you meet the system requirements. See Preparation.

Install Couchbase Server using one of the available binary distributions. See Installing Couchbase Server.

For more information on upgrading Couchbase Server from a previous version, see Upgrading to Couchbase Server 1.8.

Test the installation by connecting to the Couchbase Server and storing some data using the native Memcached protocol. See Testing Couchbase Server.

Set up the new Couchbase Server system by completing the web-based setup instructions. See Setting up Couchbase Server.
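As an illustration of the testing step above, the following sketch builds memcached text-protocol requests and sends them over a plain socket. The helper names are hypothetical, and the host and port you pass in are assumptions about your own installation; the request layout follows the standard memcached text protocol.

```python
import socket


def build_set_command(key, value, flags=0, exptime=0):
    """Build a memcached text-protocol 'set' request as bytes."""
    data = value.encode("utf-8")
    header = "set %s %d %d %d\r\n" % (key, flags, exptime, len(data))
    return header.encode("ascii") + data + b"\r\n"


def build_get_command(key):
    """Build a memcached text-protocol 'get' request as bytes."""
    return ("get %s\r\n" % key).encode("ascii")


def store_and_fetch(host, port, key, value):
    """Send a set followed by a get and return the raw replies.

    Requires a running server that accepts the text protocol on host:port.
    """
    with socket.create_connection((host, port), timeout=5) as conn:
        conn.sendall(build_set_command(key, value))
        stored = conn.recv(1024)  # a successful store replies with STORED
        conn.sendall(build_get_command(key))
        fetched = conn.recv(4096)
    return stored, fetched
```

Against a running node, a call such as `store_and_fetch("localhost", 11211, "test_key", "hello")` would exercise the round trip; port 11211 is used here only because it is the conventional memcached port and appears in the installer's firewall message.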

Preparation¶

Heterogeneous or mixed deployments (deployments with both Linux and Windows server nodes) are not supported at this time. When deploying to multiple systems, it is recommended that all systems run the same operating system.

When running Couchbase Server your system should meet or exceed the following system requirements.

Supported Platforms¶

The following operating systems are supported:

RedHat Enterprise Linux 5.2 (Deprecated). Requires additional third-party libraries and packages.

RedHat Enterprise Linux 5.4 (32-bit and 64-bit)

Ubuntu Linux 10.04 (32-bit and 64-bit)

Ubuntu Linux 11.10 (32-bit and 64-bit) Developer Only

Windows Server 2008 R2 (32-bit and 64-bit)

Mac OS X 10.5 or higher (minimum), 10.6 or higher preferred (64-bit only) Developer Only

Use of SELinux¶

Couchbase Server does not currently operate when SELinux is enabled. You should disable SELinux on each node in the cluster to prevent problems with the operation of Couchbase Server. For more information on disabling SELinux, see How to Disable SELinux.

Hardware Requirements¶

The following hardware requirements are recommended for installation:

Quad-core, 64-bit CPU running at 3GHz

16GB RAM (physical)

Block-based storage device (hard disk, SSD, EBS, iSCSI). Network filesystems (e.g. CIFS, NFS) are not supported.

A minimum specification machine should have the following characteristics:

Dual-core CPU running at 2GHz

4GB RAM (physical)

For development and testing purposes, a CPU and RAM configuration lower than the minimum specified can be used. This can be as low as 256MB of free RAM (beyond operating system requirements) and a single CPU core.

However, you should not use a configuration lower than that specified above in production. Performance on machines lower than the above specification will be significantly lower and should not be used as an indication of the performance on a production machine.

You must have enough memory to run your operating system and the memory reserved for use by Couchbase Server. For example, if you want to dedicate 8GB of RAM to Couchbase Server you must have at least an additional 128MB of RAM to host your operating system. If you are running additional applications and servers, you will need additional RAM.
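The memory arithmetic above can be sketched as a small check. The 128MB operating system reserve comes from the example in the text; the function names and the `other_apps_mb` parameter are illustrative assumptions.

```python
# Minimum extra RAM quoted in the text for hosting the operating system.
MIN_OS_RESERVE_MB = 128


def required_ram_mb(couchbase_quota_mb, other_apps_mb=0):
    """Total physical RAM needed: Couchbase quota + OS reserve + other software."""
    return couchbase_quota_mb + MIN_OS_RESERVE_MB + other_apps_mb


def has_enough_ram(physical_mb, couchbase_quota_mb, other_apps_mb=0):
    """Return True when the machine can host the requested Couchbase quota."""
    return physical_mb >= required_ram_mb(couchbase_quota_mb, other_apps_mb)
```

For the 8GB example, `required_ram_mb(8 * 1024)` gives 8320MB, so a machine with exactly 8GB of physical RAM would not be sufficient.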

Storage Requirements¶

For running Couchbase Server you must have the following storage available:

100MB for application logging

Disk space to match your physical RAM requirements for persistence of information

Web Browser (for administration)¶

The Couchbase Server administration interface is supported using the following Web browsers, with JavaScript support enabled:

-

Mozilla Firefox 3.6 or higher

To enable JavaScript, select the Enable JavaScript option within the Content panel of the application preferences.

-

Safari 5 or higher

To enable JavaScript, use the checkbox on the security tab of the application preferences.

-

Google Chrome 11 or higher

To enable JavaScript, use the Allow all sites to run JavaScript (recommended) option within the Content button of the Under the Hood section of the application preferences.

-

Internet Explorer 8 or higher

To enable JavaScript, enable Active Scripting within the Custom Level section of the Security section of the Internet Options item of the Tools menu.

Network Ports¶

Couchbase Server uses a number of different network ports for communication between the different components of the server, and for communicating with clients that access the data stored in the Couchbase cluster. The ports listed must be available on the host for Couchbase Server to run and operate correctly.

Couchbase Server will configure these ports automatically, but you must ensure that your firewall or IP tables configuration allows communication on the specified ports for each usage type.

The table below lists the ports used for the different types of communication with Couchbase Server. The communication types are:

-

Node to Node

Where noted, these ports are used by Couchbase Server for communication between all nodes within the cluster. You must have these ports open on all nodes to enable them to communicate with each other.

-

Node to Client

Where noted, these ports should be open between each node within the cluster and any client nodes accessing data within the cluster.

-

Cluster Administration

Where noted, these ports should be open and accessible to allow administration, whether using the REST API, command-line clients, or a Web browser.

| Port | Purpose | Node to Node | Node to Client | Cluster Administration |

|---|---|---|---|---|

| 8091 | Web Administration Port | Yes | Yes | Yes |

| 11209 | Internal Cluster Port | Yes | No | No |

| 11210 | Internal Cluster Port | Yes | Yes | No |

| 4369 | Erlang Port Mapper (epmd) | Yes | No | No |

| 21100 to 21199 (inclusive) | Node data exchange | Yes | No | No |
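To check a firewall configuration against the table, the port list can be encoded and probed from another machine. The following is a minimal sketch: the table data is copied from above, while the function names and the usage keys are illustrative.

```python
import socket

# (first_port, last_port, purpose, node_to_node, node_to_client, administration)
PORT_TABLE = [
    (8091, 8091, "Web Administration Port", True, True, True),
    (11209, 11209, "Internal Cluster Port", True, False, False),
    (11210, 11210, "Internal Cluster Port", True, True, False),
    (4369, 4369, "Erlang Port Mapper (epmd)", True, False, False),
    (21100, 21199, "Node data exchange", True, False, False),
]

USAGE_COLUMNS = {"node_to_node": 3, "node_to_client": 4, "administration": 5}


def ports_for(usage):
    """Return the sorted list of ports that must be open for one usage type."""
    column = USAGE_COLUMNS[usage]
    ports = []
    for row in PORT_TABLE:
        if row[column]:
            ports.extend(range(row[0], row[1] + 1))
    return sorted(ports)


def is_reachable(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

A check from a client machine could then loop over `ports_for("node_to_client")` and report any port for which `is_reachable` returns False.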

Installing Couchbase Server¶

To install Couchbase Server on your machine you must download the appropriate package for your chosen platform from http://www.couchbase.com/downloads. For each platform, follow the corresponding platform-specific instructions.

Red Hat Linux Installation¶

The RedHat installation uses the RPM package. Installation is supported on RedHat and RedHat based operating systems such as CentOS.

To install, use the rpm command-line tool with the RPM package that you downloaded. You must be logged in as root (Superuser) to complete the installation:

root-shell> rpm --install couchbase-server version.rpm

Where version is the version number of the downloaded package.

Once the rpm command has been executed, Couchbase Server starts automatically, and is configured to start automatically during boot under runlevels 2, 3, 4, and 5. Refer to the RedHat RPM documentation for more information about installing packages using RPM.

Once installation has completed, the installation process will display a message similar to that below:

Starting Couchbase server: [ OK ]

You have successfully installed Couchbase Server.

Please browse to http://hostname:8091/ to configure your server.

Please refer to http://couchbase.com/support for

additional resources.

Please note that you have to update your firewall configuration to

allow connections to the following ports: 11211, 11210, 4369, 8091

and from 21100 to 21199.

By using this software you agree to the End User License Agreement.

See /opt/couchbase/LICENSE.txt.

Once installed, you can use the RedHat chkconfig command to manage the Couchbase Server service, including checking the current status and creating the links to enable and disable automatic startup. Refer to the RedHat documentation for instructions.

To continue installation you must open a web browser and access the web administration interface. See Setting up Couchbase Server.

Ubuntu Linux Installation¶

The Ubuntu installation uses the DEB package.

To install, use the dpkg command-line tool with the DEB file that you downloaded. The following example uses sudo, which requires root access to allow installation:

shell> sudo dpkg -i couchbase-server version.deb

Where version is the version number of the downloaded package.

Once the dpkg command has been executed, Couchbase Server starts automatically, and is configured to start automatically during boot under runlevels 2, 3, 4, and 5. Refer to the Ubuntu documentation for more information about installing packages using the Debian package manager.

Once installation has completed, the installation process will display a message similar to that below:

Selecting previously deselected package couchbase-server.

(Reading database ... 218698 files and directories currently installed.)

Unpacking couchbase-server (from couchbase-server-community_x86_64_beta.deb) ...

Setting up couchbase-server (2-0~basestar) ...

* Started Couchbase server

You have successfully installed Couchbase Server.

Please browse to http://tellurium-internal:8091/ to configure your server.

Please refer to http://couchbase.com for additional resources.

Please note that you have to update your firewall configuration to

allow connections to the following ports: 11211, 11210, 4369, 8091

and from 21100 to 21199.

By using this software you agree to the End User License Agreement.

See /opt/couchbase/LICENSE.txt.

Once installed, you can use the service command to manage the Couchbase Server service, including checking the current status. Refer to the Ubuntu documentation for instructions.

To continue installation you must open a web browser and access the web administration interface. See Setting up Couchbase Server.

Microsoft Windows Installation¶

To install on Windows you must download the Windows installer package. This is supplied as a Windows executable. You can install the package either using the GUI installation process, or by using the unattended installation process.

GUI Installation¶

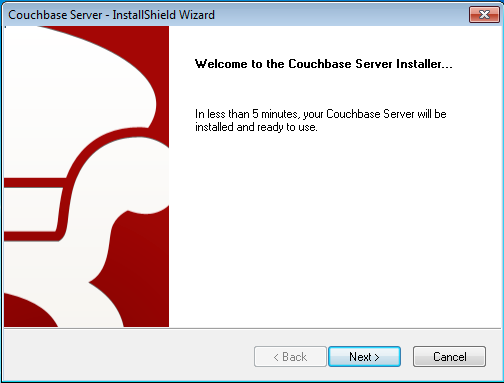

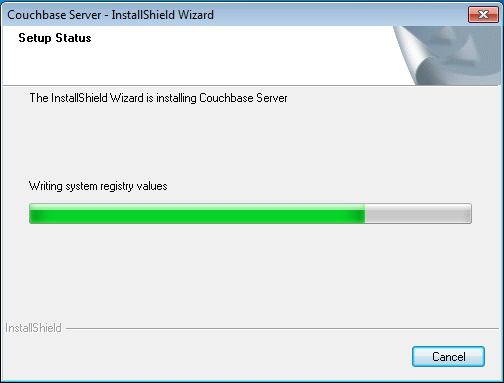

To use the GUI installer, double click on the downloaded executable file.

The installer will launch and prepare for installation. You can cancel this process at any time. Once completed, you will be provided with the welcome screen.

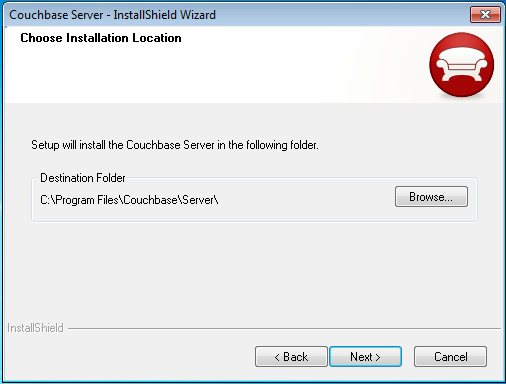

Click Next to start the installation. You will be prompted with the Installation Location screen. You can change the location where the Couchbase Server application is located. Note that this does not configure the location where the persistent data will be stored, only the location of the application itself. To select the install location, click the Browse button to select the folder. Click Next to continue the installation.

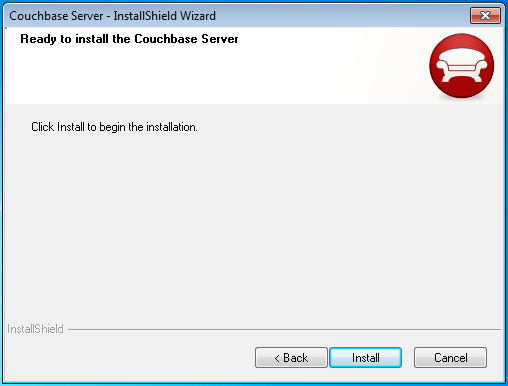

Configuration has now been completed. You will be prompted to confirm that you want to continue installation. Click Next to confirm the installation and start the installation process.

The install will copy over the necessary files to the system. During the installation process, the installer will also check to ensure that the default administration port is not already in use by another application. If the default port is unavailable, the installer will prompt for a different port to be used for administration of the Couchbase Server.

Once the installation process has been completed, you will be prompted with the completion screen. This indicates that the installation has been completed and your Couchbase Server is ready to be set up and configured. When you click Finish, the installer will quit and automatically open a web browser with the Couchbase Server setup window.

To continue installation you should follow the server setup instructions. See Setting up Couchbase Server.

Unattended Installation¶

The unattended installation process works by first recording your required installation settings using the GUI installation process outlined above. The settings are saved to a file, which you can then use as the option input for future installations.

To record your installation options, open a Command Terminal or Powershell and start the installation executable with the /r command-line option:

C:\Downloads> couchbase_server_version.exe /r

You will be prompted with the installation choices as outlined above, but the installation process will not actually be completed. Instead, your option choices will be recorded in the file C:\Windows\setup.iss.

To perform an installation using a previously recorded setup file, copy the setup.iss file into the same directory as the installer executable. Run the installer from the command-line, this time using the /s option.

C:\Downloads> couchbase_server_version.exe /s

You can repeat this process on multiple machines by copying the executable package and the setup.iss file to each machine.

Mac OS X Installation¶

The Mac OS X installation uses a Zip file which contains a standalone application that can be copied to the Applications folder or to any other location you choose. The installation location does not affect the location of the Couchbase data files.

To install:

Download the Mac OS X Zip file.

Double-click the downloaded Zip installation file to extract the contents. This will create a single file, the Couchbase.app application.

Drag and drop the Couchbase.app to your chosen installation folder, such as the system Applications folder.

Once the application has been copied to your chosen location, you can double-click on the application to start it. The application itself has no user interface. Instead, the Couchbase application icon will appear in the menubar on the right-hand side. If there is no active configuration for Couchbase, then the Couchbase Web Console will be opened and you will be asked to complete the Couchbase Server setup process. See Setting up Couchbase Server for more details.

The Couchbase application runs as a background application. Clicking on the menubar gives you a list of operations that can be performed. For more information, see Startup and Shutdown on Mac OS X.

The command line tools are included within the Couchbase Server application directory. You can access them within Terminal by using the full location of the Couchbase Server installation. By default, this is /Applications/Couchbase Server.app/Contents/Resources/couchbase-core/bin/.

Upgrading to Couchbase Server 1.8¶

Couchbase Server supports upgrades from the previous major release version (Membase Server 1.7) to any minor release within Couchbase Server 1.8, or between minor releases within Couchbase Server 1.8.

Upgrades using either the online or offline method are supported only when upgrading from Membase Server 1.7 to Couchbase Server 1.8. For cluster upgrades older than Membase Server 1.7, you must upgrade to Membase Server 1.7.2 first.

For information on upgrading to Membase 1.7.x, see .

A known issue exists when performing a rolling upgrade from Membase Server 1.7.1 to Couchbase Server 1.8. The problem manifests itself as the rebalance process failing to complete effectively. You should perform an offline (in-place) upgrade. See Offline (in-place) Upgrade Process, for more information.
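The upgrade rules for Membase Server clusters described above can be summarised as a small decision function. This is a sketch of one reading of those rules, not an official compatibility matrix; the function name and the shape of the return value are hypothetical, and it deliberately covers only clusters that are still on Membase Server.

```python
def plan_upgrade(current):
    """Return the hops needed to reach Couchbase Server 1.8 from Membase Server.

    current is a version tuple such as (1, 7, 1). Each hop is a
    (target, methods) pair. An empty methods list means this section does not
    state which upgrade methods apply to that hop.
    """
    if current >= (1, 8):
        raise ValueError("sketch only covers Membase Server versions before 1.8")
    hops = []
    if current < (1, 7):
        # Clusters older than Membase Server 1.7 must reach 1.7.2 first.
        hops.append(("Membase Server 1.7.2", []))
        current = (1, 7, 2)
    if current == (1, 7, 1):
        # Known issue: a rolling upgrade from 1.7.1 may fail during rebalance.
        methods = ["offline"]
    else:
        methods = ["online", "offline"]
    hops.append(("Couchbase Server 1.8", methods))
    return hops
```

For example, a cluster on Membase Server 1.6.5 would get two hops, while a cluster on 1.7.1 would get a single hop restricted to the offline method.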

The upgrade process for a cluster can be performed in two ways:

-

Online (rolling) Upgrades

Online upgrades enable you to upgrade your cluster without taking your cluster down, allowing your application to continue running. Using the Online upgrade method, individual nodes are removed from the cluster (using rebalancing), upgraded, and then brought back into action within the cluster.

Online upgrades naturally take a long time, as each node must be taken out of the cluster, upgraded, and then brought back in. However, because the cluster can be upgraded without taking either the cluster or associated applications down, it can be a more effective method of upgrading.

For full instructions on performing an online upgrade, see Online (rolling) Upgrade Process.

Starting with Couchbase Server 1.8.1, you can also make use of the swap rebalance functionality to perform an online upgrade with reduced performance impact on your cluster. For more information, see Upgrading to Couchbase Server 1.8.1 Using Swap Rebalance.

-

Offline (in-place) Upgrades

Offline upgrades involve taking your application and Couchbase Server cluster offline, upgrading every node within the cluster while the cluster is down, and then restarting the upgraded cluster.

Offline upgrades can be quicker because the upgrade process can take place simultaneously on every node in the cluster. The cluster, though, must be shut down for the upgrade to take place. This disables both the cluster and all the applications that rely on it.

For full instructions on performing an offline upgrade, see Offline (in-place) Upgrade Process.

| Feature | Online Upgrades | Offline Upgrades |

|---|---|---|

| Applications Remain Available | ✓ | ✗ |

| Cluster Stays in Operation | ✓ | ✗ |

| Cluster must be Shutdown | ✗ | ✓ |

| Time Required | Requires Rebalance, Upgrade, Rebalance per Node | Entire Cluster is Upgraded at Once |

As a best practice, take a backup before beginning any upgrade. See Backup and Restore with Couchbase.

For information on backing up Membase 1.7.x, see .

Online (rolling) Upgrade Process¶

Within an online or rolling upgrade, the upgrade process can take place without taking down the cluster or the associated application. This means that the cluster and applications can continue to function while you upgrade the individual nodes within the cluster.

The online upgrade process makes use of the auto-sharding and rebalancing functionality within Couchbase Server to enable one or more nodes within the cluster to be temporarily removed from the cluster, upgraded, and then re-enabled within the cluster again.

To perform an online upgrade of your cluster:

-

Depending on the size and activity of your cluster, choose one or more nodes to be temporarily removed from the cluster and upgraded. You can upgrade one node at a time, or if you have capacity, multiple nodes by taking them out of the cluster at the same time.

If necessary, you can add new nodes to your cluster to maintain performance while your existing nodes are upgraded.

-

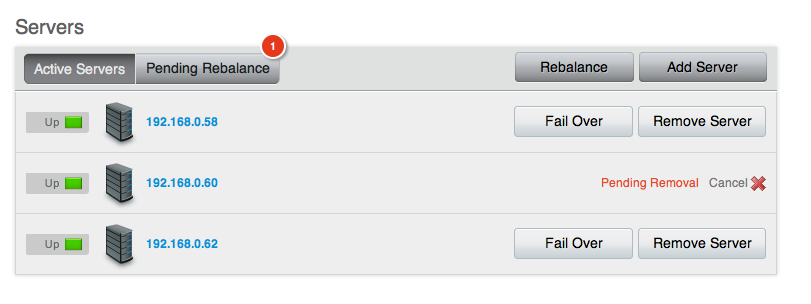

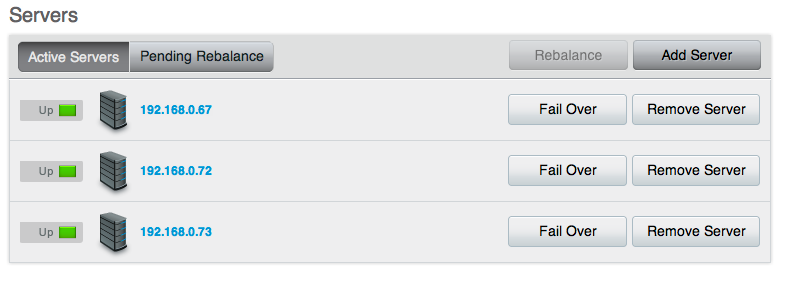

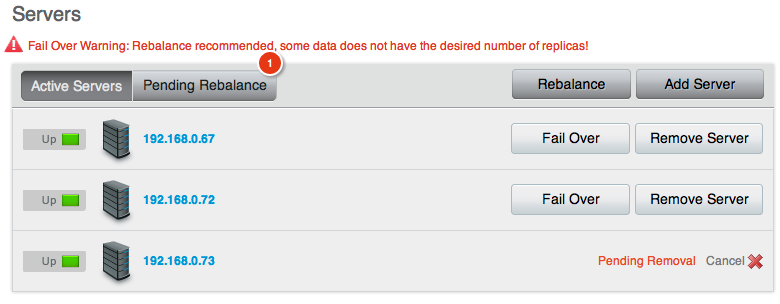

On the Manage->Server Nodes screen, click Remove Server. This marks the server for removal from the cluster, but does not actually remove it.

-

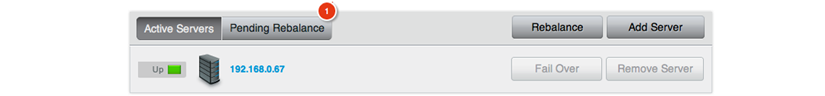

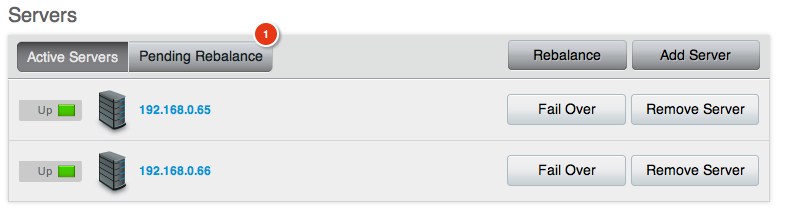

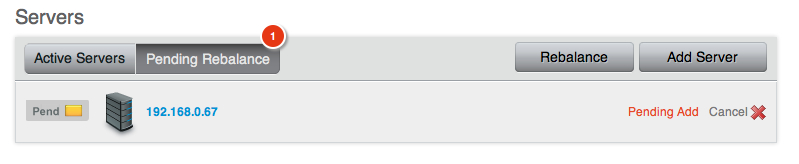

The

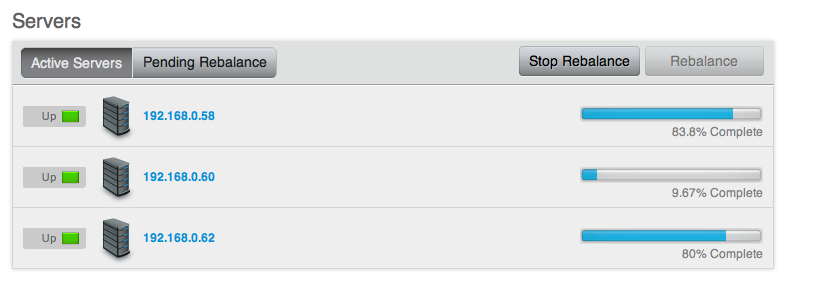

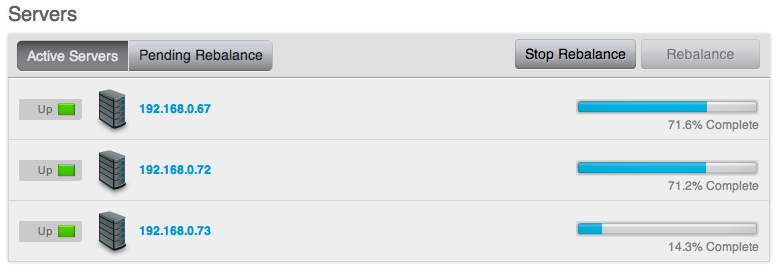

Pending Rebalancewill show the number of servers that require a rebalance to remove them from the cluster. Click theRebalancebutton.

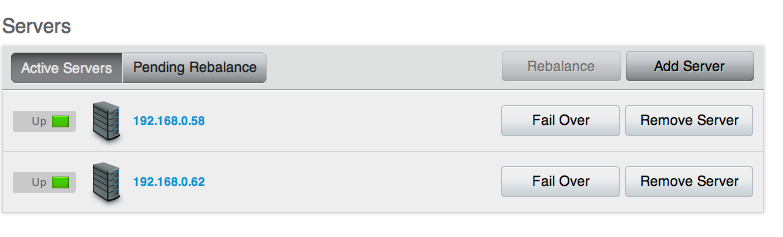

This will start the rebalance process. Once rebalancing has been completed, the Server Nodes display should show only the remaining (active) nodes in your cluster.

On the node to be upgraded, stop the Couchbase Server process. For guidance on specific platforms, see Startup and Shutdown of Couchbase Server.

With Couchbase Server shutdown on the node, you can perform a standard node upgrade to the new version of Couchbase Server. See Node Upgrade Process for details.

Couchbase Server should be started automatically after the upgrade. If not, restart the Couchbase Server process, using the methods described in Startup and Shutdown of Couchbase Server.

-

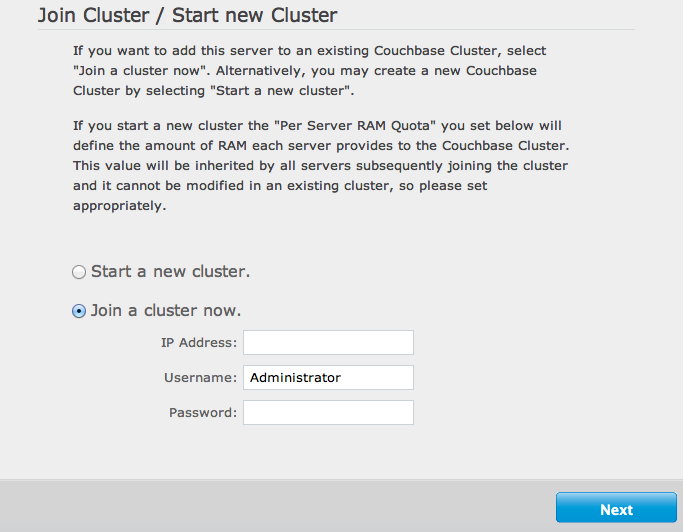

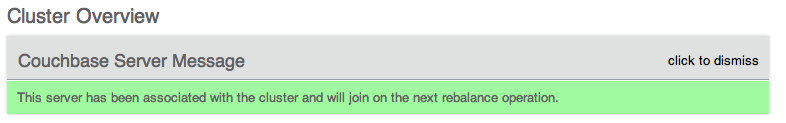

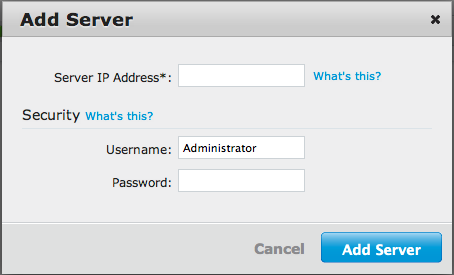

With the node upgraded, you need to add the node back to the cluster.

On an existing node within the running cluster, navigate to the

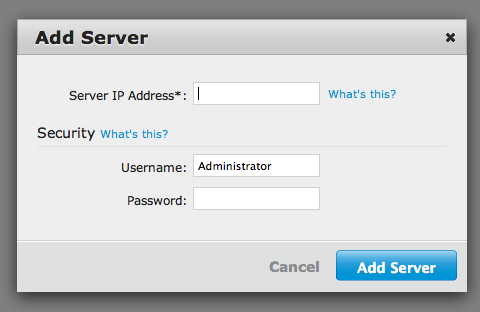

Manage-gt;Server Nodespage. Click theAdd Serverbutton. You will be prompted to enter the IP address and username/password of the server to add back to the cluster.

The Pending Rebalance count will indicate that servers need to be rebalanced into the cluster. Click Rebalance to rebalance the cluster, and bring the node back into production.

You will need to repeat the above sequence for each node within the cluster in order to upgrade the entire cluster to the new version.
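The sequence above (remove, rebalance, upgrade, add back, rebalance) can be modelled as a simple loop. This sketch only simulates the procedure on an in-memory dictionary to show the order of operations; it does not talk to a real cluster, and the function name and log format are hypothetical.

```python
def rolling_upgrade(cluster, new_version, batch_size=1):
    """Simulate an online upgrade on a dict of node name -> version.

    The cluster dict is updated in place. Returns a log of the rebalance
    operations performed, in order.
    """
    if batch_size >= len(cluster):
        raise ValueError("at least one node must stay in the cluster")
    log = []
    pending = [name for name, version in cluster.items() if version != new_version]
    while pending:
        batch, pending = pending[:batch_size], pending[batch_size:]
        # Remove Server, then Rebalance: the nodes leave the cluster.
        for name in batch:
            del cluster[name]
        log.append("rebalance out: " + ", ".join(batch))
        # Stop, upgrade and restart each removed node, then Add Server and
        # Rebalance again to bring the nodes back into production.
        for name in batch:
            cluster[name] = new_version
        log.append("rebalance in: " + ", ".join(batch))
    return log
```

Each node costs two rebalance operations in this model, which is why an online upgrade takes longer overall than an offline one.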

Upgrading to Couchbase Server 1.8.1 Using Swap Rebalance¶

Couchbase Server 1.8.1¶

Swap rebalance functionality is available in Couchbase Server 1.8.1.

You can make use of the Swap Rebalance feature to upgrade your servers to Couchbase Server 1.8.1 without reducing the performance of your cluster. For background information on the improvements with swap rebalance, see Swap Rebalance.

You must apply a patch to enable the swap rebalance functionality during upgrade. See step 3 below.

You will need one spare node to start the upgrade process.

Install Couchbase Server 1.8.1 on one spare node.

-

Add the new node with Couchbase Server 1.8.1 to the cluster.

For swap rebalance to take effect, the number of nodes being removed and added to the cluster must be identical. Do not rebalance the cluster until the new Couchbase Server 1.8.1 node has become orchestrator of the new cluster.

You must wait for the new node to be identified within the cluster and identify itself as the orchestrator node. This will ensure that the node will manage the rebalance operation and perform a swap rebalance operation. You can check for this by opening the Log portion of the Web UI and looking for the following sequence of messages attributed to the new node:

Node ns_1@10.3.2.147 joined cluster

Haven't heard from a higher priority node or a master, so I'm taking over.

Current master is older and I'll try to takeover (repeated 1 times)

Once the new node has been assigned as the orchestrator, all rebalances performed will be swap rebalances, assuming they meet the swap rebalance criteria.

-

Download the patch from http://packages.couchbase.com/releases/1.8.1/MB-5895.erl. This patch must be applied only for the first rebalance to enable swap rebalance during the upgrade process.

You must apply the patch to the newly added Couchbase Server 1.8.1 node. To apply the patch:

-

For Linux :

Execute the following command on the new Couchbase Server 1.8.1 node:

shell> /opt/couchbase/bin/curl -v --user Administrator:password -X POST \
    -d @MB-5895.erl http://localhost:8091/diag/eval

The output should be similar to the following, showing the connection opened without an explicit server response:

* About to connect() to localhost port 8091 (#0)

* Trying 127.0.0.1... connected

* Connected to localhost (127.0.0.1) port 8091 (#0)

* Server auth using Basic with user 'Administrator'

> POST /diag/eval HTTP/1.1

> Authorization: Basic QWRtaW5pc3RyYXRvcjpUYW1zaW4=

> User-Agent: curl/7.21.4-DEV (i686-pc-linux-gnu) libcurl/7.21.4-DEV zlib/1.2.3.4

> Host: localhost:8091

> Accept: */*

> Content-Length: 1797

> Content-Type: application/x-www-form-urlencoded

> Expect: 100-continue

>

< HTTP/1.1 100 Continue

< HTTP/1.1 200 OK

< Server: MochiWeb/1.0 (Any of you quaids got a smint?)

< Date: Tue, 17 Jul 2012 15:29:17 GMT

< Content-Length: 2

<

* Connection #0 to host localhost left intact

* Closing connection #0

If the command fails for any reason, please verify the command and resubmit.

-

For Windows :

Open a command prompt and execute the following command on the new Couchbase Server 1.8.1 node:

C:\Program Files\Couchbase\Server\bin> curl -v --user Administrator:password -X POST -d @MB-5895.erl http://localhost:8091/diag/eval

The output should be similar to the following, showing the connection opened and no explicit error response from the server:

* timeout on name lookup is not supported

* About to connect() to localhost port 8091 (#0)

* Trying 127.0.0.1... connected

* Connected to localhost (127.0.0.1) port 8091 (#0)

* Server auth using Basic with user 'Administrator'

> POST /diag/eval HTTP/1.1

> Authorization: Basic QWRtaW5pc3RyYXRvcjpUYW1zaW4=

> User-Agent: curl/7.21.4-DEV (i686-pc-linux-gnu) libcurl/7.21.4-DEV zlib/1.2.3.4

> Host: localhost:8091

> Accept: */*

> Content-Length: 1405

> Content-Type: application/x-www-form-urlencoded

> Expect: 100-continue

>

< HTTP/1.1 100 Continue

< HTTP/1.1 200 OK

< Server: MochiWeb/1.0 (Any of you quaids got a smint?)

< Date: Tue, 17 Jul 2012 15:29:17 GMT

< Content-Length: 2

<

* Connection #0 to host localhost left intact

* Closing connection #0

If the command fails for any reason, please verify the command and resubmit.

-

-

Mark one of your existing Couchbase 1.8.0 nodes for removal from the cluster.

-

Perform a rebalance operation.

The rebalance will operate as a swap rebalance and move the data directly from the Couchbase 1.8.0 node to the new Couchbase 1.8.1 node.

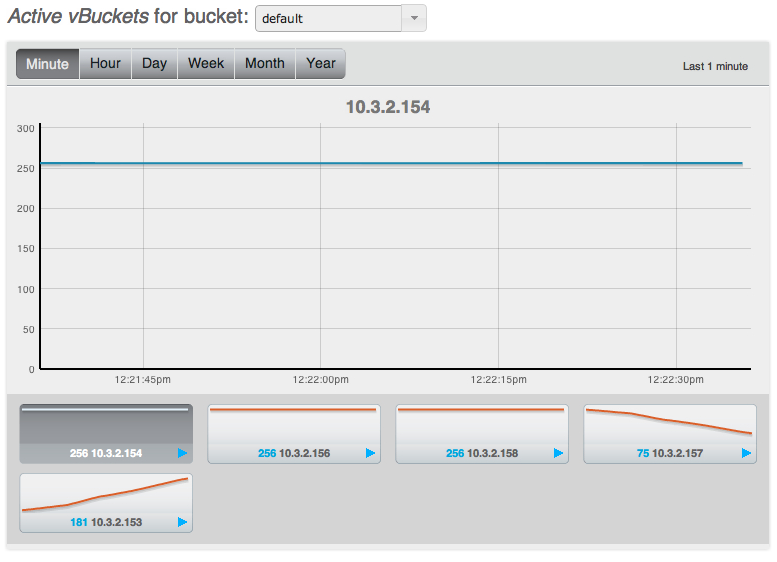

You can monitor the progress by viewing the

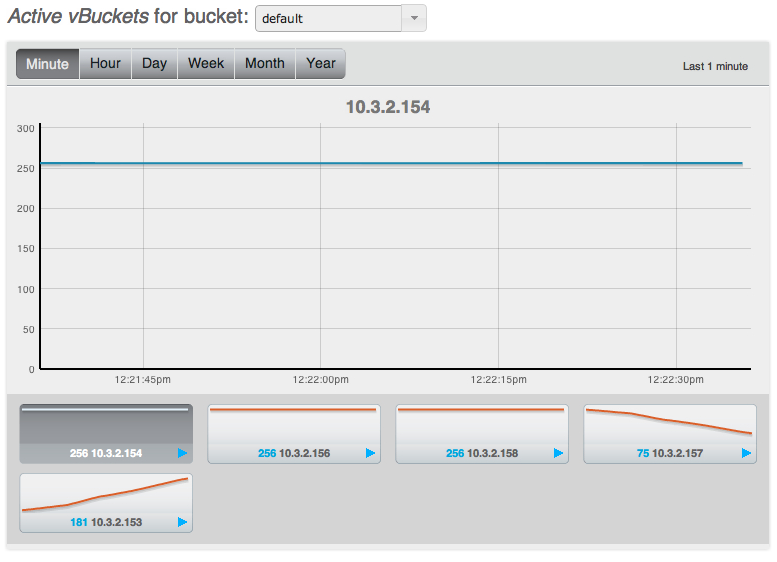

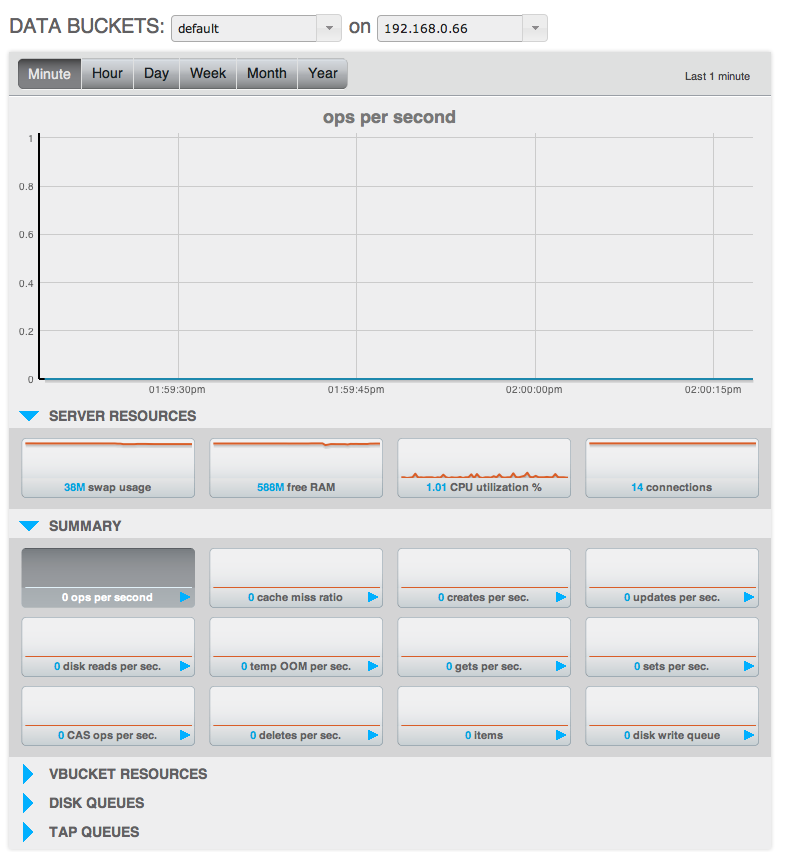

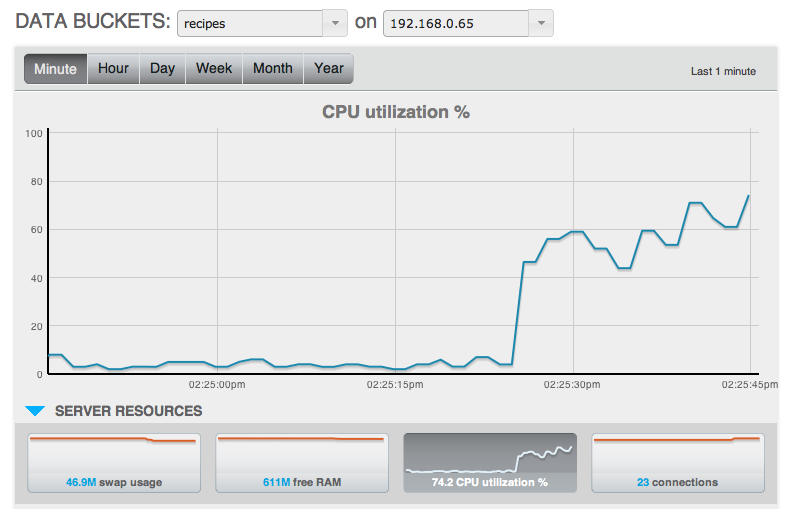

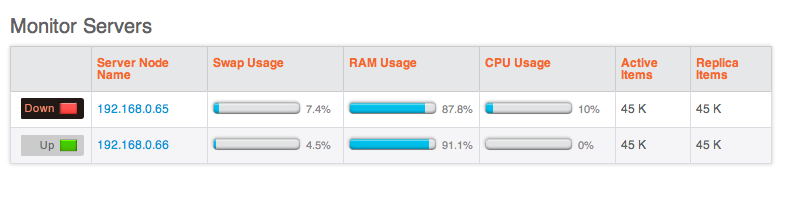

Active vBucketsstatistics. This should show the number of active vBuckets in the 1.8.0 node being removed as reducing, and the number of active vBuckets in the new 1.8.1 node increasing.You can monitor this through the UI by selecting the

vBucketsstatistic in theMonitoringsection of the Administration Web Console.

Repeat steps 1-5 (add/remove and swap rebalance operation), but without the patch upload for all the remaining Couchbase Server 1.8.0 nodes within the cluster so that each node is upgraded to Couchbase Server 1.8.1.

With a Couchbase Server 1.8.1 node in the cluster, you can perform a swap rebalance with multiple nodes, as long as the number of nodes being swapped out, and the number being swapped in are identical. For example, if you have four nodes in your cluster, after the initial rebalance, you can add three new nodes, and remove your existing three 1.8.0 nodes in one rebalance operation.

For more information on swap rebalance, see Swap Rebalance.
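The swap rebalance condition described above (equal numbers of nodes added and removed) and the multi-node example can be expressed as a short sketch. Both function names are hypothetical, and the planning helper assumes the first round always swaps a single node, as in the procedure above.

```python
def is_swap_rebalance(nodes_added, nodes_removed):
    """A rebalance qualifies as a swap when as many nodes join as leave."""
    return len(nodes_added) > 0 and len(nodes_added) == len(nodes_removed)


def swap_rounds(old_node_count, spares_per_round=1):
    """Return how many nodes are swapped in each rebalance.

    The first round swaps one node so that a 1.8.1 node can become the
    orchestrator; later rounds swap up to spares_per_round nodes at a time.
    """
    if old_node_count < 1 or spares_per_round < 1:
        raise ValueError("counts must be positive")
    rounds = [1]
    remaining = old_node_count - 1
    while remaining > 0:
        size = min(spares_per_round, remaining)
        rounds.append(size)
        remaining -= size
    return rounds
```

For the four-node example, `swap_rounds(4, spares_per_round=3)` describes one initial single-node swap followed by one three-node swap.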

Once complete, your entire cluster should now be running Couchbase Server 1.8.1.
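If curl is not convenient, the patch upload from step 3 could in principle be scripted. The sketch below builds an equivalent HTTP request with Python's standard library; it is an assumption that the server treats it the same way as the curl command, and the function name is hypothetical. Like curl's `-d @file` option, it strips carriage returns and newlines from the patch body before sending.

```python
import base64
import urllib.request


def build_patch_request(patch_text, username, password, host="localhost"):
    """Build a POST to /diag/eval that mirrors the curl command in step 3."""
    # curl's -d @file removes CR and LF characters from the file contents.
    body = patch_text.replace("\r", "").replace("\n", "").encode("utf-8")
    credentials = ("%s:%s" % (username, password)).encode("utf-8")
    request = urllib.request.Request(
        "http://%s:8091/diag/eval" % host, data=body, method="POST"
    )
    request.add_header(
        "Authorization", "Basic " + base64.b64encode(credentials).decode("ascii")
    )
    request.add_header("Content-Type", "application/x-www-form-urlencoded")
    return request


def upload_patch(path, username, password, host="localhost"):
    """Read the patch file and send it; returns the HTTP status code."""
    with open(path, "r") as handle:
        request = build_patch_request(handle.read(), username, password, host)
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.status
```

A status of 200, as in the sample output above, would indicate that the server accepted the request.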

Offline (in-place) Upgrade Process¶

The offline (or in-place) upgrade process requires you to shut down all the applications using the cluster, and the entire Membase Server or Couchbase Server cluster. With the cluster switched off, you can then perform the upgrade process on each of the nodes, and bring your cluster and application back up again.

It’s important to ensure that your disk write queue ( Disk Write Queue ) has been completely drained before shutting down the cluster service. This will ensure that all data has been persisted to disk and will be available after the upgrade. It is a best practice to turn off the application and allow the queue to drain prior to beginning the upgrade.

To upgrade an existing cluster using the offline method:

Turn off your application, so that no requests are going to your Membase Cluster. You can monitor the activity of your cluster by using the Administration Web Console.

With the application switched off, the cluster now needs to finish writing its stored information out to disk. This will ensure that when your cluster is restarted, all of your data remains available. You can check this by monitoring the Disk Write Queue within the Web Console. The disk write queue should reach zero (i.e. no data remaining to be written to disk).

-

On each node within the cluster:

-

Shut down the Membase Server or Couchbase Server process.

On the node to be upgraded, stop the Couchbase Server process. For guidance on specific platforms, see Startup and Shutdown of Couchbase Server.

With Couchbase Server shut down on the node, you can perform a standard node upgrade to the new version of Couchbase Server. See Node Upgrade Process for details.

Couchbase Server should be started automatically on each node as you perform the upgrade.

-

As the cluster is warming up again, you can monitor the status of the warmup process to determine when you can switch on your application. See Monitoring startup (warmup).

Once the cluster has been started up again, you can re-enable your application on the upgraded cluster.
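The wait for the disk write queue to drain, described in the steps above, can be sketched as a polling loop. How the queue length is obtained is left to a caller-supplied function, because this section does not specify a programmatic source for that statistic; all names here are hypothetical.

```python
import time


def wait_for_drain(read_queue_length, poll_seconds=5.0, timeout_seconds=3600.0,
                   sleep=time.sleep):
    """Poll until the disk write queue reports zero items.

    read_queue_length is a caller-supplied function returning the current
    queue length. Returns True once the queue is empty, False on timeout.
    """
    waited = 0.0
    while True:
        if read_queue_length() == 0:
            return True
        if waited >= timeout_seconds:
            return False
        sleep(poll_seconds)
        waited += poll_seconds
```

Only when this returns True would it be safe, in this model, to proceed to shutting down the nodes.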

Node Upgrade Process¶

Whether you are performing an online or offline upgrade, the steps for upgrading an individual node, including the shutdown, installation, and startup process, remain the same.

Download couchbase-server-edition_and_arch_version.

Back up the node data. To back up an existing Couchbase Server installation, use cbbackup. See Backing Up.

-

Backup the node specific configuration files. While the upgrade script will perform a backup of the configuration and data files, it is our recommended best practice to take your own backup of the files located at:

Platform Location Linux /opt/couchbase/var/lib/couchbase/config/config.datWindows C:\Program Files\Couchbase\Server\Config\var\lib\couchbase\config\config.dat -

Linux Upgrade Process from Couchbase Server 1.8.x

Red Hat/CentOS Linux

You can perform an upgrade install using the RPM package; this will keep the data and existing configuration.

root-shell> rpm -U couchbase-server-architecture_meta_current_version.rpm

Ubuntu/Debian Linux

You can perform a package upgrade by installing the updated .deb package:

shell> sudo dpkg -i couchbase-server-architecture___meta_current_release.deb

Windows Upgrade Process

If you are upgrading from Membase Server 1.7.2 or Couchbase Server 1.8.0 to Couchbase Server 1.8.1 you must perform additional steps. See Upgrading from Membase Server 1.7.2 and Couchbase Server 1.8.0 to Couchbase Server 1.8.1 (Windows only).

The Couchbase Server Windows installer will upgrade your server installation using the same installation location. For example, if you have installed Couchbase Server in the default location, C:\Program Files\Couchbase\Server, the Couchbase Server installer will copy new 1.8.1 files to the same location.

The TCP/IP port allocation on Windows by default includes a restricted number of ports available for client communication. For more information on this issue, including information on how to adjust the configuration and increase the available ports, see MSDN: Avoiding TCP/IP Port Exhaustion.
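On Linux, the configuration backup recommended in the node upgrade steps above can be sketched as a small shell function. This is only an illustration under stated assumptions: the function name backup_config and the backup directory in the usage comment are hypothetical, and the script copies a single file rather than replacing cbbackup for node data.

```shell
#!/bin/sh
# Copies a configuration file into a backup directory under a timestamped
# name and prints the path of the copy. Both arguments come from the caller.
backup_config() {
    src=$1
    dest_dir=$2
    [ -f "$src" ] || { echo "missing config file: $src" >&2; return 1; }
    mkdir -p "$dest_dir" || return 1
    target="$dest_dir/$(basename "$src").$(date +%Y%m%d%H%M%S)"
    cp -p "$src" "$target" || return 1
    echo "$target"
}

# Usage with the Linux location listed above (the backup directory is hypothetical):
# backup_config /opt/couchbase/var/lib/couchbase/config/config.dat /root/couchbase-config-backup
```

The timestamp suffix keeps earlier backups from being overwritten if the function is run more than once.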

Upgrading from Membase Server 1.7.2 and Couchbase Server 1.8.0 to Couchbase Server 1.8.1 (Windows only)¶

Due to a change in the packaging for Couchbase Server 1.8.1 on Windows you need to run the package installation twice in order to register the package and upgrade correctly. The correct steps are:

Download the Windows installer package for Couchbase Server 1.8.1.

Back up the node before running the upgrade process. If you are backing up an existing Membase Server 1.7.x installation, see Membase Server 1.7 Backup. For Couchbase Server 1.8.0, see Backing Up.

If you are upgrading from Membase Server 1.7.2, stop Membase Server. For Couchbase Server 1.8.0, stop Couchbase Server. For more information, see Startup and Shutdown on Windows. Wait until the server process has stopped completely before continuing.

Double-click on the downloaded package installer for Couchbase Server 1.8.1. The initial execution will update the registry information in preparation for the full upgrade.

Double-click on the downloaded package installer for Couchbase Server 1.8.1 a second time. This second installation will take you through the full installation process, upgrading your existing installation to the new version. Follow the on-screen instructions to perform the upgrade.

Once the process has completed, you can start Couchbase Server 1.8.1 and re-add and rebalance your node into your cluster.

Upgrading from Membase Server 1.7¶

If you are upgrading from Membase Server 1.7 you should take a backup and copy your configuration files, before uninstalling the existing Membase Server product. This will keep the data files in place where they will be upgraded during the installation and startup of Couchbase Server 1.8.

Step-by-step instructions are provided below:

Download couchbase-server-edition_and_arch_version.

Back up the node data. Use mbbackup if you are upgrading from Membase Server 1.7.x (see Backup and Restore in Membase Server 1.7.x). If you are upgrading Couchbase Server, use cbbackup. See Backing Up.

Back up the node-specific configuration files. While the upgrade script will perform a backup of the configuration and data files, it is our recommended best practice to take your own backup of the files located at:

| Version | Platform | Location |
| --- | --- | --- |
| Membase Server 1.7.x | Linux | /opt/membase/var/lib/membase/config/config.dat |
| Membase Server 1.7.x | Windows | C:\Program Files\Membase\Server\Config\var\lib\membase\config.dat |

If you have multiple version subdirectories in your /etc/opt/membase directory, you must first clean up the directory so that only the most recent version remains.

Linux Upgrade Process from Membase Server 1.7.x

Linux package managers will prevent the couchbase-server package from being installed when there’s already a membase-server package installed.

Red Hat/CentOS Linux

Uninstall the existing membase-server package; this will keep the user’s db data and copies of their configuration.

root-shell> rpm -e membase-server

Install Couchbase Server 1.8 with the special environment variable flag, which forces an upgrade. The special environment variable is INSTALL_UPGRADE_CONFIG_DIR.

root-shell> INSTALL_UPGRADE_CONFIG_DIR=/opt/membase/var/lib/membase/config rpm -i couchbase-server-architecture_1.8.1.rpm

Ubuntu/Debian Linux

Uninstall the existing membase-server package; this will keep the user’s db data and copies of their configuration.

shell> sudo dpkg -r membase-server

Install Couchbase Server 1.8 with the special environment variable flag, which forces an upgrade. The special environment variable is INSTALL_UPGRADE_CONFIG_DIR.

shell> sudo INSTALL_UPGRADE_CONFIG_DIR=/opt/membase/var/lib/membase/config dpkg -i couchbase-server-architecture_1.8.1.deb

Windows Upgrade Process

The Couchbase Server Windows installer will upgrade your current Membase Server installation to Couchbase Server, using the same installation location. If you have installed Membase Server in the default location, C:\Program Files\Membase\Server, the Couchbase Server installer will copy the new files to the same location. Once the upgrade process is completed, you will see the Couchbase Server icon on the Desktop and under Start->Programs, replacing Membase Server.

After every node has been upgraded and restarted, you can monitor its “warming up” progress. For more details, see Monitoring startup (warmup). Once warmup is complete, turn your application back on.
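For Linux nodes, the uninstall-then-install sequence described above can be captured in a helper that prints the commands for review before anything is run. This is a hedged sketch only: the function name print_upgrade_commands and the CONFIG_DIR override variable are hypothetical, the package file name is whatever you downloaded, and the printed commands still need to be run as root (or via sudo).

```shell
#!/bin/sh
# Prints (does not run) the uninstall and install commands for a package type.
# CONFIG_DIR is a hypothetical override; it defaults to the Membase Server
# 1.7.x configuration directory used in the commands above.
print_upgrade_commands() {
    pkg_type=$1
    pkg_file=$2
    config_dir=${CONFIG_DIR:-/opt/membase/var/lib/membase/config}
    case "$pkg_type" in
        rpm)
            echo "rpm -e membase-server"
            echo "INSTALL_UPGRADE_CONFIG_DIR=$config_dir rpm -i $pkg_file"
            ;;
        deb)
            echo "dpkg -r membase-server"
            echo "INSTALL_UPGRADE_CONFIG_DIR=$config_dir dpkg -i $pkg_file"
            ;;
        *)
            echo "unknown package type: $pkg_type" >&2
            return 1
            ;;
    esac
}

# Usage: print_upgrade_commands rpm couchbase-server-architecture_1.8.1.rpm
```

Printing rather than executing keeps the sketch safe to try on a machine that is not being upgraded.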

Manually Controlled Upgrade Options¶

This section is not applicable to Windows.

By using environment variable flags during installation you may optionally take more control of the upgrade process and results. The available environment variables are:

INSTALL_UPGRADE_CONFIG_DIR

This variable sets the path of the previous version’s config directory. When this environment variable is defined, the rpm/dpkg scripts will upgrade configuration files and data records from Membase Server 1.7 to Couchbase Server 1.8.

The data directory defined and used by your Membase Server 1.7 installation will continue to be used by your upgraded Couchbase Server 1.8.1 instance. For example, if you had mounted/mapped special filesystems for use while running Membase Server 1.7, those paths will continue to be used after upgrading to Couchbase Server 1.8.1.

INSTALL_DONT_START_SERVER

When set to ‘1’, the rpm/dpkg scripts will not automatically start the Couchbase Server as their last step.

INSTALL_DONT_AUTO_UPGRADE

When set to ‘1’, the rpm/dpkg scripts will not automatically invoke the cbupgrade script that’s included in Couchbase Server 1.8.1, allowing you to manually invoke cbupgrade later. This may be useful in case you need to perform more debugging. This should be used with the INSTALL_DONT_START_SERVER=1 and INSTALL_UPGRADE_CONFIG_DIR=PATH environment variables.

Example flag usage for RedHat / CentOS:

INSTALL_DONT_START_SERVER=1 INSTALL_DONT_AUTO_UPGRADE=1 \

INSTALL_UPGRADE_CONFIG_DIR=/opt/membase/var/lib/membase/config \

rpm -i couchbase-server-community_x86_64_1.8.1.rpm

Example flag usage for Ubuntu:

INSTALL_DONT_START_SERVER=1 INSTALL_DONT_AUTO_UPGRADE=1 \

INSTALL_UPGRADE_CONFIG_DIR=/opt/membase/var/lib/membase/config \

dpkg -i couchbase-server-community_x86_64_1.8.1.deb

Example output when using flags, first uninstalling the existing Membase Server 1.7.x:

[root@localhost ~]# rpm -e membase-server

Stopping membase-server[ OK ]

warning: /opt/membase/var/lib/membase/config/config.dat saved as /opt/membase/var/lib/membase/config/config.dat.rpmsave

[root@localhost ~]# INSTALL_DONT_START_SERVER=1 INSTALL_DONT_AUTO_UPGRADE=1 INSTALL_UPGRADE_CONFIG_DIR=/opt/membase/var/lib/membase/config rpm -i couchbase-server-community_x86_64_1.8.1r-55-g80f24f2.rpm

Upgrading couchbase-server ...

/opt/couchbase/bin/cbupgrade -c /opt/membase/var/lib/membase/config -a yes

Skipping cbupgrade due to INSTALL_DONT_AUTO_UPGRADE ...

Skipping server start due to INSTALL_DONT_START_SERVER ...

You have successfully installed Couchbase Server.

Please browse to http://localhost.localdomain:8091/ to configure your server.

Please refer to http://couchbase.com for additional resources.

Please note that you have to update your firewall configuration to

allow connections to the following ports: 11211, 11210, 4369, 8091

and from 21100 to 21299.

By using this software you agree to the End User License Agreement.

See /opt/couchbase/LICENSE.txt.

[root@localhost ~]#

After using the INSTALL_DONT_AUTO_UPGRADE option, you can use the /opt/couchbase/bin/cbupgrade program later to fully control the upgrade steps.

Its command-line options include:

[root@localhost ~]# /opt/couchbase/bin/cbupgrade -h

Usage: cbupgrade [-c path/to/previous/config/dir] [-a AUTO] [-d FILENAME] [-n] [-s FACTOR]

-c <path/to/previous/config/dir>

-- example: -c /etc/opt/membase/1.6.5.3.1

-a <yes|no>

-- automatic or non-interactive mode; default is 'no';

'yes' to force automatic 'yes' answers to all questions

-d <dbdir_output_file>

-- retrieve db directory from config file and exit

-n -- dry-run; don't actually change anything

-s <free_disk_space_needed_factor>

-- free disk space needed, as a factor of current bucket usage

-- default value is 2.0

-- example: -s 1.0

The cbupgrade program can be run using the -n flag, which tells cbupgrade not to modify any files, but just to describe the changes it would make. For example:

[root@localhost ~]# /opt/couchbase/bin/cbupgrade -c /opt/membase/var/lib/membase/config -n

Dry-run mode: no actual upgrade changes will be made.

Upgrading your Couchbase Server to 1.8.1r-55-g80f24f2.

The upgrade process might take awhile.

Analysing...

Previous config.dat file is /opt/membase/var/lib/membase/config/config.dat.rpmsave

Target node: ns_1@127.0.0.1

Membase/Couchbase should not be running.

Please use: /etc/init.d/couchbase-server stop

or: /etc/init.d/membase-server stop

Is the Membase/Couchbase server already stopped? [yes|no]

yes

Database dir: /opt/membase/var/lib/membase/data

Is that the expected database directory to upgrade? [yes|no]

yes

Buckets to upgrade: default

Are those the expected buckets to upgrade? [yes|no]

yes

Checking disk space available for buckets in directory:

/opt/membase/var/lib/membase/data

Free disk bucket space wanted: 0.0

Free disk bucket space available: 177790963712

Free disk space factor: 2.0

Ok.

Analysis complete.

Proceed with config & data upgrade steps? [yes|no]

yes

SKIPPED (dry-run): Copying /opt/membase/var/lib/membase/config/config.dat.rpmsave

SKIPPED (dry-run): cp /opt/membase/var/lib/membase/config/config.dat.rpmsave /opt/couchbase/var/lib/couchbase/config/config.dat

Ensuring bucket data directories.

SKIPPED (dry-run): Ensuring bucket data directory: /opt/membase/var/lib/membase/data/default-data

SKIPPED (dry-run): mkdir -p /opt/membase/var/lib/membase/data/default-data

SKIPPED (dry-run): Ensuring dbdir owner/group: /opt/membase/var/lib/membase/data

SKIPPED (dry-run): chown -R couchbase:couchbase /opt/membase/var/lib/membase/data

SKIPPED (dry-run): Ensuring dbdir owner/group: /opt/membase/var/lib/membase/data

SKIPPED (dry-run): chown -R couchbase:couchbase /opt/membase/var/lib/membase/data

Upgrading buckets.

Skipping already converted bucket: /opt/membase/var/lib/membase/data/default-data

Skipping already converted bucket: /opt/membase/var/lib/membase/data/test0-data

Done.
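The -s option and the disk space lines in the output above suggest a simple pre-check that you can reproduce before running cbupgrade. The sketch below assumes the check compares the available space against the factor multiplied by current bucket usage; this is an interpretation of the option's description, not the confirmed cbupgrade logic, and the function name has_enough_space is hypothetical.

```shell
#!/bin/sh
# Succeeds when available >= factor * used. Byte counts can exceed 32-bit
# integer range, so awk performs the arithmetic in floating point.
# ASSUMPTION: this mirrors the intent of "cbupgrade -s", not its exact code.
has_enough_space() {
    used=$1
    available=$2
    factor=${3:-2.0}   # 2.0 is the documented default for -s
    awk -v u="$used" -v a="$available" -v f="$factor" \
        'BEGIN { exit !(a + 0 >= u * f) }'
}

# Usage: has_enough_space USED_BYTES AVAILABLE_BYTES [FACTOR]
# has_enough_space 1000000000 177790963712 2.0 && echo "enough space"
```

With the values from the dry run shown above (no bucket usage and 177790963712 bytes available), the check passes for any factor.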

Upgrading from Community Edition to Enterprise Edition¶

To upgrade from Couchbase Server Community Edition to Couchbase Server Enterprise Edition, you can use one of two methods:

Perform an online upgrade installation

Using this method, you remove one or more nodes from the cluster and rebalance. On the nodes you have taken out of the cluster, uninstall the Couchbase Server Community Edition package, and install Couchbase Server Enterprise Edition. You can then add the new nodes back to the cluster and rebalance. This process can be repeated until the entire cluster is using the Enterprise Edition.

For more information on performing rolling upgrades, see Online (rolling) Upgrade Process.

Perform an offline upgrade

Using this method, you need to shut down the entire cluster, uninstall Couchbase Server Community Edition, and install Couchbase Server Enterprise Edition. The data files will be retained, and the cluster can be restarted.

For more information on performing offline upgrades, see Offline (in-place) Upgrade Process.

You should use the same version number when performing the migration process to prevent version differences causing issues during the upgrade process.

Setting up Couchbase Server¶

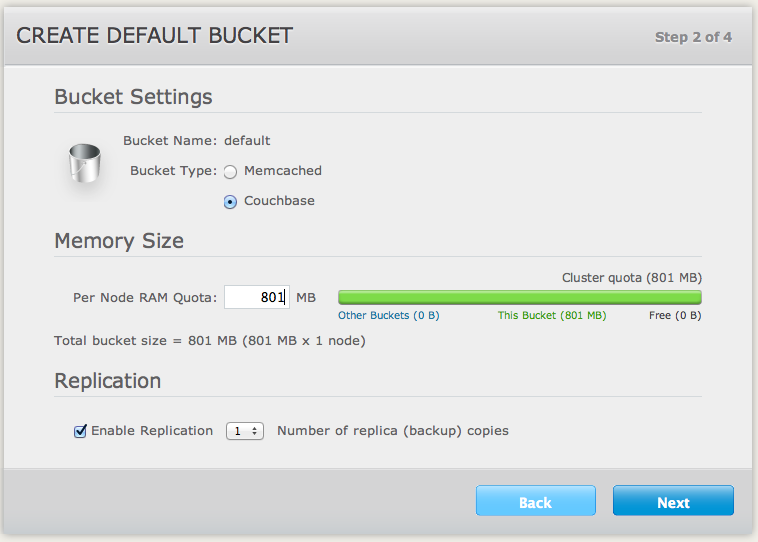

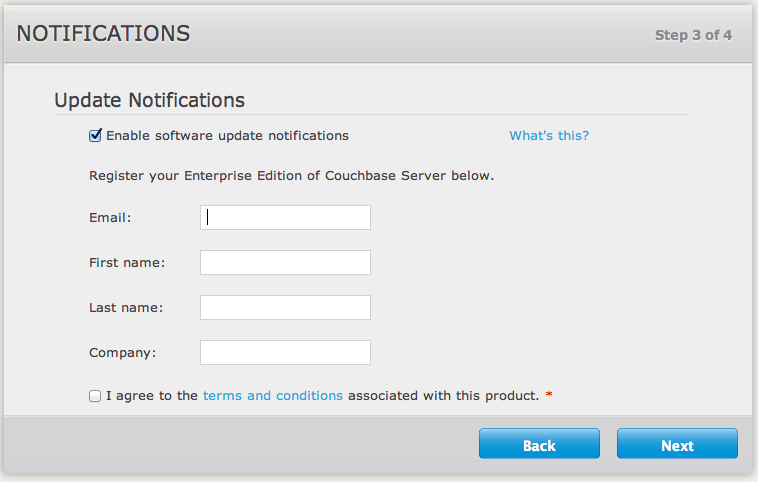

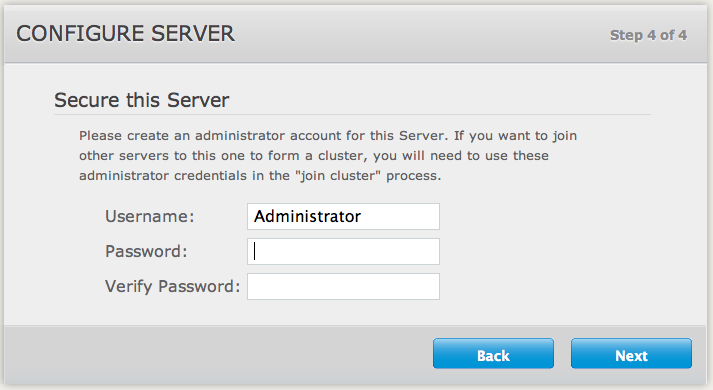

To set up a new Couchbase Server, you have a number of different options available. All of them require you to set the username and password. You can also optionally configure other settings, such as the port, RAM configuration, and the data file location, as well as create the first bucket, by using any of the following methods:

-

Using command-line tools

The command line toolset provided with your Couchbase Server installation includes